Building Effective AI Infrastructure for Modern Data Solutions

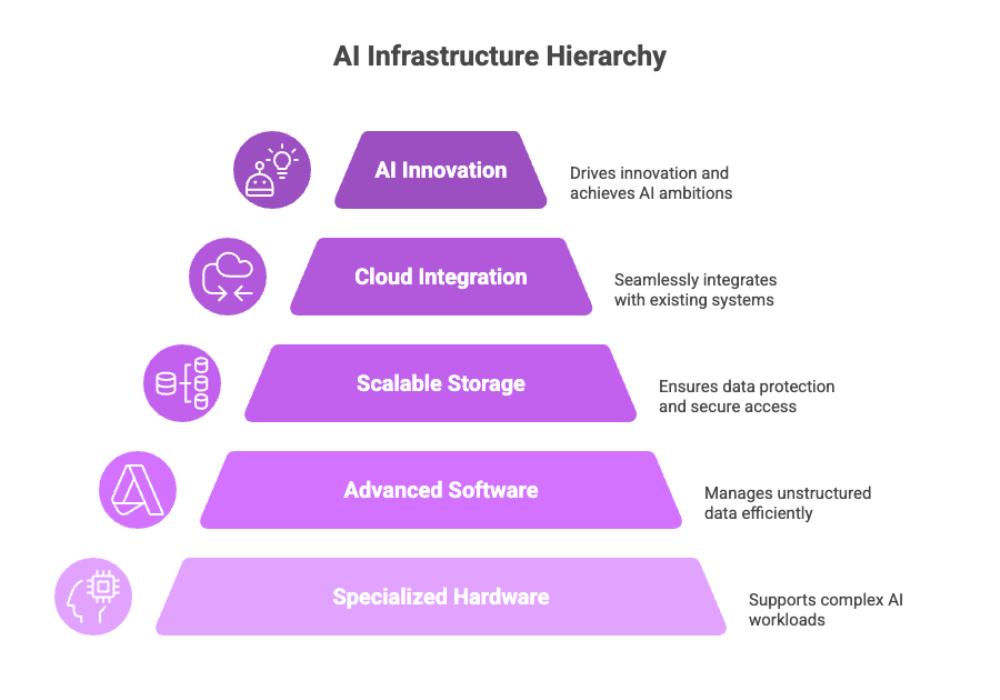

Artificial intelligence (AI) has revolutionized the way businesses operate, making AI infrastructure a critical component of success in a technology-driven world. A next-generation AI infrastructure stack combines specialized hardware and software to meet the compute- and data-intensive demands of AI and machine learning workloads. With the right infrastructure tools, scalable storage, and distributed file systems, organizations can manage large volumes of unstructured data and run complex algorithms in parallel.

AI infrastructure is at the heart of deploying AI models and running AI systems, enabling enterprises to use generative AI, deep learning, and foundation models. Combined with cloud infrastructure and sound data management practices, it supports data protection and secure access controls while integrating with existing systems. As AI research advances and AI applications expand, building infrastructure that supports AI workflows and accelerates model training is essential for driving innovation and achieving AI ambitions across industries.

Key Takeaways

- AI technology enables computers to simulate human reasoning and problem-solving; AI infrastructure is the hardware and software foundation that makes this possible at scale.
- Combined with the internet, sensors, and robotics, AI can perform tasks that typically require human input, such as operating vehicles and delivering insights from data. Much of this capability comes from machine learning models.
- Many AI applications depend on data- and compute-intensive machine learning workloads, which drives the need for robust AI infrastructure.
- AI powers applications such as question answering and automated decision-making, all of which run on AI infrastructure.

AI Infrastructure Foundation

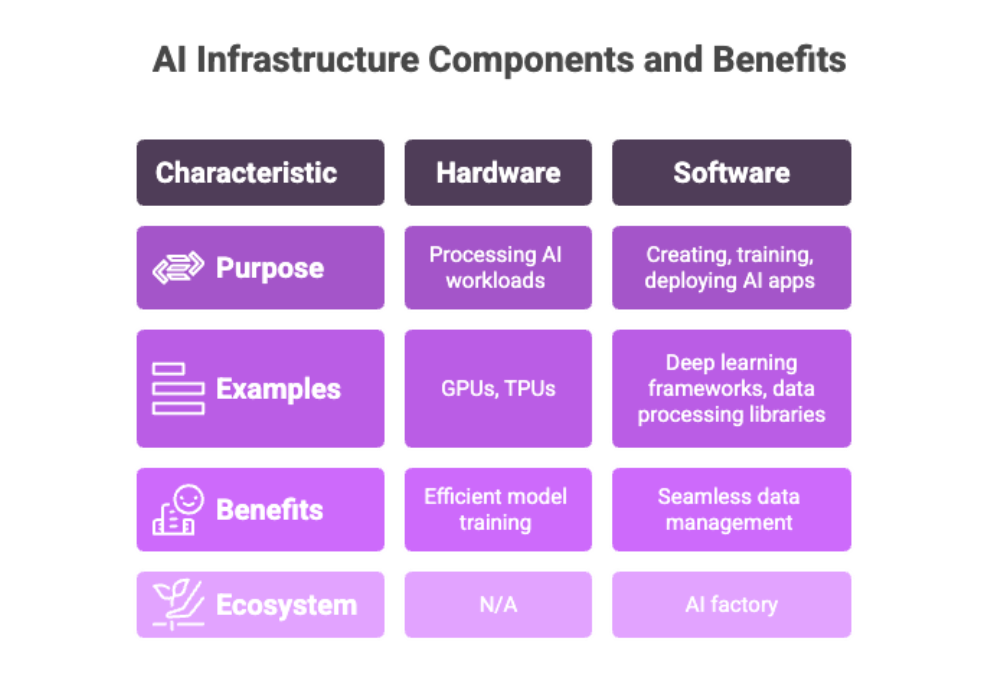

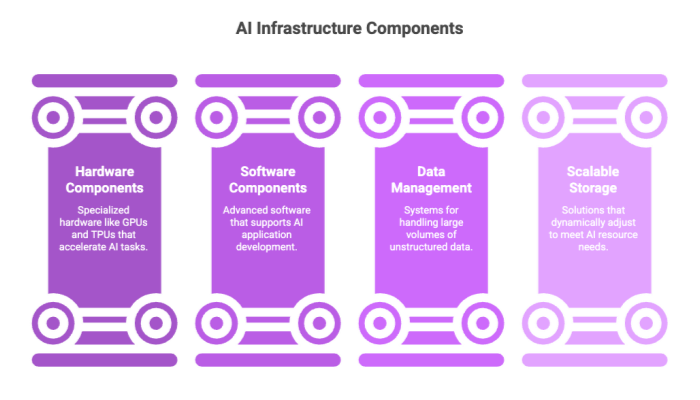

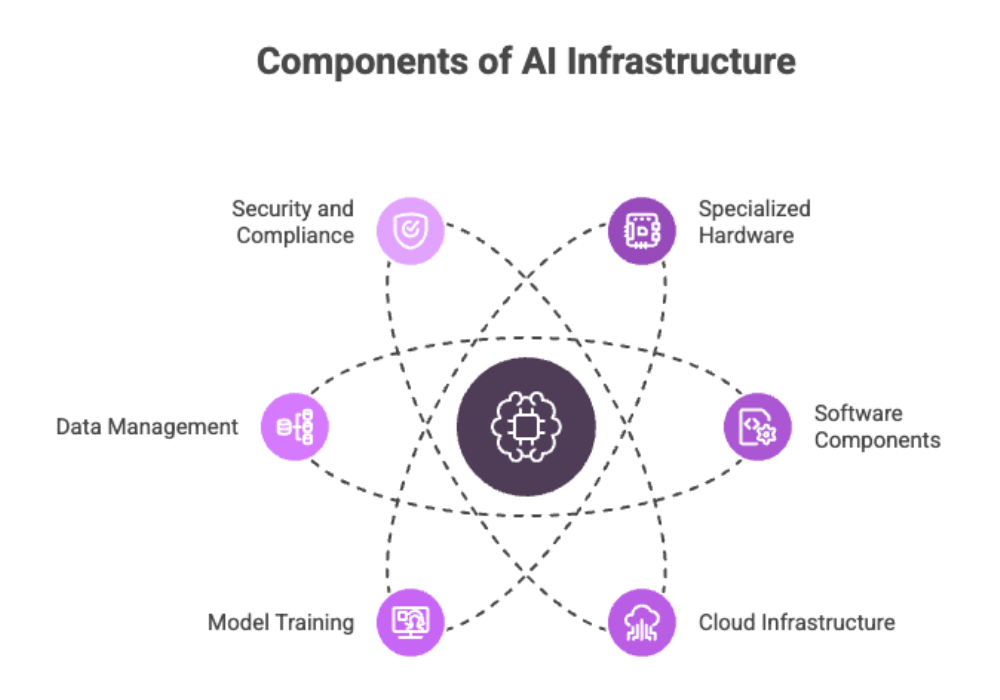

AI infrastructure, also known as the AI stack, comprises the integrated hardware and software components specifically designed to create, train, and deploy AI-powered applications. Central to this infrastructure are specialized hardware units such as graphics processing units (GPUs) and tensor processing units (TPUs), which provide the parallel processing capabilities essential for handling complex machine learning workloads efficiently.

Strong AI infrastructure supports a wide spectrum of AI applications, including chatbots, facial and speech recognition, computer vision, and generative AI applications. It ensures efficient model training and deployment by leveraging scalable storage solutions, distributed file systems, and advanced data processing libraries. By enabling seamless data ingestion, management, and processing, AI infrastructure empowers developers to build and run AI models effectively while streamlining AI workflows.

Enterprises across industries rely on cloud-based AI infrastructure for its scalability, flexibility, and performance, which lets them scale resources up or down as AI project demands change. A next-generation AI infrastructure stack pairs this elastic capacity with hardware and software optimized for AI tasks, including deep learning frameworks and AI infrastructure tools.

Many AI infrastructure ecosystems also incorporate the concept of an AI factory: a cohesive system that automates and orchestrates the entire AI development lifecycle, from data ingestion and model training to deployment and continuous improvement. Integration with existing systems is crucial for making use of legacy data and applications, which smooths adoption and maximizes the value AI brings to the business.
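To make the AI factory idea concrete, the lifecycle can be modeled as a dependency graph that an orchestrator runs in order, starting each stage only after its prerequisites finish. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The stage names, including the extra `validate` and `features` stages, and the `run_stage` function are hypothetical placeholders, not the API of any particular orchestration product.

```python
from graphlib import TopologicalSorter

# Hypothetical lifecycle stages; each key maps to the set of stages it depends on.
PIPELINE: dict[str, set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "features": {"ingest"},
    "train": {"validate", "features"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
    "monitor": {"deploy"},
}


def run_stage(name: str) -> str:
    # Placeholder: a real orchestrator would launch a job, container, or script here.
    return f"{name}: done"


def run_pipeline(graph: dict[str, set[str]]) -> list[str]:
    """Run every stage once, only after all of its dependencies have run."""
    order = list(TopologicalSorter(graph).static_order())
    return [run_stage(stage) for stage in order]


if __name__ == "__main__":
    for line in run_pipeline(PIPELINE):
        print(line)
```

Because `validate` and `features` depend only on `ingest`, a real orchestrator could run them concurrently; this sketch simply runs stages one at a time in a valid order.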

Overall, AI infrastructure solutions form the backbone of successful AI projects, enabling enterprises to deploy AI models securely and efficiently while fostering innovation through advanced AI research and development.

Building AI Infrastructure

Building AI infrastructure involves essential hardware and software components designed to support AI workloads and machine learning tasks, including complex matrix and vector computations. This infrastructure combines modern specialized hardware—such as graphics processing units (GPUs) and tensor processing units (TPUs)—with advanced software components to efficiently handle AI and machine learning application development.
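Why do matrix computations suit GPUs and TPUs? Each row (indeed each element) of a matrix product can be computed independently of the others, so an accelerator can work on thousands of them at once. The pure-Python sketch below only illustrates that independence by dispatching rows to a thread pool. Because of CPython's global interpreter lock it will not run faster than a plain loop; real workloads use a framework that maps the same decomposition onto accelerator hardware.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def row_times_matrix(row: list[float], b: Matrix) -> list[float]:
    # One output row depends only on one row of A and all of B,
    # so every row can be computed independently of the others.
    cols = len(b[0])
    return [sum(row[k] * b[k][j] for k in range(len(row))) for j in range(cols)]


def parallel_matmul(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Compute A @ B by handing each row of A to a separate worker."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so rows come back in the right place.
        return list(pool.map(lambda r: row_times_matrix(r, b), a))


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(parallel_matmul(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
```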

A robust AI infrastructure stack uses distributed file systems and parallel processing to manage large volumes of unstructured data and to speed up data ingestion, processing, and model training. Each component plays a distinct role in the AI workflow, from data ingestion and management to deploying AI models with deep learning frameworks and AI infrastructure tools.
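Distributed file systems and parallel pipelines typically split a dataset into shards so that several workers can ingest it at once. A minimal sketch, assuming files are assigned to workers by a stable hash of their path; the file paths and worker count are hypothetical, and production systems use their own placement and rebalancing schemes.

```python
import hashlib
from collections import defaultdict


def shard_for(path: str, num_workers: int) -> int:
    """Map a file path to a worker index using a stable hash.

    Python's built-in hash() is randomized per process for strings,
    so a cryptographic digest is used to get the same answer on every machine.
    """
    digest = hashlib.sha256(path.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


def assign_shards(paths: list[str], num_workers: int) -> dict[int, list[str]]:
    # Group paths by worker; each worker can then read its files in parallel with the others.
    shards: dict[int, list[str]] = defaultdict(list)
    for path in paths:
        shards[shard_for(path, num_workers)].append(path)
    return dict(shards)


if __name__ == "__main__":
    files = [f"raw/images/img_{i:04d}.jpg" for i in range(10)]  # hypothetical paths
    for worker, assigned in sorted(assign_shards(files, 3).items()):
        print(worker, len(assigned))
```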

Together, these hardware and software components form the foundation for advanced AI solutions, including generative AI applications running on AI accelerators. By integrating scalable storage and cloud infrastructure, organizations can scale resources dynamically to meet their AI ambitions while maintaining performance, flexibility, and security throughout the AI development lifecycle.

AI Development and Deployment: Key Components and How AI Infrastructure Works

AI development and deployment rely on a strong AI infrastructure stack composed of specialized hardware and software components designed to handle the unique demands of AI workloads. Central to this infrastructure are tensor processing units (TPUs) and graphics processing units (GPUs), which provide the parallel processing capabilities essential for efficient machine learning tasks and deep learning applications. Alongside these specialized hardware accelerators, robust operating systems and AI infrastructure tools enable seamless integration and management of AI workflows.

Training models efficiently and implementing complex algorithms are critical aspects of AI development. This work often involves large language models and foundation models that require substantial compute power and scalable storage. AI infrastructure makes it possible to train and deploy these models effectively, often using cloud infrastructure and services from providers such as Google Cloud, AWS, or Microsoft Azure. Cloud-based AI infrastructure offers flexibility, scalability, and high performance, allowing enterprises to allocate resources dynamically according to AI project demands.
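A quick back-of-the-envelope calculation shows why large models need so much memory. The sketch uses two common rules of thumb, stated here as assumptions: about 2 bytes per parameter to hold weights in 16-bit precision, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (16-bit weights and gradients plus 32-bit master weights and two 32-bit optimizer moments). Activations, caches, and framework overhead are ignored, so real requirements are higher, and the 7-billion-parameter size is only an illustrative example.

```python
# Rule-of-thumb bytes per parameter (assumptions; activations and overhead excluded).
BYTES_PER_PARAM = {
    "inference_fp16": 2,  # 16-bit weights only
    # fp16 weights + fp16 gradients + fp32 master weights + fp32 Adam m + fp32 Adam v
    "training_mixed_adam": 2 + 2 + 4 + 4 + 4,
}


def memory_gb(num_params: float, mode: str) -> float:
    """Approximate memory for the model state, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


if __name__ == "__main__":
    params = 7e9  # illustrative 7-billion-parameter model
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: ~{memory_gb(params, mode):.0f} GB")
```

For the example size this gives roughly 14 GB for inference weights and roughly 112 GB of training state, more than a single accelerator with, for example, 80 GB of memory can hold, which is why training is commonly spread across several devices.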

The AI infrastructure stack is at the heart of successful AI projects, supporting AI capabilities, AI research, and machine learning models. It enables organizations to deploy AI models securely and efficiently, with data protection and access controls in place. By integrating hardware and software components optimized for AI workloads, organizations can shorten AI development cycles and improve model performance, driving innovation across industries.

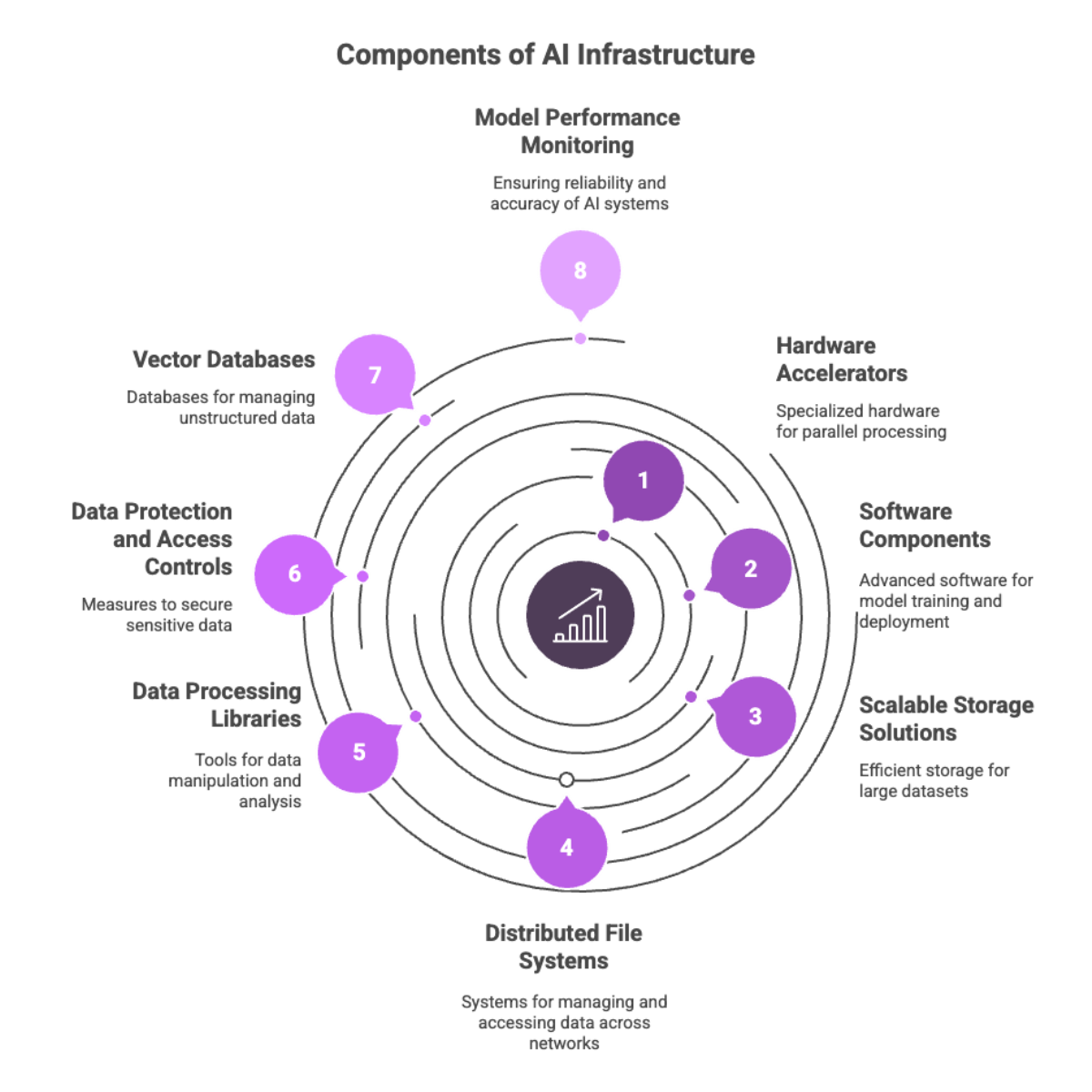

Understanding how AI infrastructure works is crucial for building strong AI solutions. It encompasses data ingestion, data management, model training, model deployment, and continuous monitoring of model performance. Each of these stages depends on the coordinated operation of key components within the AI infrastructure stack, including scalable storage solutions, distributed file systems, data processing libraries, and AI accelerators.
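The stages listed above can be sketched end to end with a deliberately tiny model. Everything here is hypothetical and stdlib-only: records are "ingested" from an in-memory list, rows with missing values are dropped as the data management step, a one-variable least-squares line stands in for model training, a closure stands in for deployment, and mean absolute error stands in for monitoring.

```python
from statistics import mean
from typing import Callable, Optional

Record = tuple[Optional[float], Optional[float]]


def ingest() -> list[Record]:
    # Stand-in for reading from storage; one record is deliberately incomplete.
    return [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (None, 5.0), (4.0, 7.8)]


def manage(records: list[Record]) -> list[tuple[float, float]]:
    # Data management step: drop rows with missing fields.
    return [(x, y) for x, y in records if x is not None and y is not None]


def train(data: list[tuple[float, float]]) -> tuple[float, float]:
    # Ordinary least squares for y = slope * x + intercept.
    xs = [x for x, _ in data]
    ys = [y for _, y in data]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct x values")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in data) / sxx
    return slope, y_bar - slope * x_bar


def deploy(params: tuple[float, float]) -> Callable[[float], float]:
    # "Deployment" here just wraps the parameters in a prediction function.
    slope, intercept = params
    return lambda x: slope * x + intercept


def monitor(model: Callable[[float], float], data: list[tuple[float, float]]) -> float:
    # Mean absolute error on observed data; a rising value signals degradation.
    return mean(abs(model(x) - y) for x, y in data)


if __name__ == "__main__":
    clean = manage(ingest())
    model = deploy(train(clean))
    print(f"prediction at x=5: {model(5.0):.2f}")
    print(f"monitoring MAE: {monitor(model, clean):.3f}")
```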

Together, these elements enable enterprises to run AI models and machine learning workloads with high efficiency and reliability, powering a wide range of AI applications from generative AI to computer vision.

Data Management in AI Infrastructure

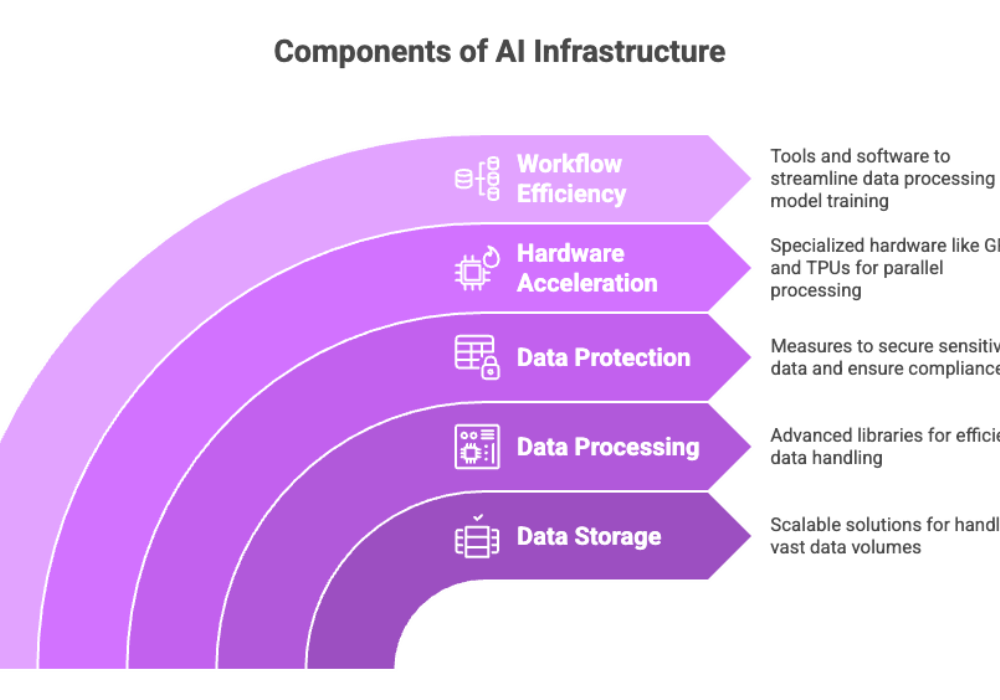

Data management is a critical pillar of AI infrastructure, encompassing data storage, data processing, and data protection. Effective data management relies on scalable storage and advanced data processing libraries that can securely handle vast volumes of unstructured and sensitive data. These capabilities are what allow AI infrastructure to meet the complex data demands inherent in AI projects.

AI infrastructure solutions must efficiently support data ingestion and data management workflows, leveraging distributed file systems and parallel processing capabilities to accelerate machine learning workloads. While traditional central processing units (CPUs) play a role, specialized hardware such as graphics processing units (GPUs) and tensor processing units (TPUs) provide the necessary parallel processing capabilities to manage compute-intensive AI tasks.

Strong AI infrastructure ensures robust data protection and enforces strict access controls, safeguarding sensitive data throughout AI workflows. This secure environment is essential for compliance with data protection regulations and maintaining trust in AI systems.
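Access controls are often implemented as role-based access control (RBAC): users are granted roles, and roles are granted permissions on resources. A minimal sketch, assuming hypothetical role names, users, and permission strings; a real deployment would use its platform's identity and access management service rather than an in-memory table. Unknown users and roles are denied by default.

```python
# Hypothetical role -> permissions mapping.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "data_scientist": {"dataset:read", "model:train"},
    "ml_engineer": {"dataset:read", "model:train", "model:deploy"},
    "auditor": {"audit_log:read"},
}

# Hypothetical user -> roles mapping.
USER_ROLES: dict[str, set[str]] = {
    "alice": {"data_scientist"},
    "bob": {"ml_engineer"},
    "carol": {"auditor"},
}


def is_allowed(user: str, permission: str) -> bool:
    """Return True only if one of the user's roles grants the permission (deny by default)."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


if __name__ == "__main__":
    print(is_allowed("alice", "model:deploy"))
    print(is_allowed("bob", "model:deploy"))
```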

By integrating infrastructure tools and software components optimized for AI workloads, organizations can streamline data processing and storage, enabling efficient model training and deployment. This comprehensive approach to data management supports the entire AI development lifecycle—from data ingestion and transformation to running machine learning models—empowering data science teams to innovate and deliver scalable AI solutions.

Deep Learning Applications and AI Infrastructure

Deep learning applications, including computer vision, natural language processing, and speech recognition, are heavily dependent on strong AI infrastructure. This infrastructure lies at the heart of enabling efficient model training and deployment, leveraging both specialized hardware and advanced software components. Key hardware accelerators such as graphics processing units (GPUs) and tensor processing units (TPUs) provide the parallel processing capabilities essential for handling the complex matrix and vector computations involved in deep learning tasks.

AI infrastructure solutions support the entire AI development lifecycle by integrating scalable storage solutions, distributed file systems, and data processing libraries. These components facilitate efficient data ingestion and data management, which are critical for training machine learning models and foundation models, including large language models. Additionally, AI infrastructure ensures robust data protection and access controls, safeguarding sensitive data throughout AI workflows.

Vector databases play a pivotal role in managing unstructured data. They store numerical representations (embeddings) of items such as documents and images and retrieve the most similar items securely and efficiently, which supports generative AI applications. Monitoring model performance is another crucial aspect, helping ensure that AI systems operate reliably and deliver accurate insights.
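At their core, vector databases answer nearest-neighbor queries: given the embedding of a query, return the stored items whose embeddings are most similar. The brute-force sketch below ranks items by cosine similarity. The three-dimensional vectors and document IDs are made up for illustration (real embeddings come from a model and have far more dimensions), and production systems typically use approximate nearest-neighbor indexes instead of scanning every vector.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorStore:
    """In-memory store with exact (brute-force) cosine-similarity search."""

    def __init__(self) -> None:
        self._items: dict[str, list[float]] = {}

    def upsert(self, item_id: str, vector: list[float]) -> None:
        # Insert a new vector or overwrite an existing one with the same ID.
        self._items[item_id] = vector

    def query(self, vector: list[float], k: int = 2) -> list[tuple[str, float]]:
        # Score every stored vector, then return the k most similar.
        scored = [(item_id, cosine(vector, v)) for item_id, v in self._items.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    store = TinyVectorStore()
    store.upsert("doc-gpu", [0.9, 0.1, 0.0])
    store.upsert("doc-storage", [0.1, 0.9, 0.1])
    store.upsert("doc-security", [0.0, 0.2, 0.9])
    print(store.query([0.8, 0.2, 0.0], k=1))
```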

By combining hardware and software components optimized for AI workloads, strong AI infrastructure empowers enterprises to build, deploy, and scale deep learning applications effectively. This infrastructure not only accelerates AI research and development but also drives innovation across diverse industries, helping organizations realize their AI ambitions with confidence and agility.

AI Infrastructure Tools and Technologies

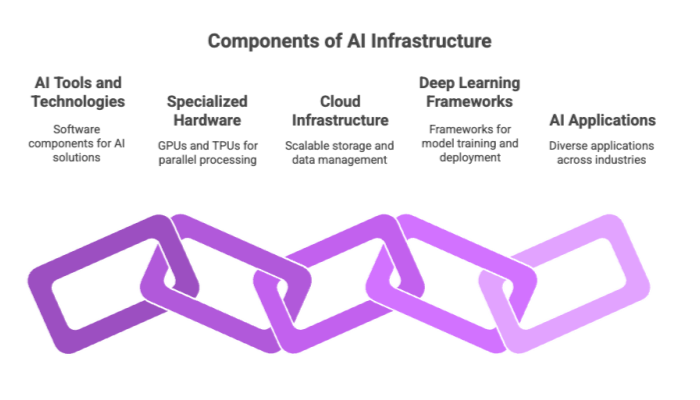

AI infrastructure tools and technologies are fundamental for building, deploying, and managing advanced AI solutions. These tools encompass a wide range of software components, including machine learning frameworks, data processing libraries, and orchestration platforms that streamline AI workflows and accelerate model training. Leveraging such tools enables organizations to implement complex algorithms and run AI tasks faster and more reliably.

The next-generation AI infrastructure stack lies at the core of these capabilities, combining specialized hardware—such as graphics processing units (GPUs) and tensor processing units (TPUs)—with scalable cloud-based AI infrastructure. This combination provides the parallel processing capabilities and flexible resource allocation necessary to support diverse AI initiatives and AI applications across industries.

Cloud infrastructure plays a pivotal role in modern AI infrastructure solutions, offering scalable storage solutions and distributed file systems that handle vast volumes of unstructured and sensitive data securely. Integration with existing systems ensures seamless data ingestion, management, and protection, adhering to strict access controls and data protection standards.

Strong AI infrastructure ensures efficient model training and deployment by utilizing deep learning frameworks and AI accelerators, facilitating the development and operationalization of large language models, foundation models, and generative AI applications. This robust infrastructure supports a broad spectrum of AI technology and AI systems, from chatbots and computer vision to autonomous vehicles, enabling enterprises to achieve their AI ambitions with reliability and high performance.

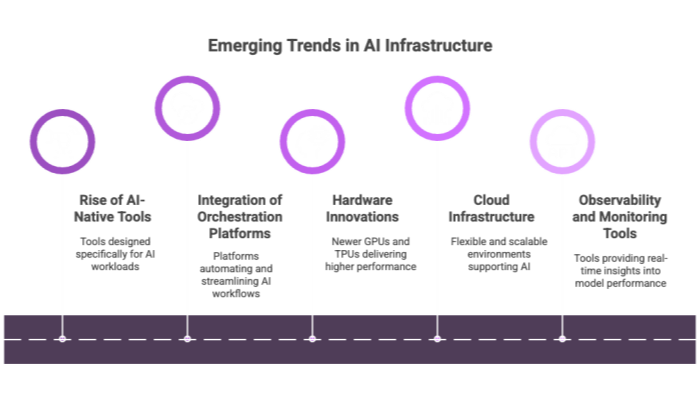

Emerging Trends in AI Infrastructure

As AI technology continues to evolve rapidly, several emerging trends are shaping the future of AI infrastructure. One significant development is the rise of AI-native infrastructure tools designed specifically to optimize AI workloads rather than adapting traditional IT systems. These tools focus on improving efficiency in model training, deployment, and inference, enabling organizations to handle increasingly complex AI models such as large language models and foundation models with greater ease.

Another key trend is the adoption of orchestration platforms that automate and streamline end-to-end AI workflows, the automated pipelines often referred to as AI factories. These platforms coordinate data ingestion, model training, deployment, and continuous monitoring, enabling faster iteration and shorter time-to-market for AI applications. Growing adoption of containerization and Kubernetes-based management further improves scalability and resource utilization.
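Kubernetes' Horizontal Pod Autoscaler shows how such platforms scale resources automatically: its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces that formula with a tolerance band and min/max bounds for a hypothetical inference service scaled on average GPU utilization. It is a simplification; the real controller also applies stabilization windows and other behaviors not modeled here, and the 0.1 tolerance mirrors the HPA's default but should be treated as an assumption.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """HPA-style rule: ceil(current_replicas * current_metric / target_metric), clamped."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # Hypothetical service: 4 replicas at 90% average GPU utilization, target 60%.
    print(desired_replicas(4, 90.0, 60.0))  # 6
```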

Hardware innovations are also driving next-generation AI infrastructure, with newer generations of graphics processing units (GPUs) and tensor processing units (TPUs) delivering higher performance and energy efficiency. Additionally, specialized AI accelerators and custom chips are emerging to address specific AI tasks, such as natural language processing and computer vision, providing tailored computational power.

Cloud infrastructure continues to play a pivotal role, offering flexible, scalable environments that support hybrid and multi-cloud strategies. This flexibility allows enterprises to balance data privacy and compliance requirements with the need for high-performance computing resources.

Lastly, AI infrastructure is increasingly incorporating observability and monitoring tools that provide real-time insights into model performance, detect data and model drift, and ensure compliance with security and data protection standards. This holistic approach to infrastructure management supports continuous improvement and reliability of AI systems.
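One common way to quantify data drift is the population stability index (PSI), which compares how a feature's values fall into bins at training time versus in production: PSI = Σ (actual share − expected share) × ln(actual share / expected share). A minimal sketch, assuming equal-width bins derived from the training sample and a small floor value so empty bins do not break the logarithm. The often-quoted alert level of about 0.2 is a rule of thumb rather than a standard, and the sample values are invented.

```python
import math


def bin_fractions(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values per bin; out-of-range values are clipped into the first or last bin."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        # Advance while the value lies at or beyond the next interior edge.
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    # Floor empty bins at eps so the logarithm in psi() is always defined.
    return [max(c / len(values), eps) for c in counts]


def psi(expected: list[float], actual: list[float], num_bins: int = 5) -> float:
    """Population stability index of `actual` against the `expected` (training) sample."""
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("expected sample needs more than one distinct value")
    width = (hi - lo) / num_bins
    edges = [lo + i * width for i in range(num_bins + 1)]
    e = bin_fractions(expected, edges)
    a = bin_fractions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]          # evenly spread over [0, 0.99]
    production = [0.5 + i / 200 for i in range(100)]  # shifted toward higher values
    print(f"PSI stable:  {psi(training, training):.3f}")
    print(f"PSI shifted: {psi(training, production):.3f}")
```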

By staying abreast of these emerging trends and integrating them into their AI infrastructure stack, organizations can maintain a competitive edge and fully leverage the transformative potential of artificial intelligence.

Conclusion

In summary, building and maintaining a strong AI infrastructure is essential for organizations aiming to harness the full potential of artificial intelligence and machine learning workloads. By integrating specialized hardware and software components, scalable storage solutions, and advanced data management practices, enterprises can ensure efficient model training, deployment, and monitoring of AI models. Incorporating natural language processing (NLP) techniques within this infrastructure further enhances AI capabilities, enabling more sophisticated applications such as conversational agents, sentiment analysis, and language generation.

As AI research advances and generative AI applications continue to evolve, a robust AI infrastructure stack that supports NLP and other AI tasks becomes a critical competitive advantage. Embracing cloud infrastructure, secure data protection measures, and seamless integration with existing systems empowers organizations to innovate rapidly while maintaining compliance and operational excellence.

Ultimately, a well-designed AI infrastructure lays the foundation for scalable, flexible, and high-performing AI solutions that drive business value across diverse industries.