Best Local LLM for Coding: Top Choices for Efficient Development

A capable AI assistant running on your own machine can make a real difference to day-to-day development. Local large language models (LLMs) for coding offer developers speed, privacy, and control, helping to streamline coding tasks and boost productivity without relying on the cloud. This article will help you identify the best model for your coding needs.

Key Takeaways

- Local LLMs can reduce costs compared to proprietary coding tools while keeping data secure by running on your own infrastructure.
- Key features of local LLMs for coding include offline operation, customizable models, support for multiple programming languages, and the ability to fine-tune for specific coding tasks.
- When choosing between open-source and closed-source LLMs, cost matters: open-source models are typically free and can be run locally to avoid ongoing cloud fees, while closed-source models often require subscriptions or cloud usage fees.
- Local models handle many languages and coding styles, offering intelligent code suggestions and assisting with debugging and complex coding tasks.
- The open source nature of local LLMs fosters a vibrant developer community that contributes continuous improvements, helping developers tackle coding challenges and improve code quality through fine-tuning and customization.

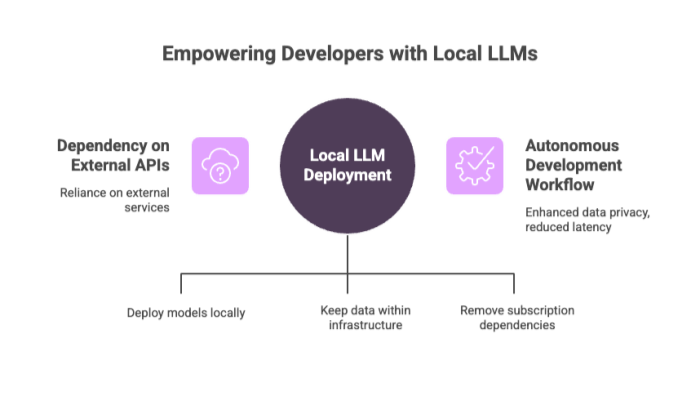

Introduction to Local LLMs

Local LLMs, or local large language models, are AI-powered tools that developers can run directly on their own local machines. These models eliminate the need for an internet connection or reliance on external APIs, enabling offline code generation with enhanced data privacy and reduced latency.

For developers seeking autonomy and tighter control over their development workflows, open source LLMs are a strong fit. These models can be deployed locally or, alternatively, through hosted providers, offering flexibility in how they are run. Running models on local machines helps ensure data security by keeping sensitive information within your own infrastructure. Open source LLMs can assist with a variety of coding tasks such as generating code, debugging, providing intelligent code suggestions, and completing programming assignments in multiple programming languages. They can also explain and discuss code and handle tasks across different domains, including web development. From Python and Java to SQL and JavaScript, local LLMs support a broad range of coding environments.

Because local deployment removes dependency on proprietary coding assistant subscriptions, developers benefit from cost savings, improved data privacy, and greater customization flexibility. Whether you’re building web applications, experimenting with machine learning, or working on natural language processing, local LLMs are becoming indispensable tools in the modern software development toolkit.

Benefits of Using Local Models

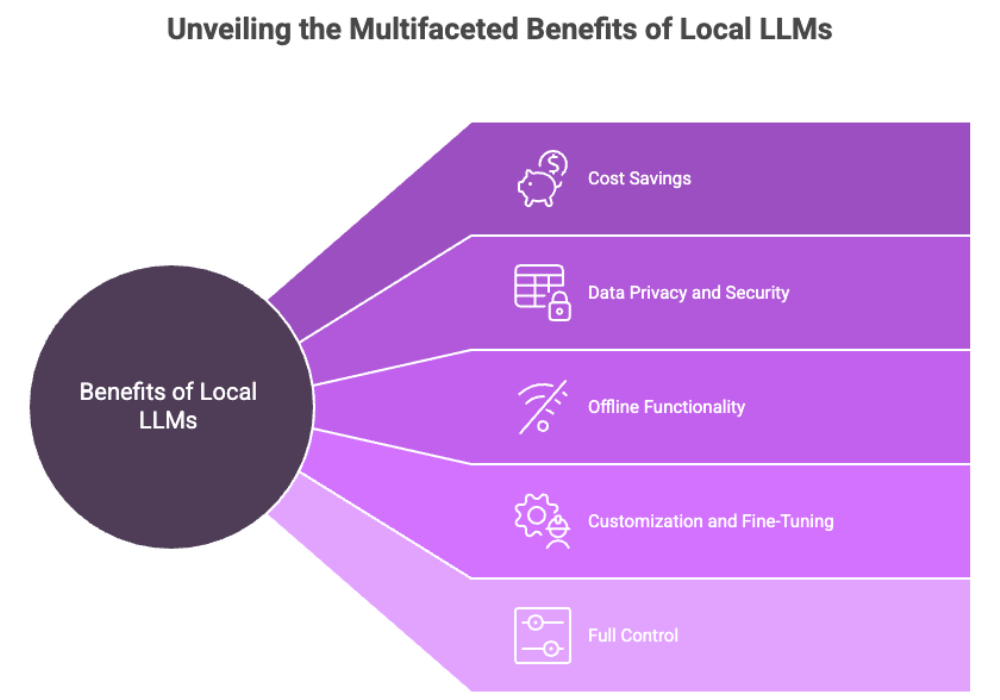

Running LLMs locally offers numerous benefits, especially for development teams and independent programmers looking to optimize both cost and performance:

- Cost Savings: By eliminating recurring fees associated with proprietary coding tools or cloud services, local LLMs deliver long-term financial benefits.
- Data Privacy and Security: All code processing remains on your local machine or secure infrastructure, which suits environments handling sensitive data.
- Offline Functionality: With no need for an internet connection, developers can work anytime, anywhere, which is ideal for remote work and travel.
- Customization and Fine-Tuning: Open-source LLMs can be fine-tuned to align with a developer’s specific coding style, organizational standards, or application domains, which helps reduce errors and improve code quality.
- Full Control: You get granular control over model parameters, training data, update cycles, and model behavior, enabling a tailored development experience.

While initial setup may involve hardware considerations and software configuration, these upfront efforts are balanced by sustained gains in autonomy, reliability, and performance. Local LLMs can also accelerate the development cycle by enabling rapid iteration, prompt updates, and efficient deployment within a prompt management system.

Code Generation and LLMs

At the heart of local LLM coding workflows is code generation—the ability to write, suggest, or complete blocks of code based on natural language prompts or partial source code. Evaluating a model's ability to generate and understand code is crucial for selecting the right tool for your development needs.

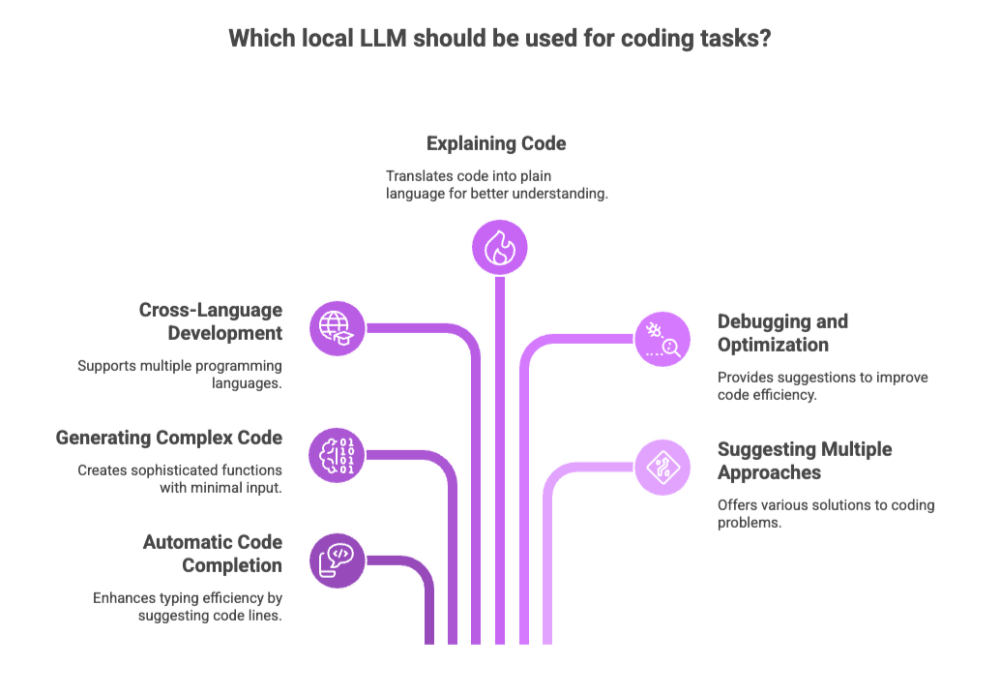

Some ways local LLMs assist in coding tasks include:

- Automatic Code Completion: Suggest the next line or block of code while you’re typing.
- Generating Complex Code: Write sophisticated functions, including complex SQL queries or machine learning models, with minimal human input.
- Cross-Language Development: Support for a wide range of programming languages like Python, C++, JavaScript, Java, and Go.
- Explaining Code: Translate complex or legacy code into plain language for better understanding.
- Debugging and Optimization: Provide suggestions to refactor or optimize inefficient or error-prone code.
- Suggesting Multiple Approaches: Propose multiple approaches to solving coding problems, giving developers a variety of solutions to consider.

There are various models available to suit different requirements, from lightweight options for personal projects to larger models designed for enterprise-scale deployments.

By running these AI models locally, developers avoid latency issues and retain full control over their source code and project history.

LLMs for Coding Tasks

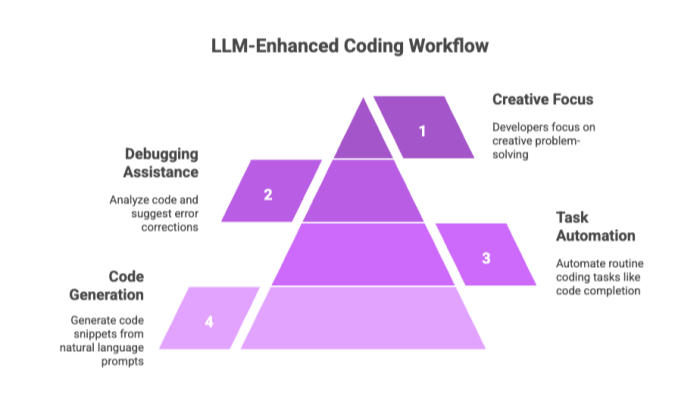

Large language models (LLMs) have transformed the way developers approach coding tasks by providing intelligent, context-aware assistance throughout the coding process. These models excel at code generation, enabling developers to generate code snippets or even entire functions from natural language prompts. For example, Code Llama and WizardCoder are adept at understanding various programming languages and coding standards, allowing them to produce high-quality, maintainable code that aligns with best practices.

Beyond generating code, LLMs can automate routine coding tasks such as code completion, where they suggest the next logical line or block of code, and code refactoring, which helps improve code readability and efficiency. When faced with complex tasks, such as integrating multiple APIs or handling intricate business logic, LLMs can break down the problem and generate structured solutions, saving developers valuable time.

Debugging is another area where LLMs shine. By analyzing code and identifying potential errors, these models can suggest corrections and optimizations, streamlining the debugging process and reducing the likelihood of bugs making it into production. As a result, developers can focus more on creative aspects and problem-solving, while LLMs handle repetitive or time-consuming coding tasks. This synergy between human expertise and AI-driven code generation is reshaping modern software development workflows.

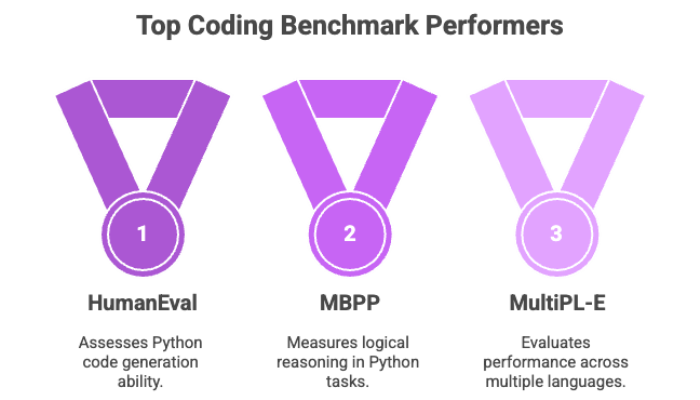

Key Coding Benchmarks

To determine the best local LLM for coding, it’s essential to evaluate model performance through coding benchmarks. These tests measure how well a model can solve programming problems across various complexity levels and languages. Coding benchmarks are also crucial for identifying the top models for coding tasks, helping users choose the most effective solutions for their needs.

Notable Coding Benchmarks:

- HumanEval: Developed by OpenAI, this benchmark assesses the ability of LLMs to generate functional Python code for 164 hand-written problems, each checked against unit tests.
- MBPP (Mostly Basic Python Problems): Measures the model’s ability to complete short programming tasks that require logical reasoning and syntactic correctness.
- MultiPL-E: Evaluates performance across multiple programming languages by translating HumanEval and MBPP problems into them.
- CodeXGLUE: Tests code completion, code translation, and summarization abilities of language models.
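HumanEval results are usually reported as pass@k: the chance that at least one of k sampled completions passes the unit tests. A minimal sketch of the unbiased estimator described in the HumanEval paper, assuming n completions were sampled for a problem and c of them passed (the function name is illustrative):

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem as 1 - C(n - c, k) / C(n, k)."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # math.comb returns 0 when k > n - c, so the estimate is 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

A benchmark score is then the average of this value over all problems. With 10 samples and 3 passing, `pass_at_k(10, 3, 1)` is about 0.3.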

Key factors influencing benchmark results include:

- Context Window Size: Determines how much context (previous code or instructions) the model can take into account during generation.
- Fine-Tuning Quality: Fine-tuned models often outperform their base counterparts on the specialized tasks they were tuned for.
- Model Parameters: Larger models with more parameters often perform better, but they require more hardware resources.
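The hardware cost of a larger model can be estimated before downloading it. A rough sketch, assuming the memory for weights is approximately parameter count times bits per weight, and ignoring the KV cache and runtime overhead, which add more on top (the function name and the 7B example are illustrative):

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Compare common precisions for a hypothetical 7-billion-parameter model.
for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, a 7B model needs roughly 14 GB at 16-bit precision and roughly 3.5 GB when quantized to 4 bits, which is why quantized formats are popular on consumer hardware.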

When assessing which LLM to use, look for benchmark scores that align with your specific development needs.

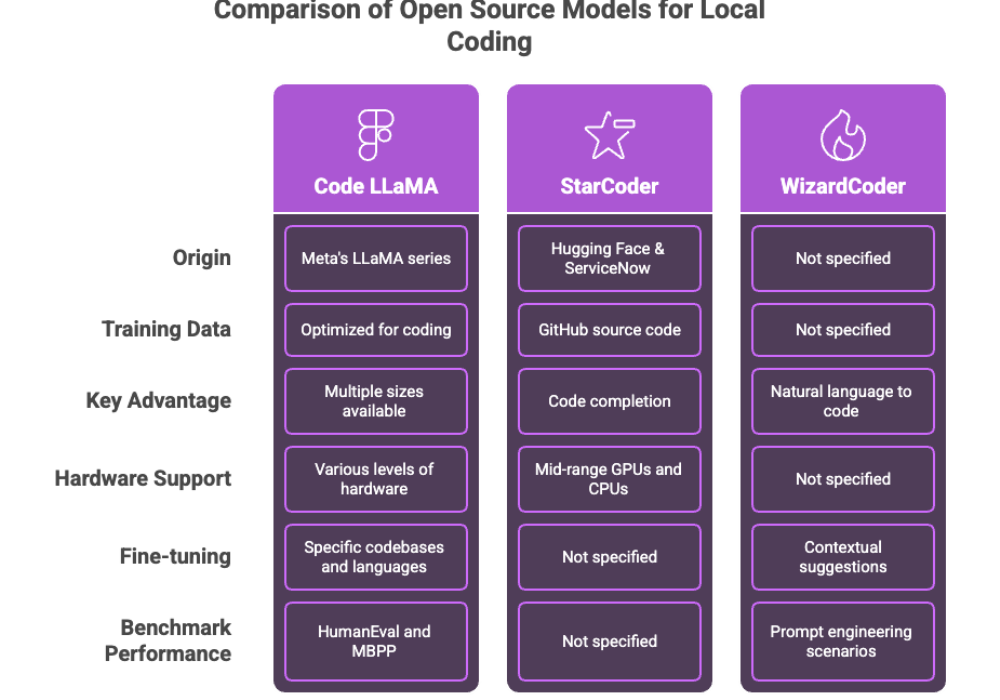

Code LLaMA and Other Options

Several open source models have emerged as top performers for local coding use cases, offering transparency and flexibility. Each comes with distinct strengths and customization opportunities, and organizations can fine tune these models to better align with their specific coding standards and domain requirements. Running these models locally can also deliver low latency, which is crucial for real-time coding tasks and efficient workflows.

1. Code LLaMA

- Created by Meta and built on Llama 2, it is specialized for code.
- Available in multiple sizes, from 7B to 70B parameters, to fit a range of hardware.
- Performs well in benchmarks like HumanEval and MBPP.
- Supports fine-tuning for specific codebases and languages.

2. StarCoder

- Developed by the BigCode project, a collaboration led by Hugging Face and ServiceNow.
- Trained on a large corpus of permissively licensed source code from GitHub.
- Excels in code completion and context-aware generation.
- Quantized variants can run on mid-range GPUs; CPU inference is possible but slower.

3. WizardCoder

- Known for its instruction tuning (using the Evol-Instruct method), which enables natural-language-to-code generation.
- Particularly strong when given detailed natural language instructions.
- Can be combined with retrieval-augmented generation for contextual suggestions.

These models can be downloaded and run locally using tools like LM Studio, Ollama, or command-line utilities that support model formats such as GGUF. Thanks to their open-source nature, these models can be audited, fine-tuned, and integrated into IDEs or coding platforms.
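Once a model is served locally, it can be called from scripts as well as from a chat window. A minimal sketch, assuming an Ollama server is running on its default port (11434) and that a model tagged `codellama` has already been pulled; the model tag and the helper names are assumptions for illustration:

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "codellama") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codellama") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]


# Example call, to be run only when the server is up:
# print(generate("Write a Python function that reverses a string."))
```

Because the request never leaves `localhost`, the prompt and any source code in it stay on the machine.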

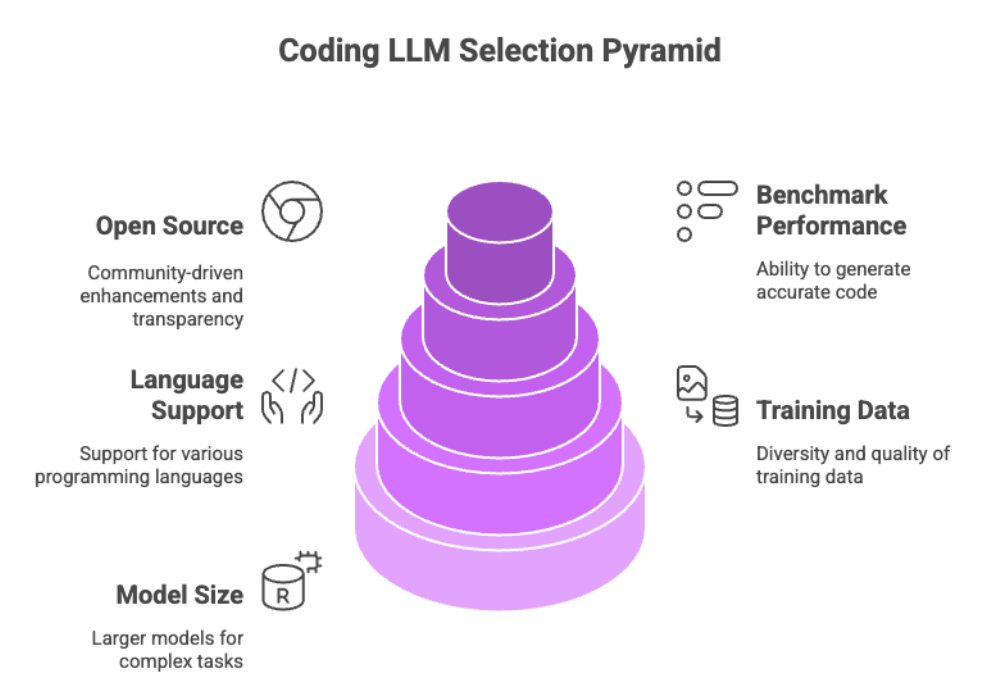

Coding LLMs Comparison

Choosing the right coding LLM involves evaluating several key factors to ensure the model aligns with your specific development needs. The size of the model, the diversity and quality of its training data, and its support for various programming languages all play a crucial role in determining its effectiveness for different coding tasks. Large proprietary models, such as Gemini 2.5 Pro, often deliver superior performance on complex coding tasks due to their extensive training and greater capacity, but they are available only as hosted services and cannot be run locally. Large open-weight models can narrow that gap, but they demand more powerful hardware and resources.

In contrast, smaller models, such as the distilled variants of DeepSeek R1, offer a more accessible entry point for developers with limited computational resources, providing a balance between performance and efficiency (the full DeepSeek R1 model is far too large for typical consumer hardware). The open source nature of models like StarCoder is another important consideration, as it allows for community-driven enhancements, customization, and transparency, benefits that proprietary models may not offer.

When comparing coding LLMs, it’s essential to look at key coding benchmarks such as HumanEval and MBPP. These benchmarks assess a model’s ability to generate accurate, functional code and handle a variety of coding tasks across different programming languages. By considering these factors—model size, training data, programming language support, and benchmark performance—developers can make informed decisions about which coding LLM best fits their workflow, whether they prioritize handling complex coding tasks or need a lightweight solution for everyday development.

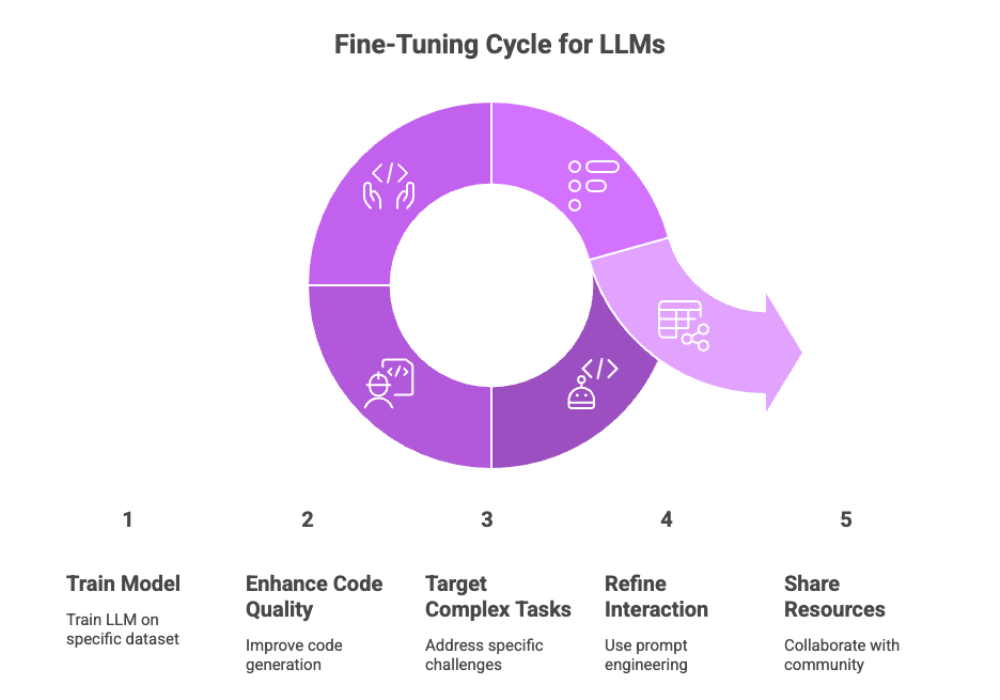

Fine-Tuning LLMs for Coding

Fine-tuning is a powerful technique that allows developers to adapt LLMs to their unique coding requirements, resulting in improved code quality and more relevant code generation. By training a model on a specific dataset—such as a repository of Python code or code adhering to particular coding standards—developers can enhance the model’s ability to generate code that matches their preferred style and project needs. This process is especially valuable for organizations with established coding standards or those working with niche programming languages.
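Preparing such a dataset often starts with collecting source files into a simple training format. A minimal sketch, assuming a JSONL file with one record per Python file; the `path` and `text` field names and the size limit are hypothetical, and the format your fine-tuning tool expects may differ:

```python
import json
from pathlib import Path


def build_dataset(repo_dir: str, out_file: str, max_chars: int = 20_000) -> int:
    """Write one JSONL record per .py file under repo_dir; return the record count."""
    root = Path(repo_dir)
    count = 0
    with open(out_file, "w", encoding="utf-8") as out:
        for path in sorted(root.rglob("*.py")):
            text = path.read_text(encoding="utf-8", errors="ignore")
            if not text.strip() or len(text) > max_chars:
                continue  # skip empty files and very large (often generated) files
            record = {"path": str(path.relative_to(root)), "text": text}
            out.write(json.dumps(record) + "\n")
            count += 1
    return count
```

Filtering at this stage matters: the model will imitate whatever is in the file, so excluding generated or low-quality code is part of enforcing the coding standard.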

Fine-tuning can also target complex tasks, such as advanced debugging or code refactoring, making the model more adept at handling the specific challenges faced in a given development environment. Techniques like prompt engineering further refine the interaction between developers and LLMs, enabling more precise and context-aware code generation.

The open source community plays a pivotal role in advancing fine-tuning strategies. By sharing datasets, best practices, and fine-tuned models, the developer community accelerates the improvement of coding LLMs for a wide range of programming languages and coding tasks. This collaborative approach not only leads to more effective and efficient fine-tuning but also democratizes access to high-quality AI tools, empowering developers everywhere to enhance their coding process and reduce errors in generated code.

Choosing the Best LLM for Coding

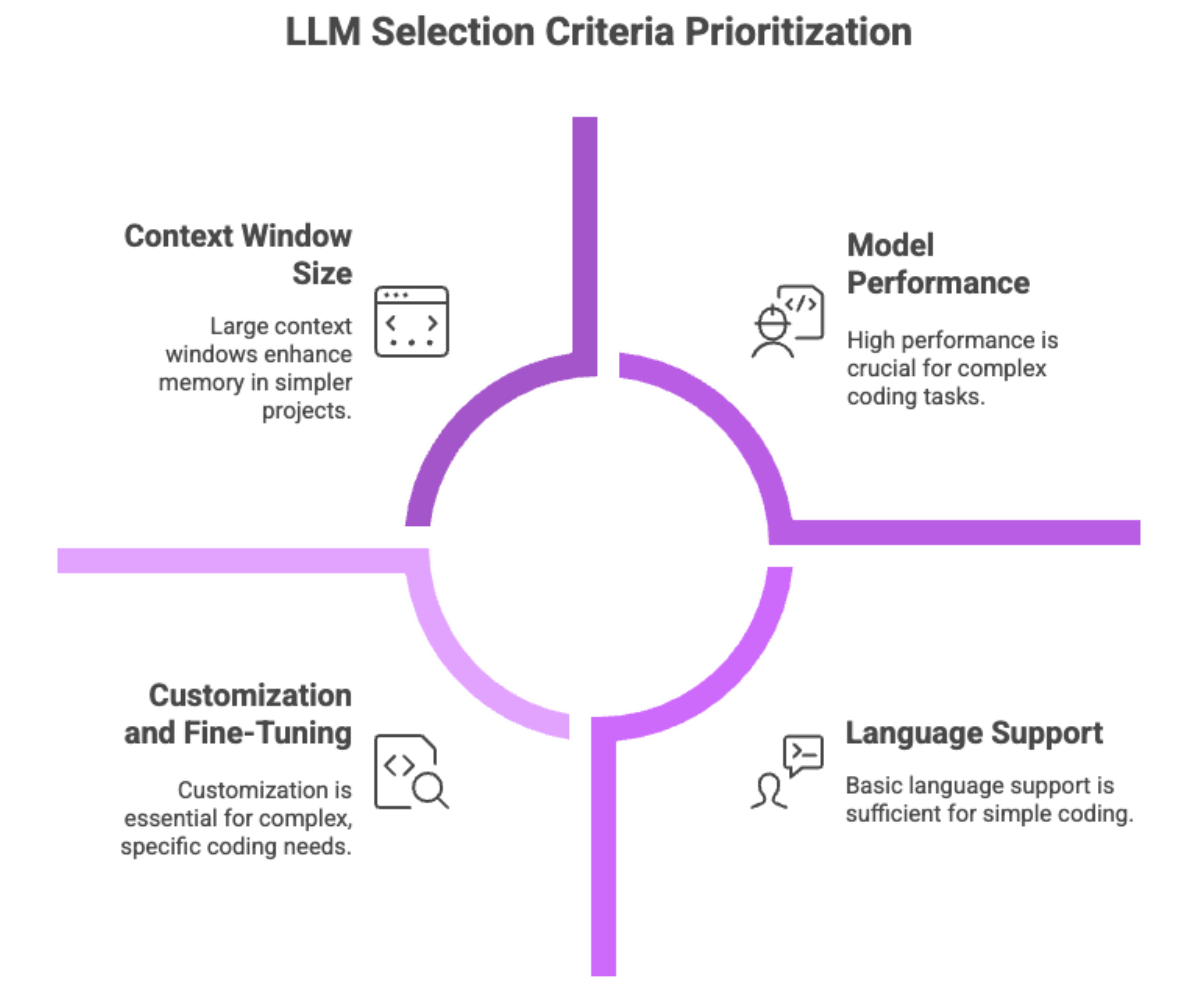

Selecting the best local LLM for coding depends on multiple technical and practical considerations. Developers and teams should assess the following criteria when evaluating options:

- Model Performance: Choose LLMs that perform well on benchmarks like HumanEval and MBPP. These indicators reflect how effectively a model can generate code, solve problems, and support development workflows.
- Context Window Size: The larger the context window, the more prior code or instructions the model can take into account, which is especially useful in large files or complex tasks.
- Language and Environment Support: Ensure compatibility with the programming languages you use, such as Python, Java, JavaScript, C++, and domain-specific languages.
- Customization and Fine-Tuning: Look for models that support fine-tuning, allowing you to align model outputs with specific coding styles, project requirements, or regulatory standards.
- Update Cycle and Maintenance: Choose models and tools that are actively maintained, patched, and improved by either an open source community or dedicated development teams.
- Cost and Licensing: Evaluate open-source licensing terms to ensure compliance, especially for commercial or enterprise use. Many popular local LLMs are free for personal or research use, but commercial applications may require attribution or specific licenses.

By combining these metrics, you can confidently identify the local model that best aligns with your technical requirements and long-term project goals.
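One way to combine these criteria is a simple weighted score. A minimal sketch: the weights, the candidate names, and the 0-to-1 ratings below are placeholders rather than measurements, and should be replaced with your own benchmark results and priorities:

```python
# Placeholder weights; adjust them to reflect what matters for your project.
WEIGHTS = {"benchmark": 0.4, "context": 0.2, "languages": 0.2, "license": 0.2}


def weighted_score(ratings: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of per-criterion ratings (each in [0, 1])."""
    total = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total


# Hypothetical candidates with made-up ratings, for illustration only.
candidates = {
    "model_a": {"benchmark": 0.8, "context": 0.5, "languages": 0.9, "license": 1.0},
    "model_b": {"benchmark": 0.6, "context": 0.9, "languages": 0.7, "license": 0.5},
}
ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
print(ranked)
```

A score like this is only a starting point for discussion; a hard requirement such as a license that forbids commercial use should rule a model out regardless of its total.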

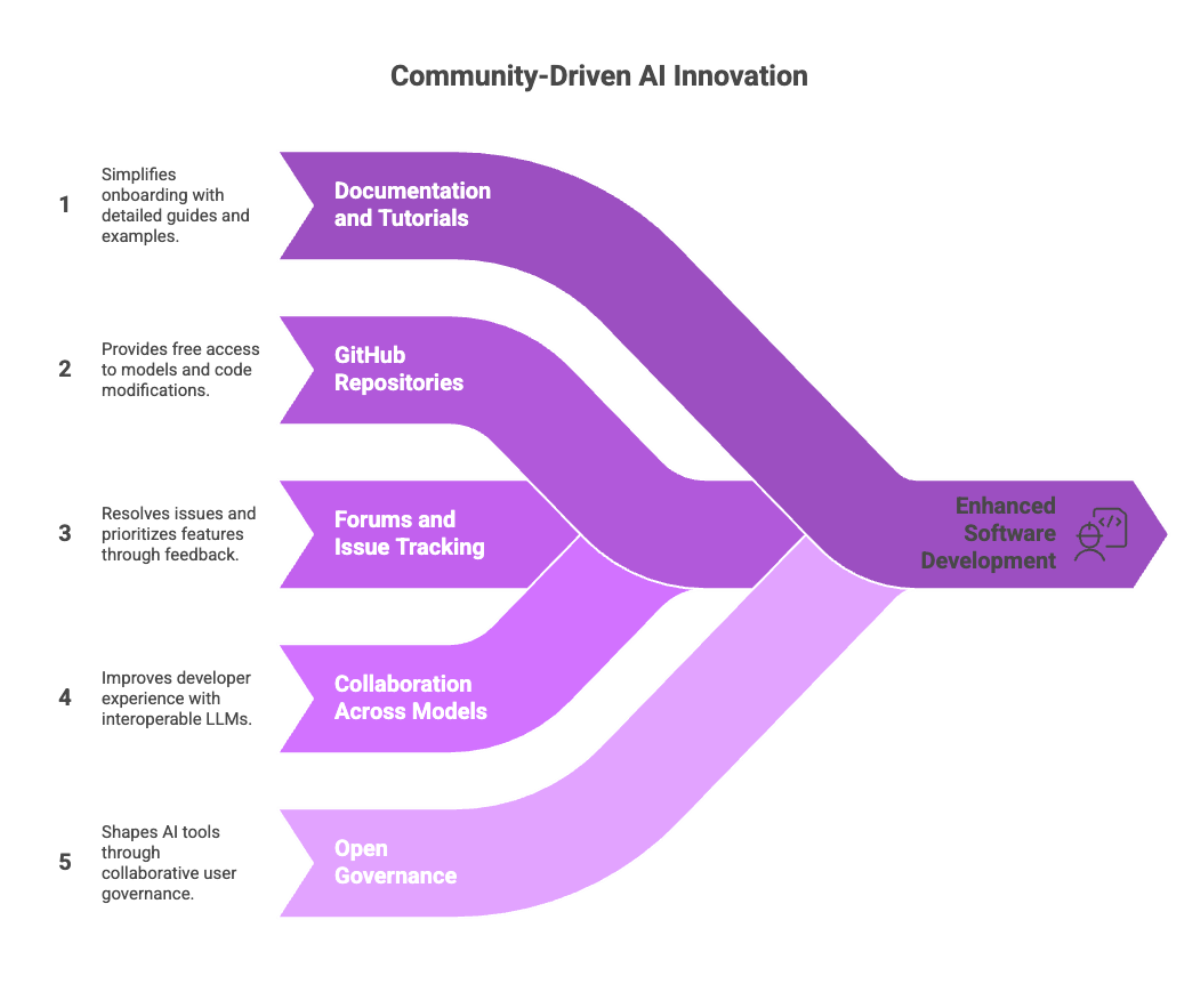

Open Source Coding LLMs Community

One of the strongest drivers of innovation in this space is the open source coding LLMs community. Active, collaborative, and developer-friendly, this community fuels rapid improvements in model performance, tools, and integrations.

What Makes the Community Valuable?

- Documentation and Tutorials: Most major projects include detailed setup guides, examples, and community-written enhancements that make onboarding easier.
- GitHub Repositories and Discussions: Model weights, pre-trained versions, and code for running local LLMs can be downloaded, forked, and modified, subject to each project's license.
- Forums and Issue Tracking: Fast feedback loops help resolve issues and prioritize features.
- Collaboration Across Models: Interoperability among LLMs (e.g., Code LLaMA and StarCoder) through shared tools and standards like the GGUF format improves the developer experience.
- Open Governance: Many projects encourage pull requests and collaborative governance, letting users shape the direction of AI tools they rely on.

Being part of this ecosystem not only boosts your software development capabilities but also helps democratize AI innovation, especially in regions or industries where proprietary tools are cost-prohibitive.

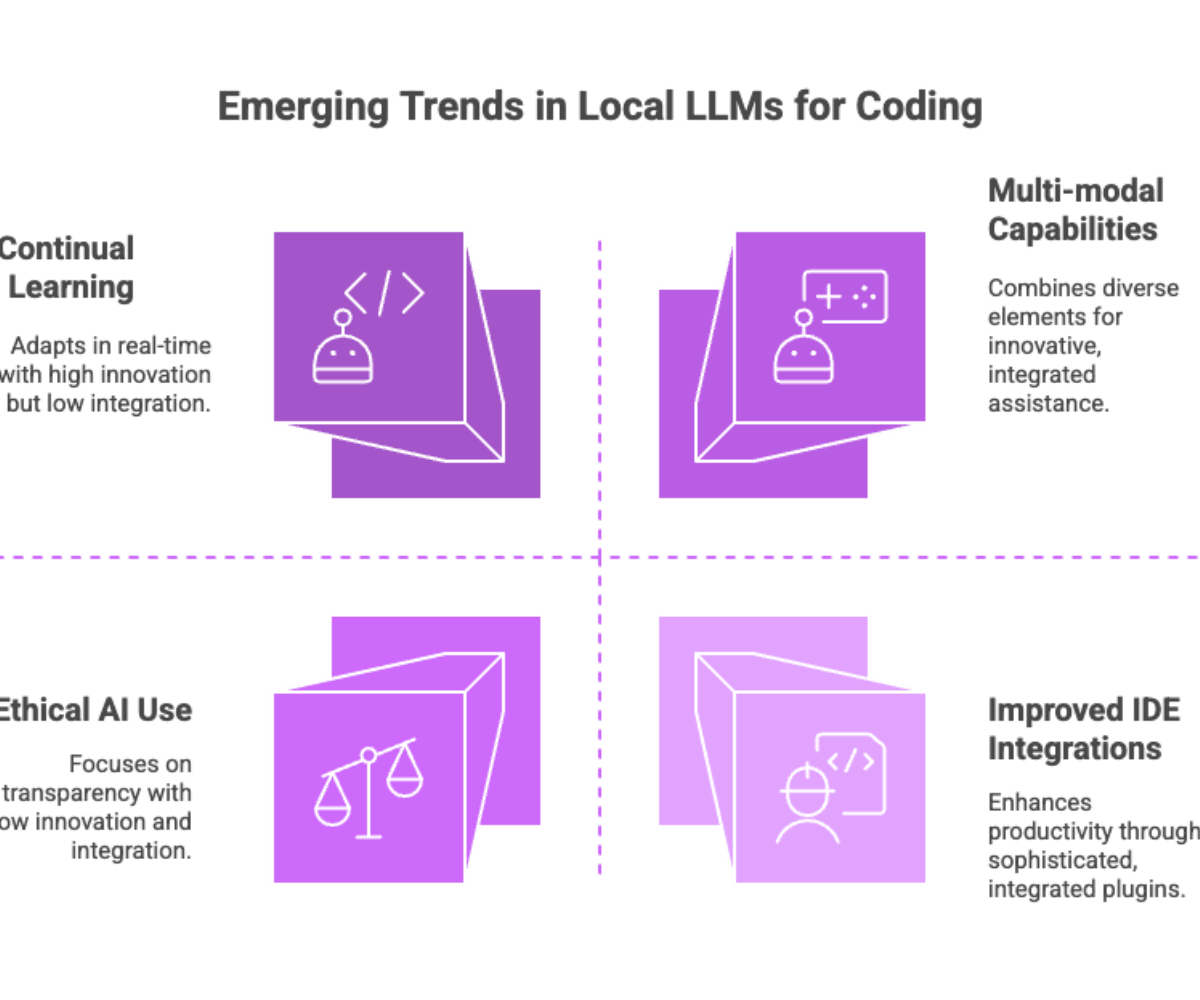

Future Directions for Coding LLMs

The trajectory of local LLMs for coding is advancing quickly, with several emerging trends worth watching:

- Multi-modal Capabilities: Future models may combine text, code, and visual elements (like diagrams or UIs) to offer richer assistance.
- Improved IDE Integrations: Plugins for tools like Visual Studio Code and JetBrains IDEs are becoming more sophisticated, enabling seamless interaction with local models.
- Hybrid Models: Combining small, efficient local LLMs with optional cloud augmentation can provide the best of both worlds: privacy with scalability.
- Continual Learning: Models that evolve based on local codebases (without retraining from scratch) are on the horizon, allowing real-time adaptation.
- Ethical AI Use: As models take on more coding responsibility, transparency and bias mitigation in auto-generated code will grow in importance.
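The hybrid approach in the list above can be pictured as a small routing rule that prefers the local model and falls back to the cloud only when allowed. A minimal sketch, assuming a hypothetical 4096-token local context window and a rough four-characters-per-token estimate; the function name and thresholds are illustrative:

```python
def choose_backend(prompt: str, contains_sensitive_code: bool,
                   local_context_tokens: int = 4096) -> str:
    """Return "local" or "cloud" for a request, preferring local for privacy."""
    # Rough heuristic (an assumption): about 4 characters per token.
    estimated_tokens = len(prompt) // 4
    if contains_sensitive_code:
        return "local"  # never send sensitive code off the machine
    if estimated_tokens > local_context_tokens:
        return "cloud"  # prompt likely exceeds the local model's context window
    return "local"
```

Checking the sensitivity flag first means privacy always wins over capacity, which matches the reason most teams run models locally to begin with.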

These developments will continue to improve how developers interact with AI tools, boosting productivity while ensuring greater trust and control over the codebase.

Conclusion

The best local LLMs for coding give developers powerful, flexible, and private tools for accelerating the software development process. Whether you're building web apps, working in data science, or contributing to open-source libraries, these models deliver intelligent code suggestions, bug fixes, and even entire modules—without reliance on third-party providers.

By leveraging open source LLMs such as Code LLaMA, StarCoder, and WizardCoder, and deploying them through tools like LM Studio or Ollama, developers gain:

- Elimination of subscription fees
- Improved performance through fine-tuning
- Secure workflows with enhanced data privacy

As the open-source community continues to innovate and share resources, the capabilities of local LLMs will only grow—empowering more developers around the world to code smarter, faster, and safer.