Best Local LLM for Python Coding: Top Picks and Practical Insights

This article compares the best local LLMs for Python coding available in 2025 to help you identify the top-performing model for your needs. We focus on open source models that excel at code generation and complex tasks.

We cover key features of various models, share insights on prompt engineering, and highlight how these AI assistants improve developer productivity while offering cost savings and full control over data privacy. Whether you’re interested in fine-tuning, low latency performance, or integrating with your existing workflow, this guide provides a hands-on experience to help you choose the best model for your coding needs.

Key Takeaways

Local LLMs pair open source availability and full control over source code with the ability to handle complex coding tasks, giving developers a capable AI assistant for writing code and complex SQL queries without an API call to external servers.

Choosing the best local LLM for Python coding involves evaluating code generation benchmarks and weighing factors such as usage costs, context window size, and support for different LLMs to balance performance and cost-efficiency.

The rise of open source LLMs provides a cost-effective alternative to closed source models: developers can use models trained on vast amounts of data and customize them to their specific needs, reducing cost concerns while maintaining data privacy and improving LLM interactions.

Effective tooling is essential for AI-powered development workflows, enabling seamless integration with IDEs, code repositories, and automation scripts. This supports tasks like testing, debugging, environment management, and integrating AI agents into development pipelines to enhance productivity and code quality.

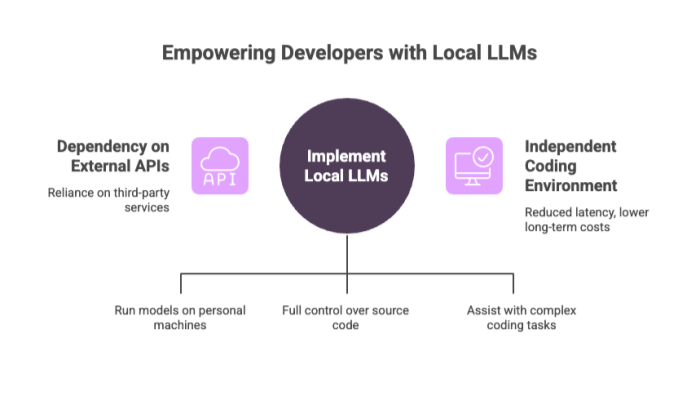

Introduction to Local LLMs

Local Large Language Models (LLMs) are reshaping the way developers approach coding. By running AI models directly on personal machines, local LLMs eliminate the need for constant internet connections or reliance on third-party APIs. This results in reduced latency, stronger data privacy, and significantly lower long-term costs.

For developers working with sensitive codebases or proprietary systems, local LLMs provide full control over source code and data handling. They’re especially valuable in industries like cybersecurity, healthcare, and finance—where data privacy and regulatory compliance are non-negotiable. The effectiveness of local LLMs also depends on the underlying system, including both hardware and software components, which support performance, security, and functionality.

Local LLMs aren’t just about data safety. These models support various programming languages, offer real-time code generation, assist with complex coding tasks, and improve development efficiency. Developers can use them for tasks such as writing Python scripts, generating boilerplate code, and performing code completion directly on their local machine.

Benefits of Local LLMs

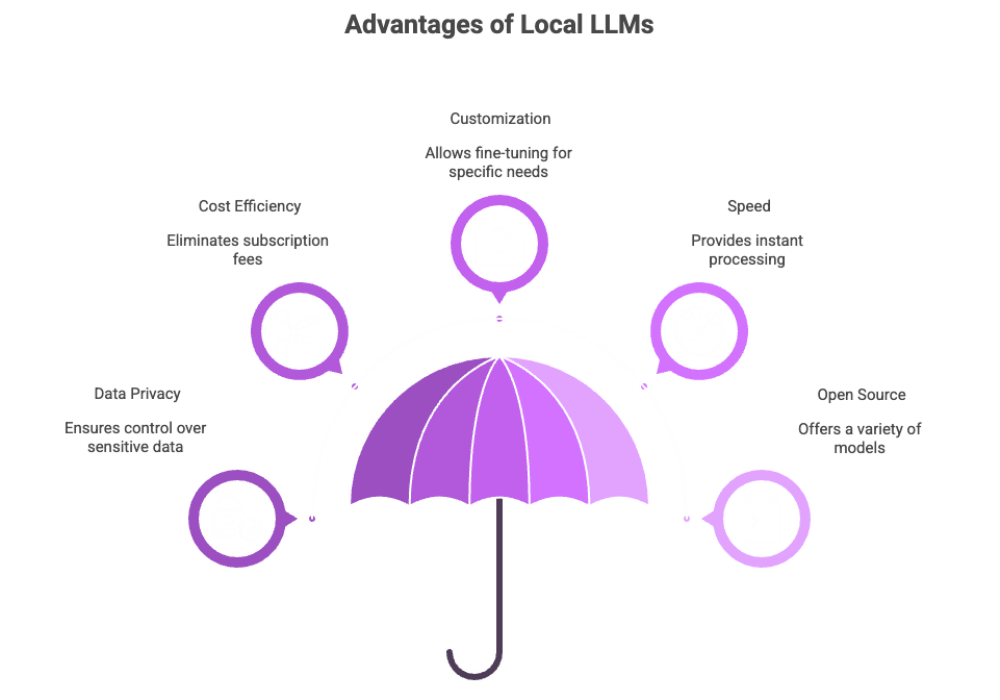

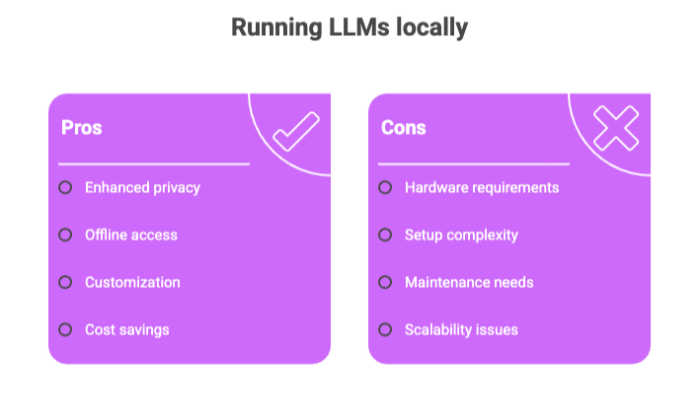

Local LLMs bring a host of advantages to developers tackling coding tasks, especially when it comes to data privacy, cost efficiency, and customization. By running open source coding LLMs directly on their own machines, developers gain full control over their code and sensitive data, eliminating the risks associated with sending information to external servers or relying on commercial LLMs. This local approach not only safeguards intellectual property but also slashes usage costs, as there are no recurring subscription fees or pay-per-use charges.

Another key benefit is responsiveness. Local models avoid the network delay of an API call or cloud round-trip, which suits rapid code completion and iterative development, although generation speed still depends on model size and local hardware. Developers can also fine-tune these models for specific programming languages or project requirements, resulting in more accurate and context-aware code suggestions.

The open source nature of local LLMs means there’s a wide variety of models to choose from, each with unique key features and compatibility with different programming languages. This flexibility empowers developers to select or create the best coding LLM for their workflow, ensuring efficient, secure, and cost-effective coding.

Choosing the Best LLM

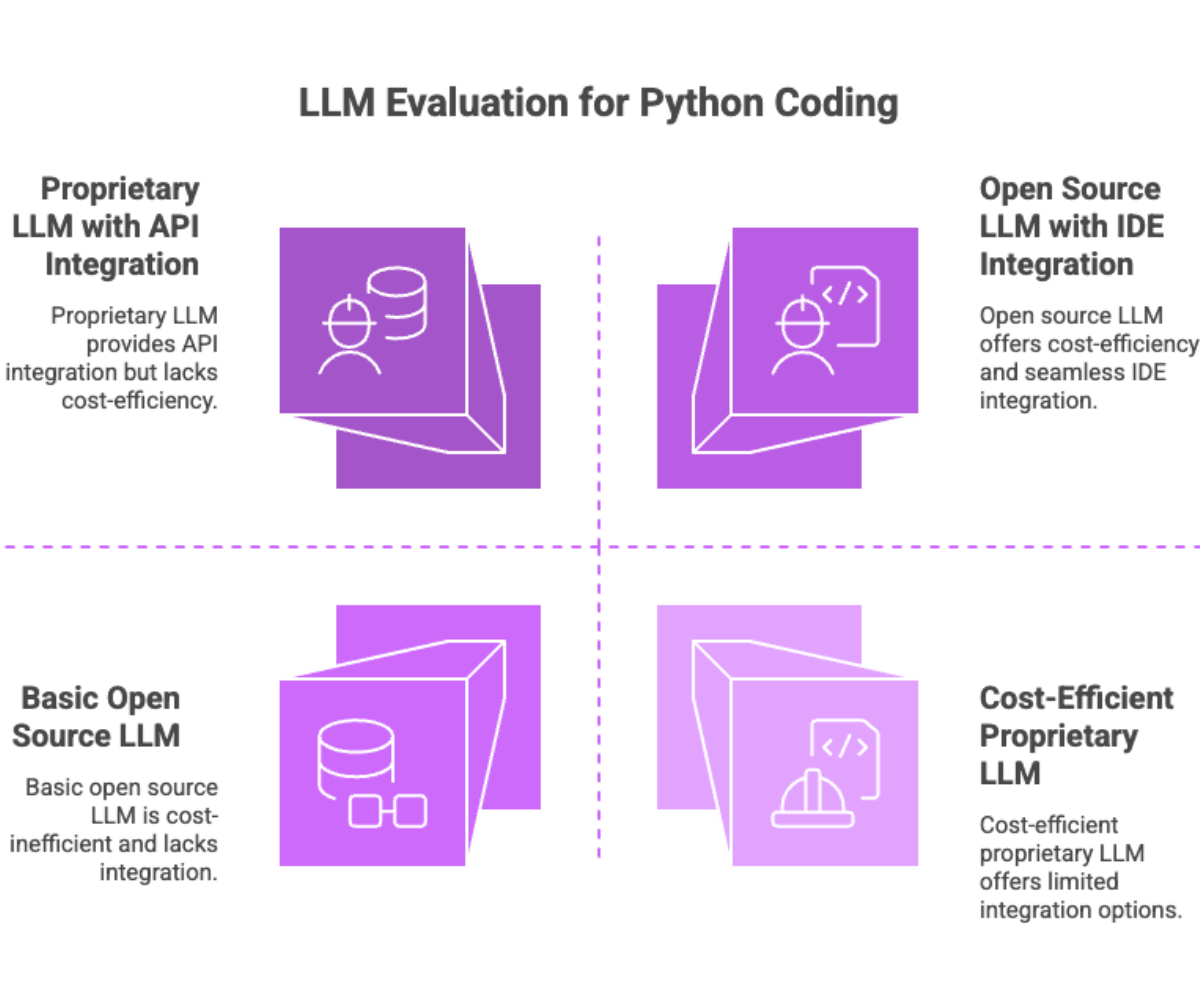

Several factors influence the choice of the best LLM for Python coding. Comparing top models on both benchmarks and practical considerations shows how well each one performs specific coding tasks, especially in high-pressure or production-grade environments.

Considerations for Selection:

Key coding benchmarks like HumanEval and MBPP help evaluate model proficiency in generating correct, testable code.

The context window size determines how much code or prompt history the model can remember at once—a critical factor in generating consistent logic in longer scripts.

Support for multiple programming languages, especially Python, JavaScript, and C++, expands usability.

Models that allow for fine-tuning enable developers to adapt LLMs to specialized tasks or proprietary coding standards.
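To make the context window consideration above concrete, here is a minimal sketch of a pre-flight check that estimates whether a prompt leaves room for the model's reply. The four-characters-per-token ratio and the 512-token output reserve are rough assumptions, not properties of any particular model; real counts depend on the model's tokenizer.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the ratio is a heuristic, not a tokenizer."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(prompt: str, context_window: int,
                    reserved_for_output: int = 512) -> bool:
    """Return True if the prompt plus the reserved reply budget fits the window."""
    return estimate_tokens(prompt) + reserved_for_output <= context_window
```

A check like this is mainly useful for deciding when to trim or summarize source files before sending them to a model with a small window.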

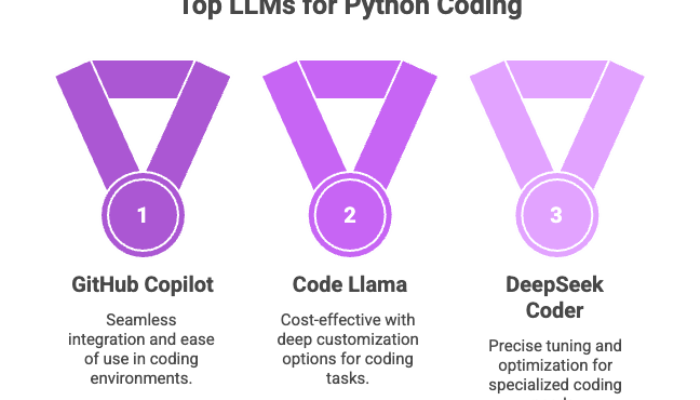

Open-source coding LLMs such as Code Llama and DeepSeek Coder provide a cost-effective foundation for developers who prioritize customization. These models give direct access to model weights, enabling more precise tuning and optimization; the original training data is typically not published alongside them.

On the other hand, commercial options like GitHub Copilot offer seamless integration with Visual Studio Code and other editors, but raise data privacy and cost concerns because they depend on cloud infrastructure.

Local LLM Options

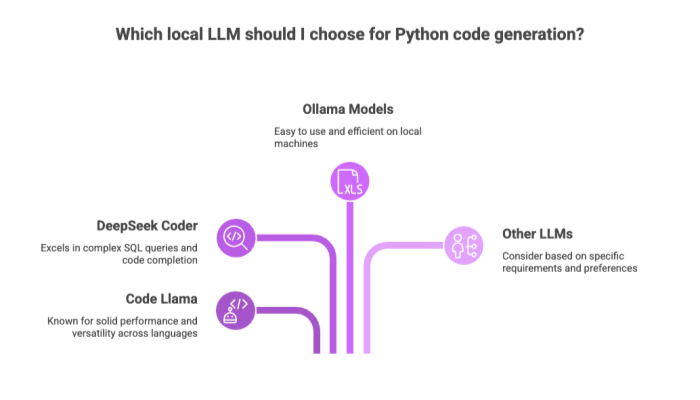

The landscape of local models is quickly evolving, with powerful new options available to developers who prefer running LLMs offline. Among the leading models for Python code generation are:

Code Llama: Known for its solid performance on Python benchmarks and ability to generate code across various programming languages.

DeepSeek Coder: Designed specifically for developers, this model supports a broad context window and excels at tasks such as complex SQL queries and code completion.

Ollama Models: Ollama is a runtime rather than a single model. It packages open models, including Code Llama and DeepSeek Coder, so they install with minimal setup and run efficiently on local machines, especially on macOS and Linux.

Each of these models serves as a valuable tool for developers seeking to enhance their coding workflows.

Local LLMs can be integrated with existing tools and workflows such as Jupyter Notebooks, VS Code, and custom IDEs through API endpoints or command-line interfaces. They allow for running models locally, removing the need to send code to a server for interpretation or analysis. Choosing an open source model for coding tasks offers advantages like greater controllability, offline capabilities, and improved privacy.
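As one illustration of integration through an API endpoint, the sketch below sends a prompt to a locally running Ollama server over HTTP using only the standard library. It assumes Ollama is listening on its default port (11434) and that a model named `codellama` has already been pulled; the helper names `build_payload` and `generate` are hypothetical.

```python
import json
import urllib.request

# Default local endpoint for Ollama's generate API (assumes a default install).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "codellama") -> dict:
    """Build a non-streaming generate request body."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = "codellama",
             url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]


# Example (requires a running Ollama server with the model pulled):
# print(generate("Write a Python function that reverses a string."))
```

Because the request never leaves `localhost`, the same function can be called from a Jupyter notebook cell or an editor plugin without sending code to an external service.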

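Developers may also consider other tools depending on their requirements: Claude and Gemini are cloud-hosted models rather than local ones, while Aider is a command-line coding assistant that can be pointed at either hosted or local models.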

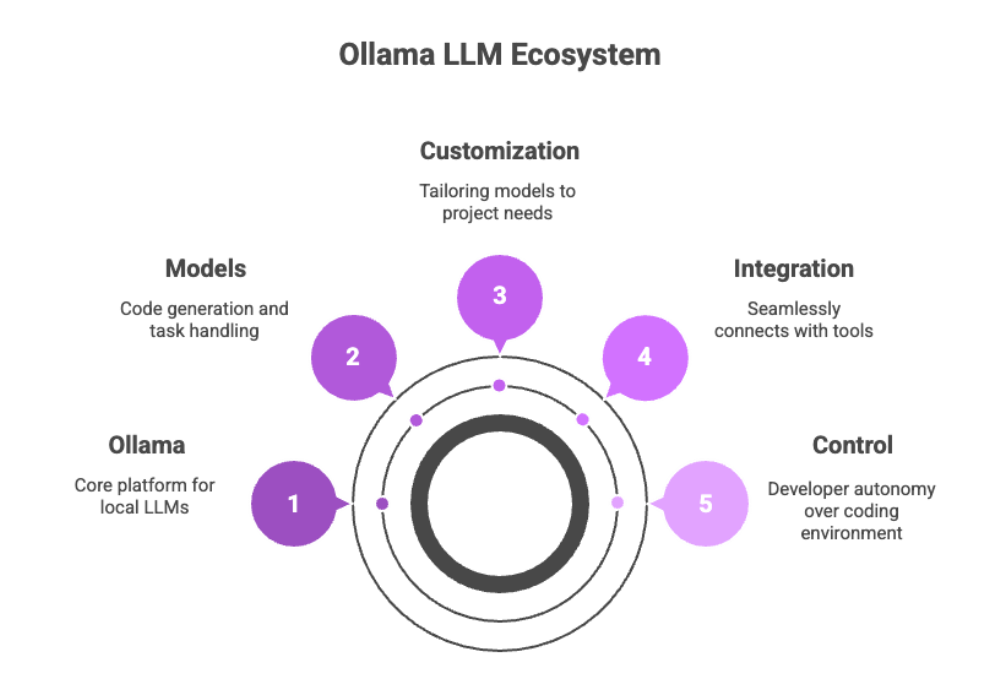

Ollama Models

Ollama has emerged as a leading platform for running local LLMs, offering developers a streamlined way to use powerful models for a wide range of coding tasks. Models served through Ollama can handle complex coding tasks across multiple programming languages, from Python code snippets to complex SQL queries. Ollama itself is open source, and models can be customized to project needs with a Modelfile that sets the base model, system prompt, and generation parameters.

Developers can easily create and customize Ollama models, taking advantage of openly available model weights and robust API endpoints. This flexibility allows seamless integration of Ollama models into existing development tools and workflows, making it simple to automate code completion, refactor code, or generate example code on demand.
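One common way to customize a model in Ollama is a Modelfile. The sketch below renders a minimal one from Python; the function name `render_modelfile`, the system prompt, and the temperature value are illustrative assumptions, while `FROM`, `PARAMETER`, and `SYSTEM` are Modelfile instructions.

```python
def render_modelfile(base_model: str, system_prompt: str,
                     temperature: float = 0.2) -> str:
    """Render a minimal Ollama Modelfile as text."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


modelfile_text = render_modelfile(
    "codellama",
    "You are a Python assistant. Reply with code and brief comments only.",
)
```

Writing `modelfile_text` to a file named `Modelfile` and running `ollama create py-helper -f Modelfile` would register the customized model under the (hypothetical) name `py-helper`.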

With its focus on local LLMs, Ollama empowers developers to maintain full control over their coding environment while benefiting from the latest advancements in open source AI tools.

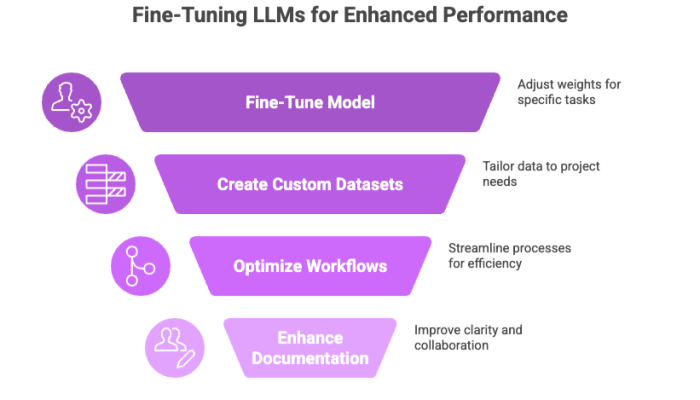

Fine-Tuning Local LLMs

To maximize performance, many developers choose to fine-tune local models on specific datasets or repositories. This process involves adjusting model weights to better suit tasks like:

Generating domain-specific source code

Adhering to internal coding standards

Improving complex code generation

To further optimize the integration and performance of fine-tuned local LLMs, custom datasets, workflows, or documentation can be created to address specific project needs and enhance collaboration.

Fine-tuning allows LLMs to learn from a developer’s own project structure, coding style, and preferred naming conventions. For instance, a Python-based data analytics team might fine-tune Code Llama on their Jupyter notebook history to generate more relevant function names and docstrings.

While fine-tuning requires training data, compute resources, and familiarity with machine learning, open-source tools such as Hugging Face Transformers and PEFT make the process more accessible.
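Fine-tuning starts with a dataset. As a rough sketch of how a team might turn its own code into instruction-style training records, the example below extracts every documented function from a Python source string and pairs its docstring with its source. The record fields `instruction` and `output` are an assumed layout, not a format required by any specific training tool.

```python
import ast
import json


def extract_pairs(source: str) -> list[dict]:
    """Return one instruction/answer record per documented function."""
    records = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            docstring = ast.get_docstring(node)
            code = ast.get_source_segment(source, node)
            if docstring and code:
                records.append({
                    "instruction": (
                        f"Write a Python function named {node.name}. {docstring}"
                    ),
                    "output": code,
                })
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)
```

Functions without docstrings are skipped on purpose, since they give the model no description to learn from.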

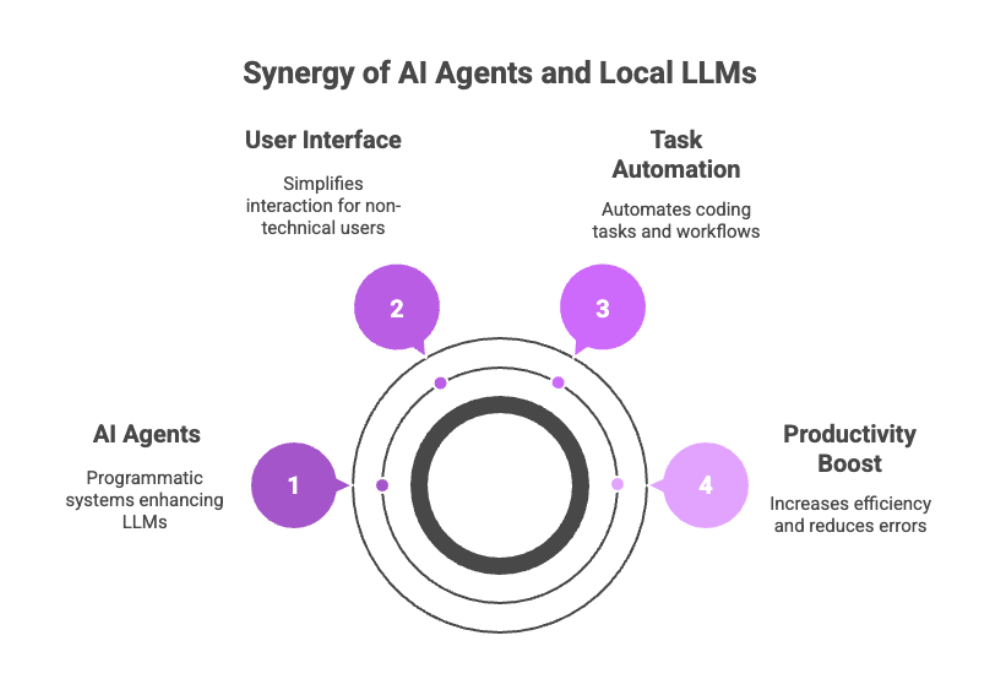

AI Agents and Local LLMs

Local LLMs can be further enhanced with AI agents—programmatic systems that interact with the LLM to automate and coordinate coding tasks. These agents provide:

A user-friendly interface for non-technical users

Smart chaining of actions like reading files, interpreting errors, and rewriting code

Support for prompt engineering to instruct models with task-specific directives

For example, a local agent powered by DeepSeek Coder could:

Analyze a CSV file

Generate Python code to perform data cleaning

Output a summary in markdown for documentation

This synergy between AI agents and local LLMs streamlines workflows, reduces repetitive coding tasks, and boosts productivity.
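The three-step CSV example above could be sketched as a small pipeline. The model is passed in as a plain callable so that any local backend can be plugged in; `profile_csv`, `run_cleaning_agent`, and the prompt wording are hypothetical names and choices made for illustration.

```python
import csv
import io
from typing import Callable


def profile_csv(text: str) -> dict:
    """Step 1: count rows and empty cells per column."""
    reader = csv.DictReader(io.StringIO(text))
    columns = reader.fieldnames or []
    missing = {name: 0 for name in columns}
    rows = 0
    for row in reader:
        rows += 1
        for name in columns:
            if not (row.get(name) or "").strip():
                missing[name] += 1
    return {"rows": rows, "missing": missing}


def run_cleaning_agent(csv_text: str, llm: Callable[[str], str]) -> str:
    """Steps 2 and 3: ask the model for cleaning code, then build a markdown summary."""
    profile = profile_csv(csv_text)
    prompt = (
        "Write Python code using the csv module to clean a file with "
        f"{profile['rows']} rows and these empty-cell counts per column: "
        f"{profile['missing']}."
    )
    code = llm(prompt)
    lines = ["# Data cleaning summary", "", f"- Rows: {profile['rows']}"]
    lines += [
        f"- Empty cells in `{name}`: {count}"
        for name, count in profile["missing"].items()
    ]
    # Indent the generated code by four spaces so markdown renders it as code.
    lines += ["", "Proposed cleaning code:", ""]
    lines += ["    " + line for line in code.splitlines()]
    return "\n".join(lines)
```

In practice `llm` would wrap a call to a local model; the generated code should still be reviewed before anyone runs it.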

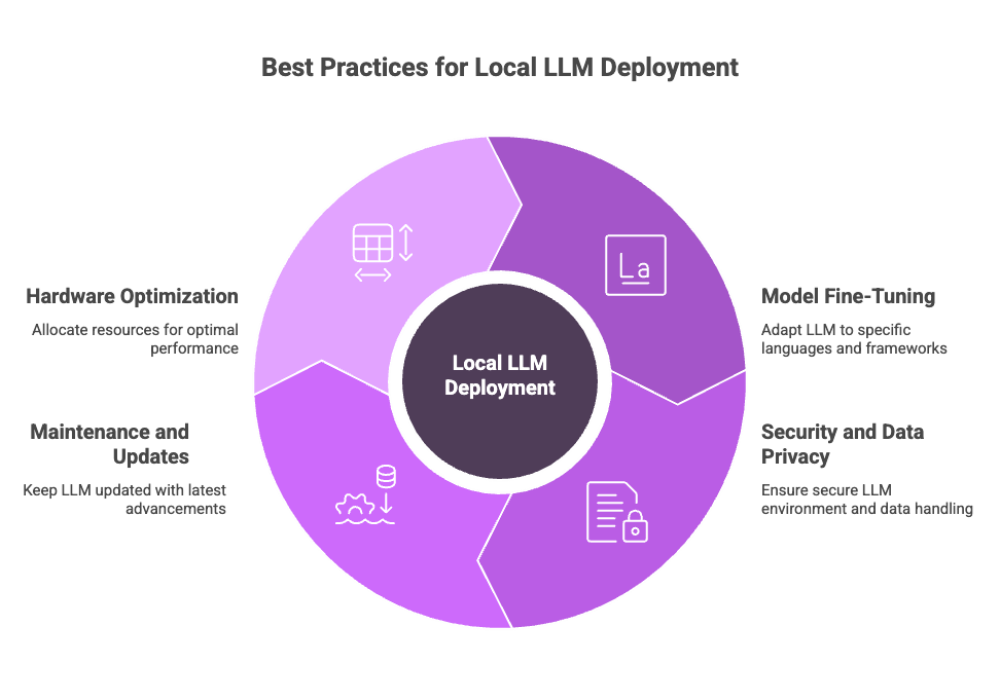

Best Practices for Local LLMs

To ensure optimal performance, security, and maintainability, developers should follow best practices when deploying and using local LLMs for coding tasks. Automating routine work, such as fixing type errors, explaining code, or adding documentation, can significantly improve overall productivity by freeing up time for more complex problem-solving.

Always review and thoroughly test generated code to ensure its quality and reliability. For more information on best practices, see our article on mastering the software coding process for effective development.

1. Model Fine-Tuning

Adapt your LLM to specific programming languages and frameworks you regularly use (e.g., Django, Flask, NumPy).

Fine-tune on real-world code repositories to align the model with team standards or project-specific syntax.

2. Security and Data Privacy

Always run the LLM in a sandboxed or isolated environment to avoid unauthorized access to local files.

Use encryption and secure storage when feeding sensitive code or data to the model.

Avoid using models that require an external API key unless trust and compliance standards are verified.
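As a small step toward the isolation advice above, the sketch below runs model-generated code in a separate Python process with a timeout and a throwaway working directory. This is an assumption-laden illustration, not a security sandbox: it limits hangs and stray files, but the child process can still read anything the current user can. Stronger isolation needs containers or virtual machines.

```python
import subprocess
import sys
import tempfile


def run_isolated(code: str, timeout: float = 5.0) -> subprocess.CompletedProcess:
    """Run code in a child Python process inside a temporary directory.

    Raises subprocess.TimeoutExpired if the code runs longer than `timeout`.
    """
    with tempfile.TemporaryDirectory() as workdir:
        return subprocess.run(
            # -I is Python's isolated mode: ignores environment variables
            # and the user site-packages directory.
            [sys.executable, "-I", "-c", code],
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
```

The returned object exposes `returncode`, `stdout`, and `stderr`, which makes it straightforward to feed errors back to the model for another attempt.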

3. Maintenance and Updates

Regularly update models and tools to benefit from bug fixes, new capabilities, and security patches.

Participate in open-source communities to stay current on the latest advancements in coding LLMs.

4. Hardware Optimization

Allocate adequate resources—especially GPU memory and CPU cores—for smoother inference and reduced latency.

Optimize model deployment with quantized formats like GGUF and inference frameworks like llama.cpp or vLLM.
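The arithmetic behind these hardware recommendations is simple enough to sketch: weight memory is roughly the parameter count times the bits per weight, divided by eight. The 1.2 overhead factor below is an assumed allowance for the KV cache and runtime buffers, and real quantized files often use slightly more bits per weight than their nominal figure.

```python
def weight_memory_gb(num_parameters: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


def fits_in_vram(num_parameters: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """Return True if weights plus an assumed overhead fit in the given VRAM."""
    return weight_memory_gb(num_parameters, bits_per_weight) * overhead <= vram_gb
```

Under these assumptions a 7-billion-parameter model needs about 14 GB at 16 bits per weight but about 3.5 GB at 4 bits, which is why quantization matters on consumer GPUs.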

By following these practices, developers can make the most out of their local LLM infrastructure while preserving performance and security.

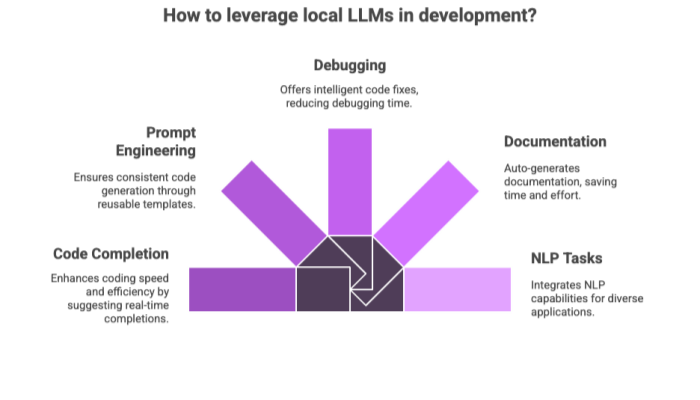

Local LLMs for Real-World Coding

Local LLMs are not theoretical tools—they’re already playing a transformative role in real-world development environments. Developers are increasingly relying on local LLMs for generating code, which introduces unique workflows and challenges, such as debugging, integrating with existing tools, and ensuring code quality.

As these models continue to evolve, it’s crucial to include diverse and well-structured examples in their training data, such as high-quality programming problems and instruction-answer pairs, to further enhance their performance and reliability.

Use Cases Include:

Code Completion: Suggesting completions for functions, classes, or entire scripts in real-time.

Prompt Engineering: Creating reusable prompt templates for consistent code generation.

Debugging: Locally analyzing errors and offering intelligent code fixes.

Documentation: Auto-generating docstrings or README content based on source code.

Natural Language Processing Tasks: Local models like DeepSeek Coder can also handle text classification, entity recognition, and sentiment analysis, blending code and NLP tasks in one toolset.
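For the prompt engineering and documentation use cases above, a reusable template keeps requests consistent across a team. The sketch below uses the standard library's `string.Template`; the template wording, the `Google-style` default, and the function name are illustrative assumptions.

```python
from string import Template

# A reusable prompt template for docstring generation.
DOCSTRING_PROMPT = Template(
    "You are a $language assistant.\n"
    "Write a $style docstring for the function below. "
    "Return only the docstring.\n\n"
    "$code"
)


def render_docstring_prompt(code: str, language: str = "Python",
                            style: str = "Google-style") -> str:
    """Fill the template with the target code and style preferences."""
    return DOCSTRING_PROMPT.substitute(language=language, style=style, code=code)
```

Keeping templates in one module means a change to the instructions applies everywhere the prompt is used.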

With full control over fine-tuning data and model weights, developers can tailor these models to their exact workflows, whether for rapid prototyping or production-ready code deployment.

Running LLMs Locally

While the benefits are strong, running a large language model locally requires adequate hardware and software resources to operate smoothly.

Minimum Recommended Setup:

Modern multi-core CPU

16GB+ RAM

Discrete GPU with at least 8GB VRAM (e.g., NVIDIA RTX series)

SSD storage for quick model load times

Local LLMs can be run with:

PyTorch, TensorFlow, or ONNX Runtime

Command-line tools like llamafile, Ollama, and LMDeploy

GUI wrappers like LM Studio, which simplify model switching and deployment

For larger models, integration with cloud GPUs (via AWS or GCP) is possible while maintaining local orchestration. Hybrid setups allow high performance without compromising control.

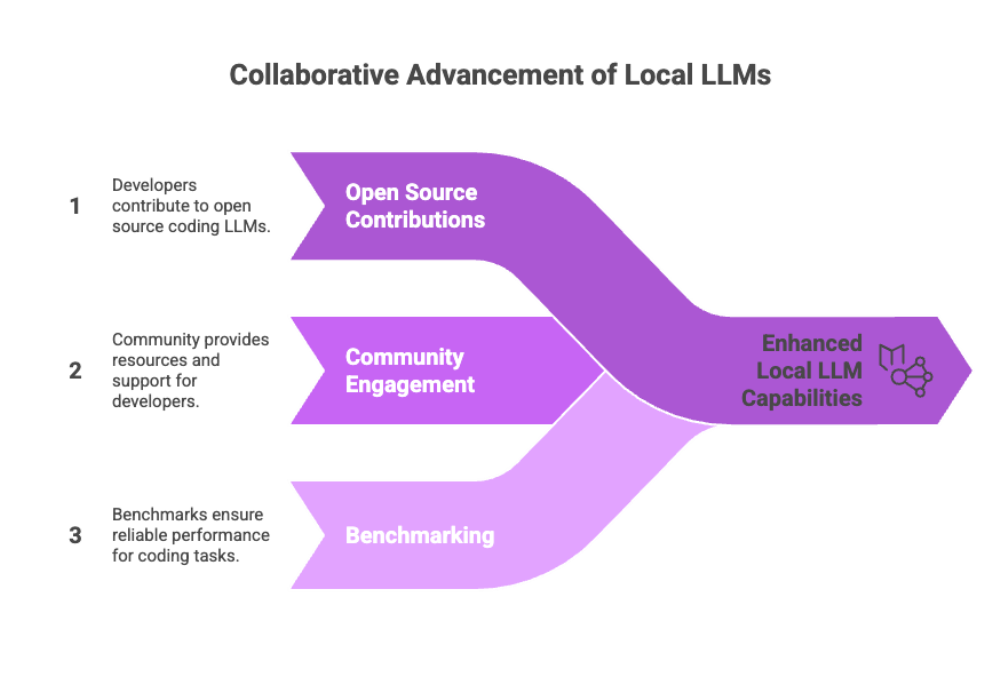

Local LLM Community

The local LLM community is a vibrant and rapidly expanding ecosystem, driven by developers and organizations passionate about open source coding LLMs. This community is instrumental in advancing the capabilities of local models, sharing example code, and providing practical resources such as tutorials, forums, and documentation. By engaging with the local LLM community, developers can access a wealth of knowledge on best practices for code generation, coding tasks, and model optimization.

Openly released models like Code Llama and DeepSeek Coder have set new standards for code generation and completion, and their performance is regularly evaluated against key coding benchmarks like HumanEval and MBPP. These benchmarks give a comparable, though partial, measure of how reliably local LLMs handle real-world coding tasks.

Whether you’re looking for guidance on integrating a local model, troubleshooting issues, or contributing to open source projects, the local LLM community offers invaluable support and fosters collaboration among developers working with coding LLMs.

Local LLM Support

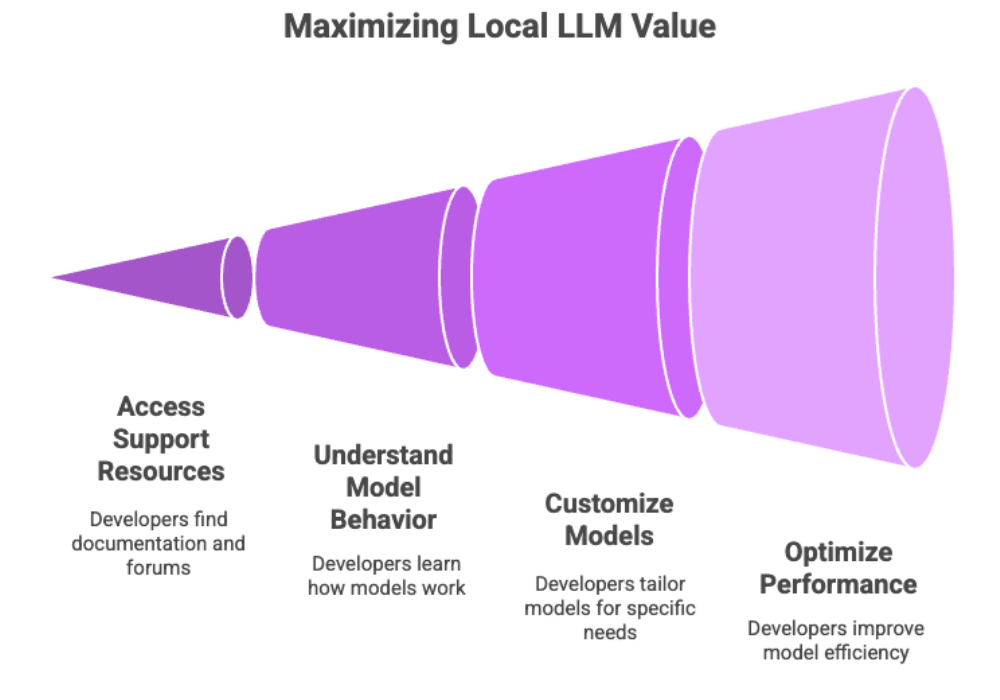

Robust support is essential for developers adopting local LLMs, and the open source coding LLM ecosystem delivers on this front. Developers can tap into a wide range of support resources, including detailed documentation, example code, and active online forums dedicated to local models and coding LLMs. These resources make it easier to resolve issues, understand model behavior, and optimize performance for both basic and complex coding tasks.

In addition to community-driven support, many open source coding LLMs are designed for easy customization, allowing developers to fine-tune models for specific programming languages or unique project requirements. This adaptability lets local LLMs fit into diverse workflows, from automating code completion to tackling complex coding challenges. By leveraging the available support, developers can maximize the value of their local LLMs and stay ahead in the rapidly evolving landscape of AI-powered coding.

Comparison of Local LLMs

Here’s a quick side-by-side of the top local LLMs for Python and general-purpose code generation:

| Model | Strengths | Weaknesses |
|---|---|---|
| Code Llama | Strong Python performance, open-source, stable | Requires more VRAM for larger versions |
| DeepSeek Coder | Long context, multilingual code support | Still in active development; less documentation |
| Ollama Models | Easy install, optimized for consumer hardware | Smaller models may lack advanced capabilities |
| StarCoder | Good JavaScript and Python output | Heavier compute footprint |

When comparing these models, it's important to evaluate each model's ability to handle specific coding tasks and benchmarks, such as HumanEval, MBPP, and DS-1000, to understand their strengths and limitations.
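Results on benchmarks such as HumanEval are usually reported as pass@k: the probability that at least one of k generated samples passes the unit tests. The sketch below implements the standard unbiased estimator, 1 - C(n-c, k) / C(n, k), where n samples were generated per problem and c of them passed. The function name is our own choice.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n samples of which c passed the tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k includes a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)
```

Averaging this value over all problems in a benchmark gives the headline score, so running it on your own task set is a reasonable way to compare candidate models on code that resembles your project.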

Each model serves a different need—from lightweight scripting on laptops to enterprise-scale deployments with fine-tuned capabilities.

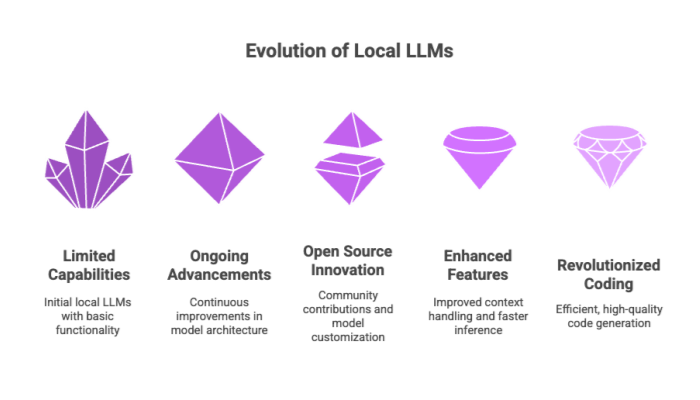

Future of Local LLMs

The future of local LLMs is bright, with ongoing advancements poised to transform how developers approach code generation, natural language processing tasks, and complex coding tasks. As local models continue to evolve, their ability to handle increasingly complex tasks and larger context windows will make them indispensable tools for software development. The open source nature of these models will fuel further innovation, enabling developers to fine-tune and customize LLMs for specialized applications and industries.

Key features such as improved context handling, faster inference, and enhanced support for various programming languages will drive broader adoption of local LLMs. As the community grows and more developers contribute to model development and benchmarking, we can expect local LLMs to deliver even greater cost savings, data privacy, and full control over coding workflows.

Ultimately, local LLMs will empower developers to create high-quality code more efficiently, revolutionizing the way we build and maintain software in an increasingly AI-driven world.

Conclusion

Choosing a local LLM for Python coding in 2025 is no longer a fringe decision—it’s a mainstream strategy for developers who want:

Full control over coding workflows

Cost-effective alternatives to commercial AI tools

Private, secure environments for sensitive projects

Customizable AI that evolves with their needs

This blog post aims to share practical insights and experiences to help developers make informed decisions about local LLMs for Python coding.

Open-source coding LLMs like Code Llama and DeepSeek Coder, run through tools such as Ollama, empower individuals and teams to build smarter, faster, and more securely. With the support of an active open-source community and ever-growing model options, the local LLM revolution in software development is just getting started.