EU digital omnibus reshapes GDPR and AI rules across Europe

The European Commission has unveiled a sweeping “digital omnibus” package that would relax parts of GDPR, delay core provisions of the AI Act and streamline several other flagship digital laws.

Supporters call it a long-overdue simplification that cuts red tape, reduces cookie fatigue and saves billions in compliance costs. Critics, including major civil-rights groups, describe it as a “massive rollback” of digital protections that risks weakening the very foundations of EU tech policy.

For executives, the move is not just another regulatory tweak: if adopted, it would reshape how data, consent and AI governance work in Europe for the rest of the decade.

Key takeaways

- The digital omnibus would delay central AI Act obligations and soften aspects of GDPR and ePrivacy, allowing broader use of personal data for AI training and fewer explicit consent prompts.
- Business groups welcome the reforms as a way to boost competitiveness, while civil-rights organisations warn they undermine hard-won digital protections and user control.
- Enterprises should treat the proposals as a signal that Europe wants both innovation and enforceable rights, and use this transition period to modernise data governance, AI oversight and product design.

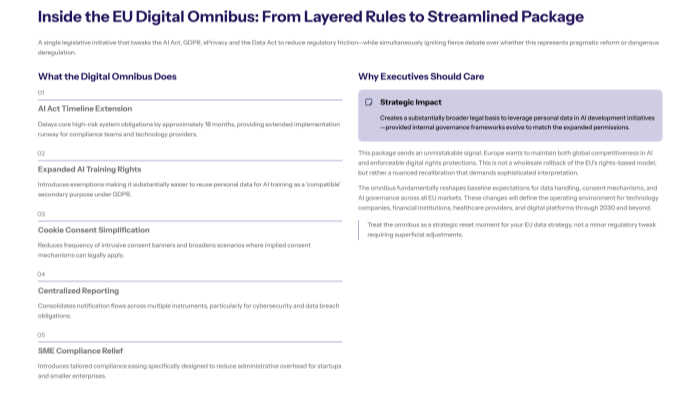

What the EU digital omnibus proposal actually includes

The “digital omnibus” is a single legislative initiative aimed at streamlining several existing EU tech rules rather than a brand-new regulation. The package touches the AI Act, GDPR, the ePrivacy Directive and the Data Act, with the stated goal of simplifying overlapping obligations and cutting administrative overhead.

According to early descriptions of the proposal, the Commission believes the current framework has become too fragmented and burdensome, particularly for smaller firms. It argues that adjustments are needed to cut compliance costs, reduce repetitive consent prompts and clarify how data can be used for AI development while still preserving “high levels” of protection.

From rulebook to omnibus simplification

The EU originally built its digital regime through a stack of separate laws: GDPR to govern personal data, the ePrivacy Directive to regulate tracking and communications, the Digital Services Act for intermediary responsibility, the Digital Markets Act for gatekeeper power, and the AI Act for algorithmic risk.

The omnibus is an explicit acknowledgement that this layered approach has created complexity. It attempts to:

- align definitions and concepts across multiple instruments
- rationalise reporting and cybersecurity notification requirements
- reduce overlaps between AI Act obligations and GDPR data rules
- streamline cookie consent and online tracking provisions

In principle, simplification makes sense. In practice, the specific changes proposed have triggered intense argument about whether simplification has tipped into deregulation.

Headline changes at a glance

A high-level comparison of current rules versus the omnibus direction helps clarify what is at stake:

| Area | Current framework | Proposed omnibus direction |
|---|---|---|
| AI Act timelines | Earlier enforcement for high-risk systems | Delay of key high-risk obligations by up to 18 months |
| Data use for AI training | Strict consent and purpose limitation under GDPR | Easier use of personal data for AI training, with new exemptions |
| Cookie consent | Frequent consent prompts and banners | Fewer banners, broader cases of implied consent |
| Reporting and cybersecurity | Multiple, fragmented reporting channels | More centralised notification procedures |
| Small company compliance | Same framework with limited simplifications | Tailored compliance easing for SMEs and startups |

Sources such as The Guardian and The Verge note that, if adopted as drafted, the changes would make it significantly easier for tech firms to use personal data to train models and reduce the number of times users must explicitly consent to tracking.

Who sees a rollback and who welcomes the change

Reactions to the digital omnibus fall broadly into two camps: those who see it as an overdue modernisation, and those who view it as a reversal of the EU’s role as the world’s strictest digital regulator.

Business and industry push for lighter rules

European business associations and US-linked tech lobbies have applauded the proposals. They argue that:

- cumulative regulation has made Europe less attractive for AI and data-intensive startups
- repetitive consent steps alienate users without meaningfully improving privacy
- fragmented obligations across multiple laws create unnecessary legal friction

Groups like the Computer and Communications Industry Association (CCIA), whose members include Amazon, Apple, Google and Meta, have even suggested the omnibus does not go far enough and should be the starting point for a broader review of the entire digital rulebook.

From their perspective, this is about competitiveness. Faced with huge AI investments by US and Chinese companies, Europe risks falling behind unless it trims what industry sees as “regulatory fat”.

Civil-rights advocates warn of structural erosion

On the other side, organisations such as European Digital Rights (EDRi) and privacy campaigners argue that the omnibus crosses a line from simplifying to weakening rules. EDRi has warned that the package risks dismantling the “foundations of human rights and tech policy in the EU,” particularly by making it easier to reuse intimate data for AI training without explicit consent.

Former officials, including ex-commissioner Thierry Breton, have also cautioned against unpicking existing protections under the banner of “anti-red tape” reform. Their concern is that incremental adjustments now could be followed by further deregulation later, leaving citizens with significantly less control than GDPR originally promised.

This tension reflects a deeper issue: AI models trained on personal data can exhibit opaque behaviour, bias and unexpected outputs, especially when not constrained by strong governance. Cognativ has previously examined structural problems in model behaviour in its coverage of why some AI models struggle with edge cases and why pure technical fixes cannot substitute for clear policy boundaries.

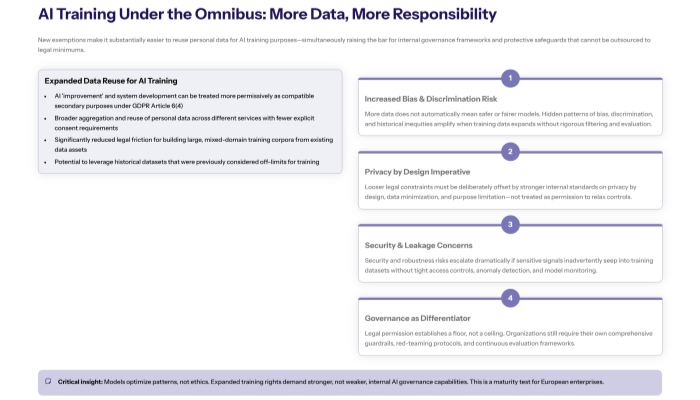

Implications for AI training and data use

One of the most contested elements of the digital omnibus is the proposal to relax how personal data can be used in AI training. Critics worry that the combination of looser consent requirements and delayed AI Act enforcement will create a window where powerful models can be trained on sensitive information with limited oversight.

Expanded scope for data reuse

Under a stricter reading of GDPR, companies must ensure that any reuse of personal data for AI training is compatible with the original purpose for which it was collected, which often means obtaining explicit consent. The omnibus would introduce new exemptions that:

- allow broader reuse of data for AI training, including for “improvement” of services
- make it easier for companies to treat AI development as a secondary compatible purpose
- reduce friction in accessing and aggregating datasets across different services

For AI teams, this looks attractive: fewer consent barriers, larger training corpora, smoother internal data flows. For data-protection officers and risk managers, it looks more ambiguous. The potential for hidden bias, discrimination or misuse grows as more data types are swept into training sets without granular control.

Cognativ’s analysis of MIT research on models lacking inherent values underscores why governance cannot be an afterthought. The fact that models optimise for patterns, not ethics, means expanded training rights must be matched by stronger internal safeguards, not just legal tweaks.

Tension with AI safety and security concerns

Looser data reuse rules also intersect with security and robustness issues. Poor-quality or misaligned training data can increase the risk of harmful outputs, leakage of sensitive information or exploitable behaviour in deployed systems. The debate about “AI brain rot” from exposure to low-quality or self-generated content is one side of this discussion; the other is the quiet risk that sensitive personal data embedded in training sets reappears in tools that feel conversational and benign.

For enterprises, the key takeaway is that legal permission does not equal risk-free practice. Internal standards for data minimisation, privacy by design and secure development must be more stringent than the lowest common regulatory denominator.
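To make “more stringent than the lowest common regulatory denominator” concrete, one common pattern is an allowlist applied before any record enters a training set. The sketch below is illustrative only: it assumes records arrive as Python dictionaries, and the field names and the `APPROVED_TRAINING_FIELDS` allowlist are hypothetical, not taken from the omnibus or from any specific tool.

```python
from typing import Any, Iterable

# Hypothetical internal allowlist: only fields an internal review has approved
# for model training. Everything else is dropped, even where the law might
# permit its use.
APPROVED_TRAINING_FIELDS = frozenset({"ticket_text", "product_category", "resolution_code"})


def minimise_record(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the record keeping only allowlisted fields."""
    return {key: value for key, value in record.items() if key in APPROVED_TRAINING_FIELDS}


def minimise_records(records: Iterable[dict[str, Any]]) -> list[dict[str, Any]]:
    """Minimise every record and skip any that contain no approved fields at all."""
    minimised = (minimise_record(record) for record in records)
    return [record for record in minimised if record]


if __name__ == "__main__":
    raw = [
        {"ticket_text": "Card declined at checkout", "email": "a@example.com", "product_category": "payments"},
        {"email": "b@example.com", "phone": "+49 000 000"},
    ]
    print(minimise_records(raw))
    # [{'ticket_text': 'Card declined at checkout', 'product_category': 'payments'}]
```

An allowlist fails closed: a new field added upstream stays out of training data until someone explicitly approves it, rather than being included by default because it is legally permitted.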

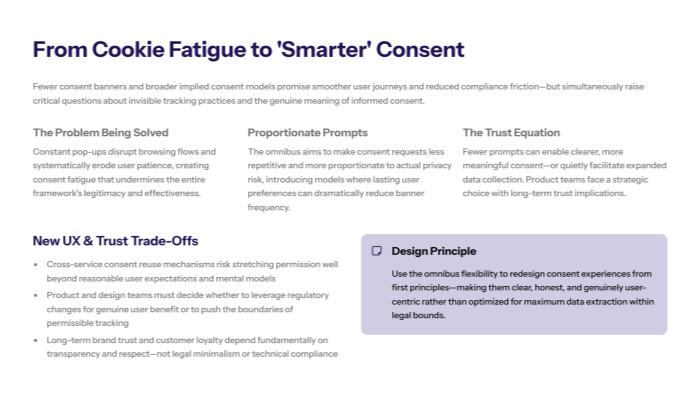

Cookie fatigue, consent and user experience

Another high-profile plank of the digital omnibus is the promise to end “cookie banner fatigue.” The Commission wants to reduce the number of prompts users must click through, especially for routine or low-risk tracking.

Reducing banners without expanding invisible tracking

Few users or product teams love constant pop-ups. But most privacy advocates argue that banners, however annoying, at least give individuals periodic visibility into tracking practices. Moving toward fewer prompts could:

- streamline user journeys and reduce bounce rates
- make privacy choices more persistent and meaningful if designed well
- or, conversely, normalise broader data collection under default settings

The key design question is where the line is drawn. If consent is captured once and then applied across multiple services and contexts, the scope for silent expansion of tracking increases. The omnibus debate is therefore not only a legal one but a product-design issue.
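One way to stop a single consent capture from silently covering new services or purposes is to store consent per purpose and per service, and to check both at the point of use. The sketch below assumes a hypothetical `ConsentRecord` structure, illustrative purpose and service labels, and an assumed one-year re-confirmation interval; none of these come from the omnibus, GDPR or the ePrivacy Directive.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, Optional


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical record of one consent decision, scoped to a purpose and named services."""
    user_id: str
    purpose: str                 # illustrative labels, e.g. "analytics"
    services: frozenset[str]     # only the services the user was actually shown
    granted_at: datetime
    max_age: timedelta = timedelta(days=365)  # assumed internal re-confirmation interval


def is_permitted(
    records: Iterable[ConsentRecord],
    user_id: str,
    purpose: str,
    service: str,
    now: Optional[datetime] = None,
) -> bool:
    """True only if a non-expired consent covers this exact purpose and service."""
    if now is None:
        now = datetime.now(timezone.utc)
    return any(
        record.user_id == user_id
        and record.purpose == purpose
        and service in record.services
        and now - record.granted_at <= record.max_age
        for record in records
    )


if __name__ == "__main__":
    granted = datetime(2025, 1, 10, tzinfo=timezone.utc)
    records = [ConsentRecord("u1", "analytics", frozenset({"web_shop"}), granted)]
    check_time = datetime(2025, 6, 1, tzinfo=timezone.utc)
    print(is_permitted(records, "u1", "analytics", "web_shop", now=check_time))           # True
    print(is_permitted(records, "u1", "analytics", "mobile_app", now=check_time))         # False: other service
    print(is_permitted(records, "u1", "ad_personalisation", "web_shop", now=check_time))  # False: other purpose
```

Because the check matches the exact purpose and service, extending tracking to a new product would require a new, visible consent instead of inheriting an old one.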

Enterprises that take the moment to rethink consent UX from first principles can create experiences that are both less intrusive and more honest. Those that simply use the omnibus as an excuse to collect more without clear explanation risk reputational damage and user backlash, even if they remain technically compliant.

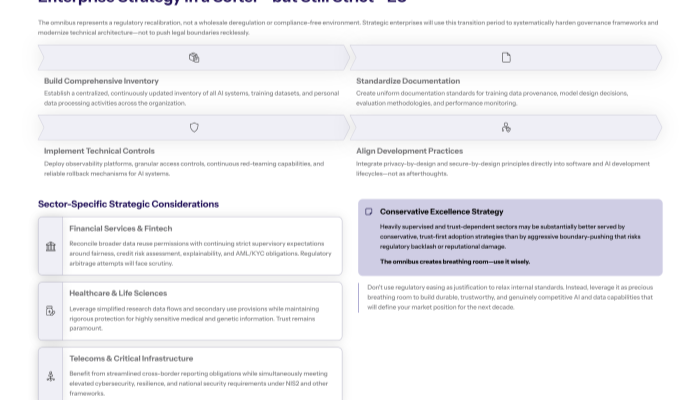

Enterprise strategy in a softer but still strict Europe

From an enterprise point of view, the digital omnibus is best understood not as deregulation, but as a recalibration. Europe is trying to remain both a champion of rights and a viable location for AI and data-driven innovation.

Governance and architecture as differentiators

Organisations that invest now in strong AI and data governance will have a structural advantage, regardless of how the final text lands. This involves:

- building centralised inventories of AI systems and datasets
- standardising documentation for training data, model design and evaluation
- implementing technical controls for observability, access, red-teaming and rollback
- aligning software development processes with security and privacy-by-design principles

Cognativ’s AI services and secure development offering are rooted in exactly this idea: that compliant, trustworthy systems depend on architecture and process as much as on legal interpretation.
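The first two governance steps listed in this section, a central inventory and standardised documentation, can start as a simple structured register that flags gaps automatically. The sketch below is a hypothetical illustration: the `AISystemEntry` fields, the internal risk-tier labels and the rule that high-risk systems need a recorded red-team exercise are assumptions, not requirements taken from the AI Act or the omnibus.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AISystemEntry:
    """Hypothetical inventory entry linking an AI system to its datasets and documentation."""
    name: str
    owner: str
    risk_tier: str                                # internal label, e.g. "high" or "limited" (assumed)
    training_datasets: list[str] = field(default_factory=list)
    data_sheet_url: Optional[str] = None          # documentation of training data
    model_card_url: Optional[str] = None          # documentation of design and evaluation
    last_red_team: Optional[str] = None           # ISO date of the last red-team exercise
    rollback_plan: bool = False


def missing_documentation(entry: AISystemEntry) -> list[str]:
    """List the governance artefacts this entry is still missing."""
    gaps = []
    if not entry.training_datasets:
        gaps.append("training_datasets")
    if entry.data_sheet_url is None:
        gaps.append("data_sheet_url")
    if entry.model_card_url is None:
        gaps.append("model_card_url")
    if entry.risk_tier == "high" and entry.last_red_team is None:
        gaps.append("last_red_team")
    if not entry.rollback_plan:
        gaps.append("rollback_plan")
    return gaps


def inventory_report(entries: list[AISystemEntry]) -> dict[str, list[str]]:
    """Map each system name to its gaps, omitting fully documented systems."""
    report = {}
    for entry in entries:
        gaps = missing_documentation(entry)
        if gaps:
            report[entry.name] = gaps
    return report


if __name__ == "__main__":
    systems = [
        AISystemEntry("credit-scoring-v2", "risk-analytics", "high",
                      training_datasets=["loans_2019_2024"],
                      data_sheet_url="https://intranet.example/ds/loans"),
        AISystemEntry("faq-chatbot", "support", "limited",
                      training_datasets=["help_articles"],
                      data_sheet_url="https://intranet.example/ds/help",
                      model_card_url="https://intranet.example/mc/faq",
                      rollback_plan=True),
    ]
    print(inventory_report(systems))
    # {'credit-scoring-v2': ['model_card_url', 'last_red_team', 'rollback_plan']}
```

Keeping the register as data rather than a spreadsheet makes it straightforward to run the same gap check before each model release, whatever shape the final rules take.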

Sector-specific adaptation

Different industries will experience the omnibus in different ways:

- Financial services and fintech will need to reconcile looser data reuse options with stringent supervisory expectations on fairness, creditworthiness and anti-discrimination.
- Healthcare and life sciences may welcome simplified data-sharing for research, but must handle heightened sensitivity around medical and biometric data.
- Telecom and critical infrastructure will balance streamlined reporting obligations against increasing cyber and operational resilience expectations.

For each, the strategic question is whether to use regulatory easing to push boundaries or to treat it as breathing room to build more robust internal controls. In many cases, a conservative approach will be better aligned with long-term trust and regulatory relationships, particularly in heavily supervised sectors such as banking and fintech.

Conclusion

The EU’s digital omnibus proposal marks a significant shift in how Europe manages the tension between innovation and protection. By delaying parts of the AI Act, relaxing certain GDPR constraints and simplifying cookie rules, the Commission is clearly signalling that it does not want regulation to become an outright barrier to AI and data-driven growth. At the same time, the intensity of the backlash from civil-rights groups shows that any perceived erosion of user control will face sustained scrutiny in the months ahead.

For enterprises, the smartest response is not to exploit every new flexibility, but to use this moment to modernise governance, architecture and product design. Organisations that can demonstrate both technical excellence and principled stewardship of data will be best placed to operate in a Europe that remains demanding, even if some of its rules are being tweaked in the name of simplification.

If your organisation is re-evaluating its AI and data roadmap in light of these changes, it may be the right time to explore dedicated support. Learn more about Cognativ’s broader AI services and solutions portfolio and how we work across regulated industries such as banking and fintech to align strategy, governance and delivery.

To keep a clear view of how AI policy, infrastructure and market signals evolve, follow What Goes On, Cognativ’s weekly tech digest, via the Cognativ AI and software insights blog for continuous, executive-level analysis.