Nvidia’s Current Strengths in the AI Chip Market

Nvidia sits at the center of the AI chip market, with its GPUs powering everything from data centers to autonomous driving and generative AI platforms. Its standing as one of the most valuable companies in the tech sector comes not just from its market share but also from its ability to set the pace of innovation.

Unlike many other big tech companies still experimenting with AI technology, Nvidia has decades of GPU expertise, which allowed it to scale quickly when the AI wave hit. The company has evolved from a maker of gaming graphics cards into a leader in AI computing and AI infrastructure.

Key aspects of Nvidia’s dominance

Early adoption of artificial intelligence use cases.

Investment in AI research and in ecosystems for AI developers.

A strong grip on data centers, which now account for a major part of Nvidia’s revenue.

Industry-defining platforms like Nvidia AI Enterprise, which provides AI software and management tools for enterprise-scale deployments on Nvidia’s AI accelerators.

This ecosystem effect means Nvidia benefits not just from selling AI chips but from the integrated AI systems that surround them.

GPU Architecture and Innovation

At the core of Nvidia’s success is its GPU architecture, increasingly tailored to AI workloads. Nvidia has built cutting-edge GPUs optimized for AI, from centralized cloud servers to edge devices, aiming to deliver more computing power for each watt consumed.

Highlights of Nvidia’s GPU Technology

Massively parallel computing on graphics processing units (GPUs), now applied to AI workloads rather than just rendering images.

Proprietary platforms like Nvidia’s CUDA (Compute Unified Device Architecture) that lock in developers and enable accelerated computing across industries.

Specialized AI accelerators for autonomous driving, the PC chip market, and even edge deployments.

Energy-efficiency improvements through optimized GPU design, reducing operating costs without sacrificing cutting-edge performance.

This constant GPU innovation helps keep Nvidia’s GPUs the default choice for running AI models as those models scale, powering AI across industries.
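To make the parallel-computing point concrete: the workhorse operation in AI models is matrix multiplication, and each element of the output matrix can be computed independently of every other element. That independence is what lets a GPU spread the work across thousands of threads. The sketch below is a minimal, CPU-only illustration in Python, assuming plain nested lists as matrices; the function names are hypothetical, and a Python thread pool stands in for GPU threads purely to show the decomposition, not to demonstrate a speedup (CPython threads will not run this arithmetic truly in parallel).

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

Matrix = list[list[float]]


def dot_cell(a: Matrix, b: Matrix, row: int, col: int) -> float:
    """Compute one output element C[row][col]; it depends on no other output element."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul_data_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Matrix product where every output cell is an independent task.

    On a GPU, a CUDA kernel would typically map each (row, col) pair to its own
    hardware thread; here a CPU thread pool stands in for that idea.
    """
    n_rows, n_cols = len(a), len(b[0])
    # Enumerate every output cell in row-major order.
    cells = list(product(range(n_rows), range(n_cols)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs.
        values = list(pool.map(lambda rc: dot_cell(a, b, *rc), cells))
    # Reassemble the flat, row-major list of results into a matrix.
    return [values[r * n_cols:(r + 1) * n_cols] for r in range(n_rows)]


if __name__ == "__main__":
    a = [[1.0, 2.0], [3.0, 4.0]]
    b = [[5.0, 6.0], [7.0, 8.0]]
    print(matmul_data_parallel(a, b))  # [[19.0, 22.0], [43.0, 50.0]]
```

Because no cell waits on another, adding more workers (or, on real hardware, more GPU cores) scales the work without changing the algorithm, which is the property GPU architectures are built to exploit.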

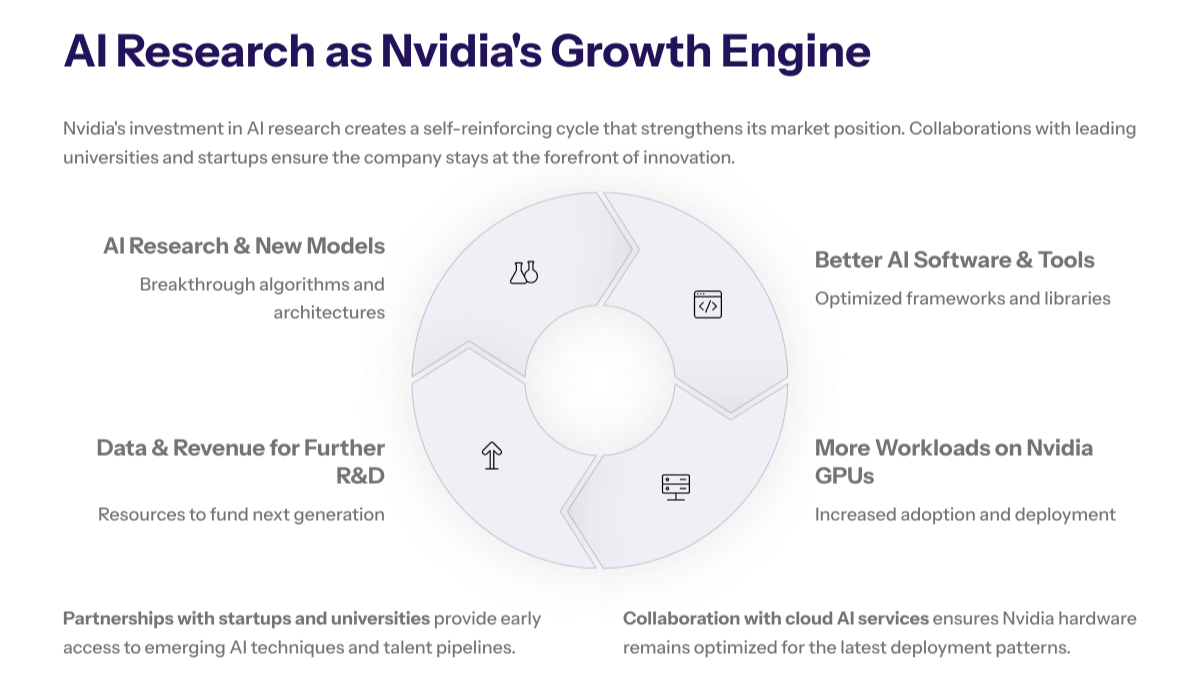

AI Research and Development

If GPU architecture is the foundation, AI research is Nvidia’s growth engine. The company reinvests heavily in new AI models, AI software, and partnerships that encourage AI developers to stay within its ecosystem.

Areas of focus in Nvidia’s AI research include:

Generative AI: Supporting the training of massive models that fuel the next wave of AI applications.

AI accelerators: Specialized Nvidia chips for robotics, healthcare, and edge AI scenarios.

AI factories: Nvidia’s framing of data centers in which its general-purpose GPUs act as “factories” that continuously train and refine AI models.

Collaboration: Close work with AI startups, universities, and partners such as Microsoft’s AI services to accelerate adoption of AI technology.

This dual strategy of in-house research plus external collaboration helps Nvidia keep driving the AI revolution and creates a self-reinforcing cycle: as more AI developers train on Nvidia’s GPUs, more AI workloads are optimized for its chips, which further strengthens Nvidia’s dominance.

Strategic Investments for Growth

Beyond AI research, Nvidia has been deliberate in making strategic investments that expand its reach:

Acquisitions: Purchases of networking companies and software providers (such as its acquisition of Mellanox) mean Nvidia offers a fully integrated ecosystem rather than just GPU hardware.

Startups: By funding promising AI startups, Nvidia gains early access to generative AI breakthroughs and makes it harder for competitors to gain a foothold.

Enterprise Solutions: Nvidia AI Enterprise ties AI software and AI models together into one unified platform for corporations, built to run on Nvidia’s AI accelerators.

Cloud Partnerships: Collaboration with Google Cloud, Microsoft Azure, and other cloud platforms means that even rival tech giants rely on Nvidia’s ecosystem.

These strategic investments secure Nvidia’s role not just as a chip maker but as the backbone of AI infrastructure.

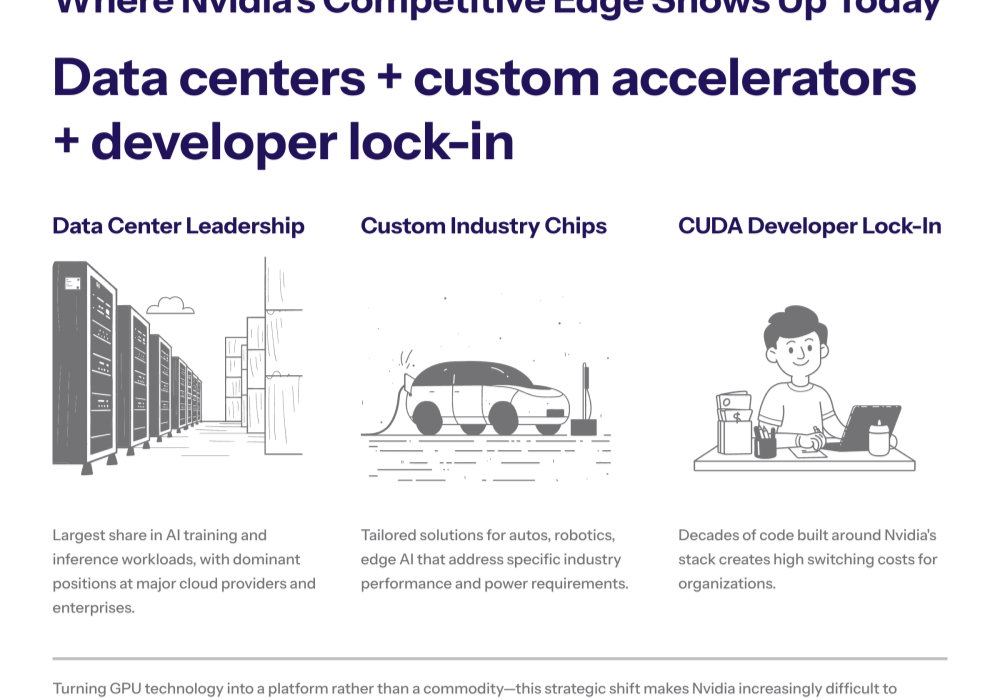

Nvidia’s Competitive Edge Today

So how does Nvidia maintain its competitive edge right now?

By leveraging its market share in data centers, the largest and most profitable AI workload segment.

By providing specialized chips and AI accelerators for industries like autonomous driving.

By maintaining deep developer lock-in through its CUDA platform, which makes it difficult for rivals to challenge Nvidia.

By turning GPU technology into a platform business rather than a commodity.

Even as other tech giants try to break Nvidia’s near-monopoly by designing their own chips, Nvidia holds the advantage of an integrated ecosystem built over decades.

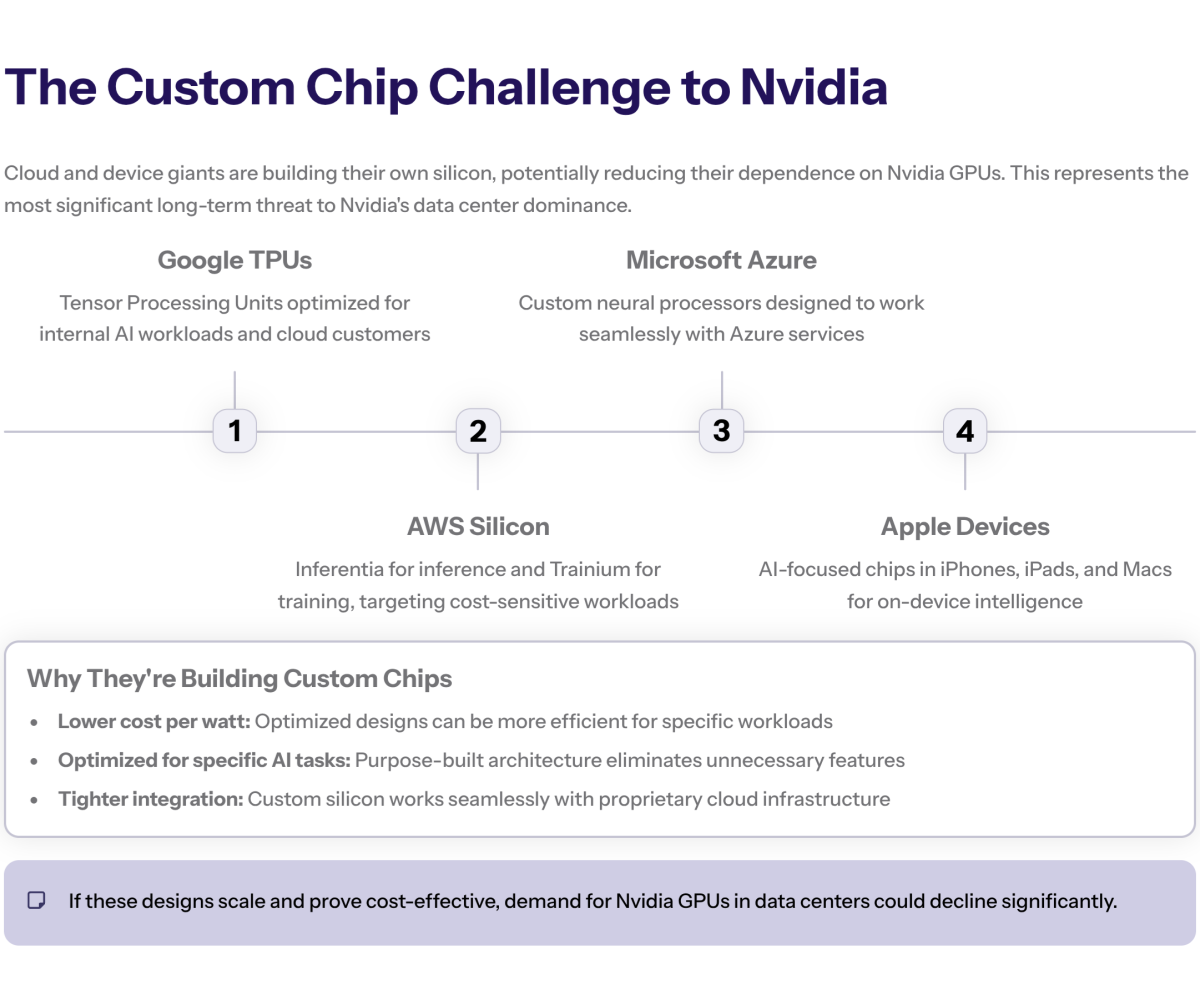

The Growing Competition in the AI Chip Market

The AI chip market is heating up as other tech giants, including Google, Amazon, Microsoft, and Apple, move aggressively into designing their own chips. Wary of Nvidia’s dominance, these companies aim to challenge it by reducing their reliance on Nvidia’s GPUs.

Google has developed TPUs (Tensor Processing Units) for its internal workloads, particularly generative AI, and also offers them to customers through Google Cloud.

Amazon Web Services (AWS) offers its Inferentia (inference) and Trainium (training) chips, targeting the same AI workloads that Nvidia chips currently dominate.

Microsoft has developed its own AI accelerator, Azure Maia, to run Microsoft’s AI services on in-house silicon.

Apple is expanding its custom silicon, from M-series PC chips to the Neural Engine, to power AI applications in consumer devices.

While none has yet matched Nvidia’s GPUs across the board, the strategic threat is real: if these tech giants succeed in loosening Nvidia’s grip, they could significantly reduce Nvidia’s data center revenue.

Threats from Custom Chips

Custom silicon is one of the most pressing threats. Custom chips can offer:

Better performance per watt, and lower cost per unit of work, than Nvidia’s cutting-edge GPUs on targeted workloads.

Optimized performance for very specific AI tasks (e.g., generative AI inference).

Tight integration with cloud services and cloud infrastructure.
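The first advantage listed above is about efficiency rather than raw speed. A short calculation shows why a chip with lower peak throughput can still come out ahead on performance per watt and on cost per unit of work. All figures below are hypothetical placeholders, not measurements of any real Nvidia or custom chip, and the `Accelerator` class is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Illustrative model of a chip; every field value used below is hypothetical."""
    name: str
    throughput: float   # units of work per second (e.g. inferences/s)
    power_watts: float  # average power draw under load
    hourly_cost: float  # dollars per hour to run the chip

    def perf_per_watt(self) -> float:
        # Work completed per second for each watt drawn.
        return self.throughput / self.power_watts

    def cost_per_million(self) -> float:
        # Dollars needed to finish one million units of work at full throughput.
        seconds = 1_000_000 / self.throughput
        return self.hourly_cost * seconds / 3600


if __name__ == "__main__":
    # Hypothetical placeholder figures, not measurements of any real product.
    chips = [
        Accelerator("general-purpose GPU", throughput=1000.0, power_watts=500.0, hourly_cost=3.6),
        Accelerator("custom inference chip", throughput=800.0, power_watts=250.0, hourly_cost=2.0),
    ]
    for chip in chips:
        print(f"{chip.name}: {chip.perf_per_watt():.2f} work/s per W, "
              f"${chip.cost_per_million():.2f} per million units")
```

With these made-up inputs the custom chip is 20% slower in raw throughput, yet delivers 3.2 units of work per second per watt versus 2.0 and costs about $0.69 per million units versus $1.00. That is the trade-off hyperscalers are betting on for narrow workloads; for training or varied workloads, a general-purpose GPU’s flexibility may outweigh it.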

If cloud providers scale these custom chips effectively, they could reduce demand for Nvidia’s GPUs in data centers. This is why Nvidia is countering with Nvidia AI Enterprise, a full software suite that makes switching harder.

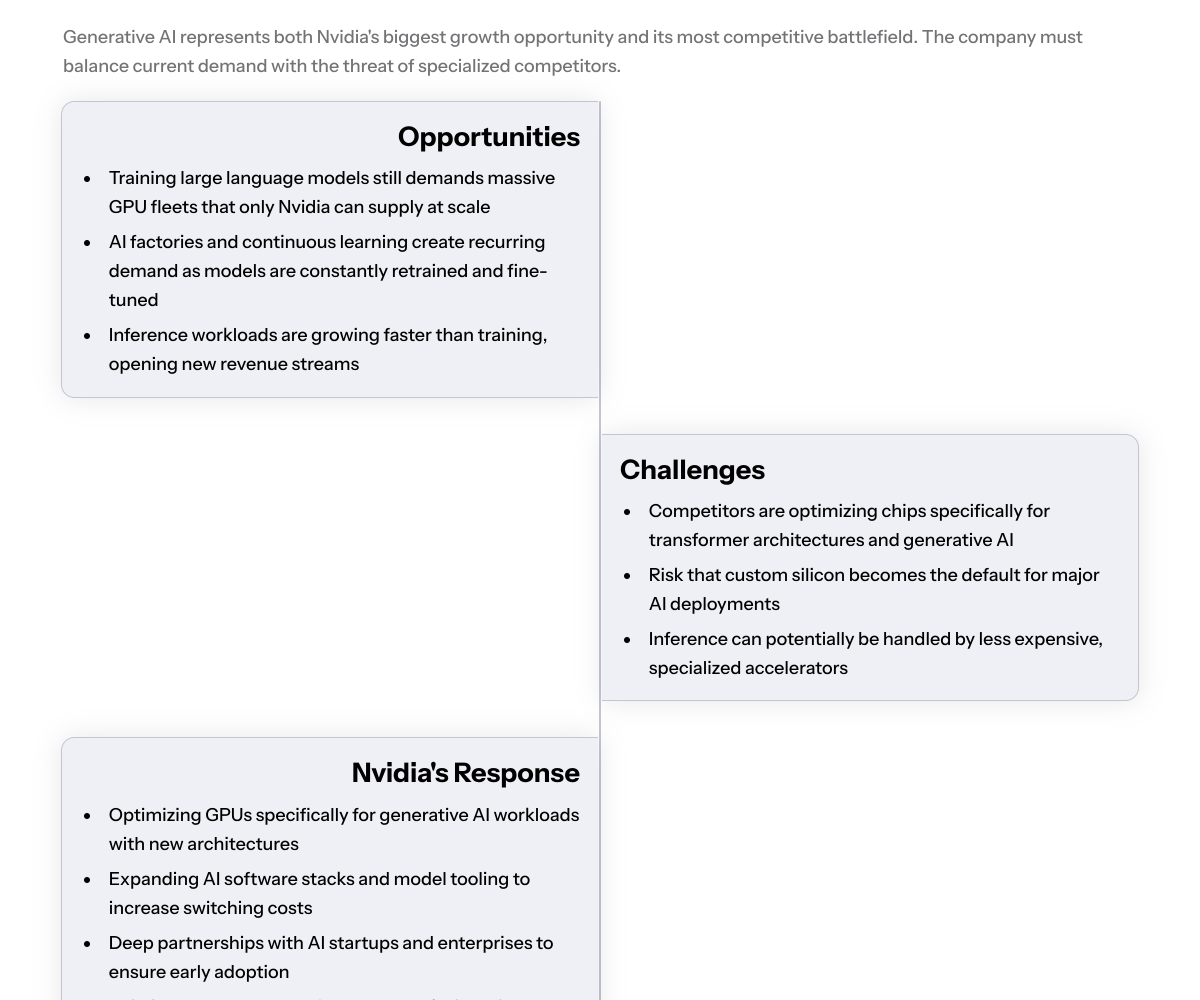

The Role of Generative AI in Nvidia’s Strategy

The generative AI boom has given Nvidia both an opportunity and a challenge.

Opportunities:

Training large AI models requires massive computing power, and Nvidia’s GPUs remain the gold standard.

Generative AI startups such as OpenAI, Anthropic, and Stability AI have relied heavily on Nvidia chips for training.

The trend toward AI factories and continuous learning systems supports long-term demand for AI accelerators.

Challenges:

Competitors may build AI infrastructure specifically tuned for generative AI workloads.

If custom chips from big tech companies become the default, Nvidia risks being sidelined in the next AI wave.

Nvidia’s response:

Aggressively optimize GPU architecture for generative AI workloads.

Expand AI research into large language models and AI software stacks.

Invest in strategic partnerships with both AI startups and enterprise AI customers.

Data Centers and Nvidia’s Revenue

One of the biggest drivers of Nvidia’s revenue is data center adoption. Nvidia’s data center revenue has surged as companies build AI infrastructure at scale.

Why data centers matter:

They represent the largest single segment of the AI chip market.

Nvidia’s general-purpose GPUs are versatile, powering both training and inference across industries.

The need for accelerated computing in data-heavy workloads (finance, healthcare, autonomous vehicles) supports continued demand.

But there are risks:

Tech giants running cloud platforms may favor their own chips, reducing their reliance on Nvidia.

Volatility in Nvidia’s stock price reflects fears about its dependence on a single segment (data centers).

If hyperscalers succeed with their own silicon, they could erode Nvidia’s competitive edge.

Strategic Paths Forward

So how can Nvidia maintain its competitive edge against rising competition?

Expand Nvidia AI Enterprise

Offer a complete AI stack: AI software, AI models, GPU hardware, and management tools.

Make switching away from Nvidia as costly and complex as possible.

Deepen Ecosystem Lock-in

Enhance the CUDA platform so AI developers keep building on Nvidia.

Provide developer tools, model hubs, and AI frameworks.

Energy Efficiency Leadership

Invest in GPU design breakthroughs that cut power draw per unit of work while scaling performance.

Position Nvidia chips as the most cost-efficient solution over the long term.

AI Factories and AI Infrastructure

Build out the AI factory concept, in which Nvidia’s GPUs act as industrial-scale engines for AI workloads.

Position Nvidia as the backbone of generative AI worldwide.

Strategic Alliances with Cloud Providers

Partner with Google Cloud, Microsoft Azure, and AWS, even as they build their own chips.

Secure integrated ecosystem deals where Nvidia remains essential in hybrid deployments.

Diversify Beyond Data Centers

Expand further into autonomous driving, edge AI, robotics, and PC chip markets.

Ensure that if data center demand dips, other verticals sustain growth.

Long-Term Challenges

Big tech companies will continue to challenge Nvidia with custom chips.

Governments may try to loosen Nvidia’s grip on the market by supporting alternative vendors.

Nvidia relies heavily on data centers, a segment that could shift rapidly if hyperscalers come to prefer their own chips.

Nvidia’s stock price could swing sharply with every shift in market share.

Conclusion: Nvidia’s Competitive Edge

Nvidia’s ability to maintain its competitive edge depends on its integrated ecosystem, which spans GPU architecture, AI research, strategic investments, and AI software platforms. While other tech giants aim to disrupt the market with custom chips, Nvidia’s dominance in AI workloads and its hold on AI developers give it a powerful lead.

The future of the AI chip market will hinge on:

How quickly competitors scale their own chips.

How effectively Nvidia continues to innovate in GPU technology.

Whether Nvidia AI Enterprise becomes the standard AI infrastructure for enterprises.

If Nvidia balances GPU innovation with ecosystem lock-in, it can sustain its dominance even in a market where big tech companies are fighting to carve out their own share.
