Mastering Data Transformation: Techniques, Tools, and Tips

Data transformation turns raw data into a useful format, crucial for effective data management and business analysis. By standardizing and cleaning data, organizations can make informed decisions and improve operational efficiency. This guide will take you through the process, techniques, and benefits of data transformation, helping you leverage your data better.

Key Takeaways

-

Data transformation is essential for enhancing data quality, improving decision-making, and facilitating operational efficiency through techniques like data cleansing, normalization, and mapping.

-

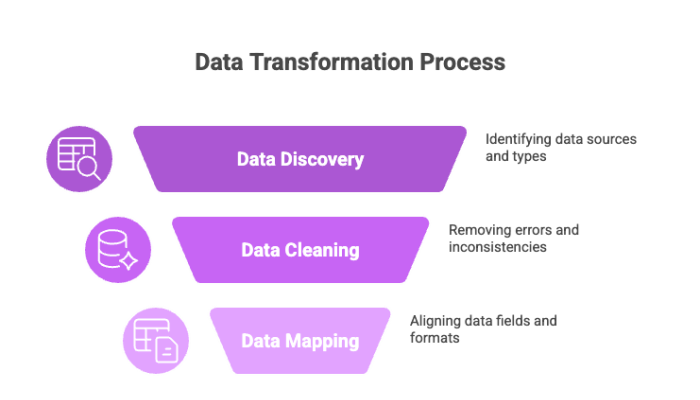

The data transformation process involves key steps such as data discovery, cleaning, and mapping, which are critical for ensuring data accuracy and consistency before analysis.

-

Organizations must consider aspects like cost, scalability, and data security when selecting data transformation tools, while also adhering to best practices for effective transformation.

Understanding Data Transformation

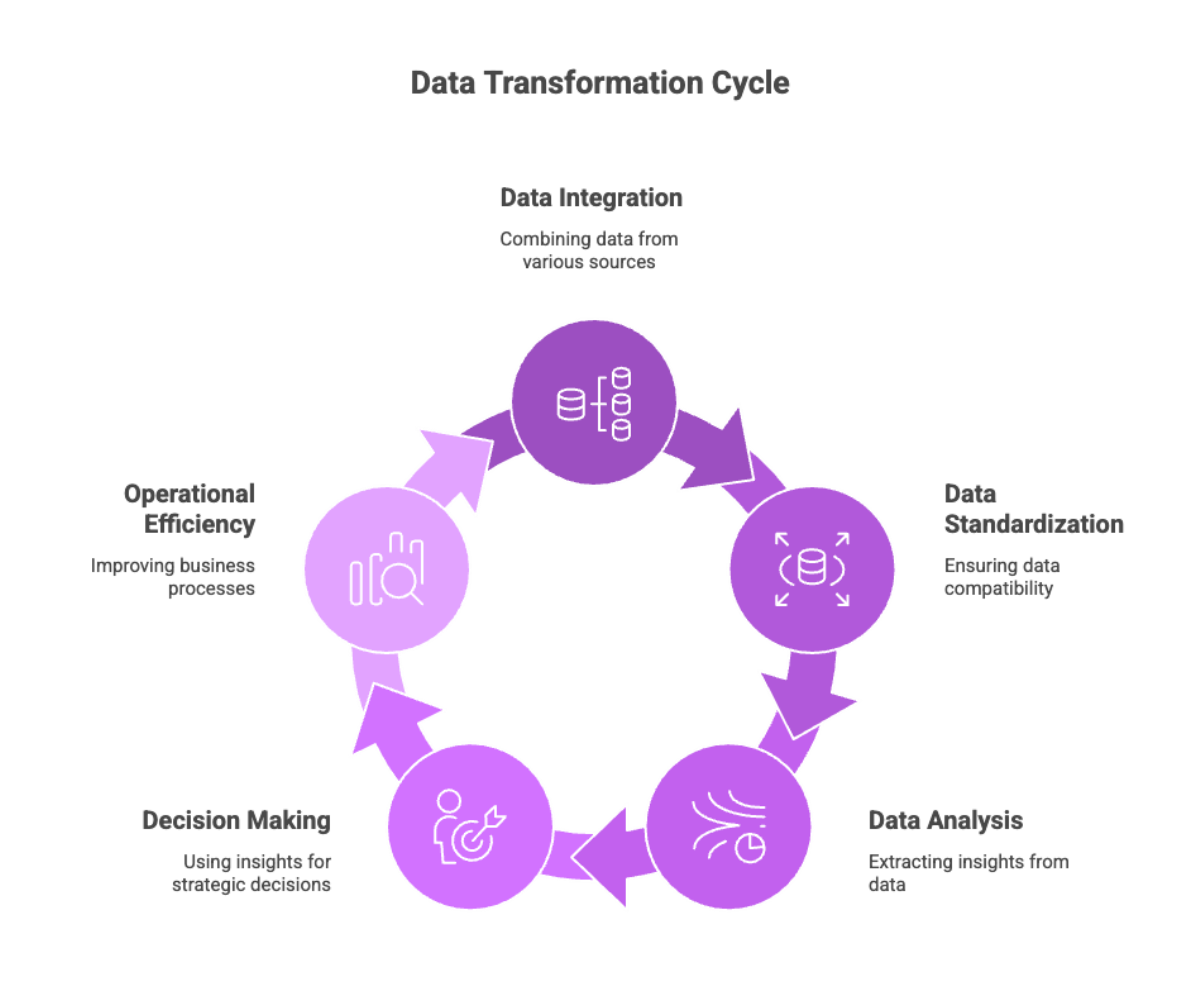

Data transformation is the process of converting raw data into a format that is more useful for business tasks. This transformation supports various aspects of data management, including data integration, data warehousing, and data analysis. Improved access to standardized data allows organizations to quickly and easily retrieve information for analysis, enhancing their ability to gain timely, data-driven insights.

Effective data transformation involves combining records from multiple tables and data sources to create cohesive data sets, ensuring compatibility between different data types, applications, and systems. Organizations can also transform data to enhance their operational efficiency.

The importance of data transformation in business cannot be overstated. It enhances business intelligence, decision-making, collaboration, and scalability. Organizations typically perform data transformation manually, through automation, or through a combination of both to handle large volumes of data efficiently.

Automation is particularly critical in data transformation processes, especially when dealing with large datasets, as it saves time and minimizes errors. Ultimately, data transformation organizes data effectively and enhances its quality for further analysis.

The Data Transformation Process

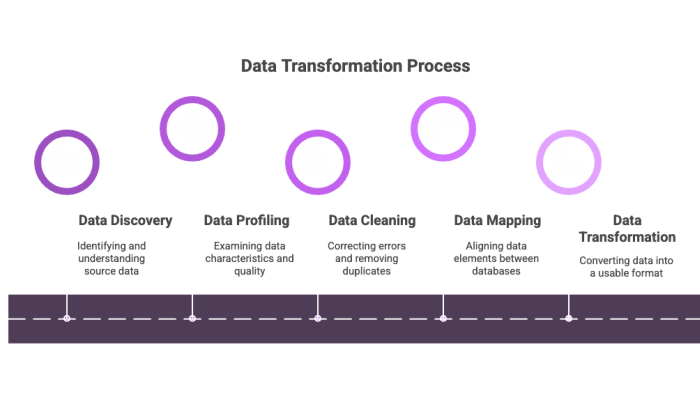

The data transformation process involves several key steps to convert raw data into a usable format. These steps include data discovery, data cleaning, and data mapping, each of which plays a crucial role in preparing data for analysis and ensuring its accuracy and consistency.

Initial steps such as data discovery and quality checks set the foundation for effective data transformation, while data mapping aligns data elements between databases. These processes are essential for transforming data and integrating standardized data from multiple sources seamlessly.

Data discovery

Data discovery aims to identify the source data and understand its characteristics to ensure effective transformation. During data profiling, the following should be examined to gain a comprehensive understanding of the data:

- Data types
- Volumes
- Value ranges
- Relationships
- Irregularities
- Duplicates

Profiling helps identify key data attributes and assess the quality of data assets, enabling organizations to spot data quality issues before transformation begins.

Understanding data relationships is also essential for effective data profiling, as it helps in organizing data for accurate analysis.
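The profiling checks listed above can be sketched in a few lines of Python. This is a minimal illustration, not a full profiling tool: the record layout (`id`, `country`, `amount`) and the helper names `profile` and `duplicate_count` are assumptions made for the example.

```python
from collections import Counter

def profile(rows):
    """Summarize each column of a list of dict records."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing values.
        present = [v for v in values if v not in (None, "")]
        numeric = [v for v in present
                   if isinstance(v, (int, float)) and not isinstance(v, bool)]
        report[col] = {
            "types": sorted({type(v).__name__ for v in present}),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "range": (min(numeric), max(numeric)) if numeric else None,
        }
    return report

def duplicate_count(rows):
    """Count rows that repeat an earlier row exactly."""
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    return sum(n - 1 for n in counts.values())

# Hypothetical sample data with a duplicate row, missing amounts,
# and inconsistent country codes.
rows = [
    {"id": 1, "country": "US", "amount": 120.0},
    {"id": 2, "country": "us", "amount": None},
    {"id": 2, "country": "us", "amount": None},
    {"id": 3, "country": "DE", "amount": 75.5},
]
report = profile(rows)
print(report["amount"])
print("duplicates:", duplicate_count(rows))
```

In this sample, the report flags two missing amounts and three distinct country spellings for what are only two countries, which is the kind of irregularity profiling is meant to surface before transformation begins.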

Data cleaning

Data cleaning (also called data cleansing) improves data quality by correcting errors, removing duplicates, and addressing missing values. This step is fundamental to the accuracy and reliability of the data and ensures that it is ready for analysis.

Common data cleaning techniques include removing duplicates, filling in missing values, correcting typos, and standardizing formats. Handling incomplete or malformed data is crucial because such data lowers data quality and reduces the reliability of insights. Techniques like data imputation (filling in missing values) and data exclusion (dropping affected records) are used to manage incomplete data, ensuring that the transformed data is of high quality and reliable for business intelligence purposes.
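As a rough sketch of these cleaning steps, the following Python function standardizes a text field, drops duplicates, and imputes missing numbers with the mean. The field names and the choice of mean imputation are assumptions for illustration; the right strategy depends on the data.

```python
from statistics import mean

def clean(rows):
    """Standardize formats, drop exact duplicates, and impute missing amounts."""
    seen = set()
    cleaned = []
    for row in rows:
        # Standardize formats: trim whitespace and upper-case country codes.
        fixed = dict(row, country=row["country"].strip().upper())
        key = (fixed["id"], fixed["country"], fixed["amount"])
        if key in seen:  # exact duplicate after standardization
            continue
        seen.add(key)
        cleaned.append(fixed)

    # Imputation: replace missing amounts with the mean of the known ones.
    known = [r["amount"] for r in cleaned if r["amount"] is not None]
    fill = mean(known) if known else None
    for r in cleaned:
        if r["amount"] is None:
            r["amount"] = fill
    return cleaned

# Hypothetical raw records.
raw = [
    {"id": 1, "country": " us ", "amount": 100.0},
    {"id": 1, "country": "US", "amount": 100.0},
    {"id": 2, "country": "de", "amount": None},
    {"id": 3, "country": "DE", "amount": 50.0},
]
cleaned = clean(raw)
for record in cleaned:
    print(record)
```

Note that the first two records only become duplicates after the country code is standardized, so the order of the steps matters. Data exclusion would instead drop the record with the missing amount rather than fill it.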

Data mapping

Data mapping is the process of creating a schema to guide the transformation process by defining how source elements correspond to target elements. This phase involves determining the current structure, required transformations, and mapping individual fields. The purpose of data mapping is to align data elements between databases, ensuring that the transformed data is consistent and accurate.
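One simple way to express such a mapping is a lookup table from source fields to target fields, each paired with a conversion. The sketch below assumes hypothetical source field names (`cust_nm`, `ord_dt`, `amt`) and a day/month/year source date format; real mappings are usually defined in a transformation tool or a configuration file.

```python
from datetime import datetime

# Hypothetical mapping: source field -> (target field, conversion function).
FIELD_MAP = {
    "cust_nm": ("customer_name", str.strip),
    "ord_dt": ("order_date",
               lambda s: datetime.strptime(s, "%d/%m/%Y").date().isoformat()),
    "amt": ("amount", float),
}

def apply_mapping(source_row, field_map=FIELD_MAP):
    """Build a target record from a source record using the mapping."""
    target = {}
    for source_field, (target_field, convert) in field_map.items():
        target[target_field] = convert(source_row[source_field])
    return target

source = {"cust_nm": " Ada Lovelace ", "ord_dt": "05/03/2024", "amt": "19.90"}
target = apply_mapping(source)
print(target)
```

Keeping the mapping as data rather than scattering it through code makes it easier to review and to validate against the target schema.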

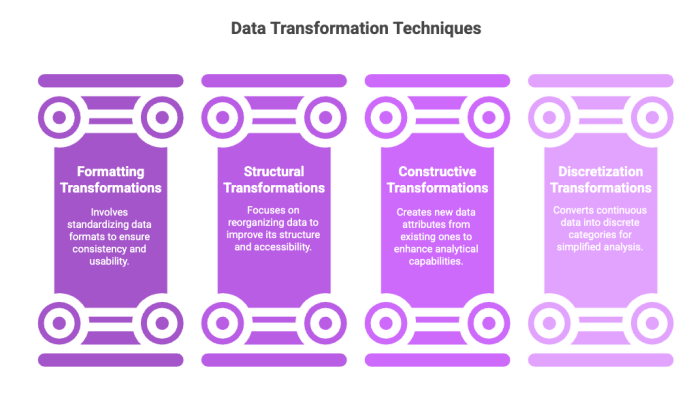

Types of Data Transformation Techniques

Data transformation techniques can be broadly categorized into four main types:

- Formatting transformations
- Structural transformations
- Constructive transformations
- Discretization transformations

These techniques enhance the usability of data across various applications, leading to improved business intelligence and analytics.

Examples of specific transformations include data normalization, data aggregation, and feature engineering. Each of these techniques plays a crucial role in the data transformation process, ensuring that data is prepared effectively for analysis and decision-making.
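Of the four categories, discretization is perhaps the least self-explanatory: it converts a continuous value into one of a small number of intervals. A minimal sketch follows, with bin edges and labels that are purely hypothetical.

```python
from bisect import bisect_right

# Hypothetical bin edges and labels for customer age.
EDGES = [18, 35, 65]
LABELS = ["minor", "young_adult", "adult", "senior"]

def discretize(value, edges=EDGES, labels=LABELS):
    """Return the label of the interval that contains value.

    Each edge is the inclusive lower bound of the next interval.
    """
    return labels[bisect_right(edges, value)]

ages = [12, 18, 34, 35, 70]
bands = [discretize(age) for age in ages]
print(bands)
```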

Data normalization

Data normalization is the process of standardizing formats, ranges, and values to reduce redundancy and improve integrity, making the data easier to analyze and use effectively.

This process is particularly crucial in machine learning, where many algorithms are sensitive to the scale of input features and need consistently scaled and formatted data to produce accurate predictions and analysis.
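Two common ways to put numeric features on a comparable scale are min-max scaling and z-score standardization. The sketch below shows both using only the Python standard library; the income figures are made-up sample values.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

def z_score(values):
    """Center values on 0 with a (population) standard deviation of 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

incomes = [20000, 40000, 60000, 100000]
scaled = min_max_scale(incomes)
standardized = z_score(incomes)
print(scaled)
print(standardized)
```

Min-max scaling keeps the original shape of the distribution but is sensitive to outliers, while z-scores are often a better fit when a feature has no natural upper or lower bound.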

Data aggregation

Data aggregation refers to the process of combining raw data from various sources into summary forms for analysis. The goal of aggregation is to create summaries, such as totals or averages, that represent underlying patterns and provide a high-level overview of the data for identifying trends.

Techniques used in data aggregation include summarization, averaging, and grouping, which are commonly applied in financial analysis and sales forecasting to provide valuable insights.
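Grouping, summarization, and averaging can be illustrated with a small sales example. The record layout (`region`, `amount`) is an assumption for the sketch.

```python
from collections import defaultdict
from statistics import mean

def aggregate_sales(rows):
    """Group sales rows by region and summarize each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["region"]].append(row["amount"])
    return {
        region: {"count": len(amounts), "total": sum(amounts), "average": mean(amounts)}
        for region, amounts in groups.items()
    }

# Hypothetical sales records.
sales = [
    {"region": "north", "amount": 100},
    {"region": "north", "amount": 300},
    {"region": "south", "amount": 50},
]
summary = aggregate_sales(sales)
for region, stats in summary.items():
    print(region, stats)
```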

Feature engineering

Feature engineering enhances model performance by deriving new attributes from existing data. This process is essential for enabling advanced analytical techniques and machine learning, as it helps create features that improve the accuracy and effectiveness of models.

By transforming raw data into meaningful features, feature engineering enables data scientists and analysts to extract valuable insights and make more informed decisions.
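As a small illustration of deriving new attributes from existing ones, the sketch below adds an order total and two date-based features to an order record. The field names and the chosen features are hypothetical; which features are useful depends on the model and the problem.

```python
from datetime import date

def add_features(order):
    """Return a copy of an order record with derived attributes added."""
    order_date = date.fromisoformat(order["order_date"])
    features = dict(order)
    features["order_total"] = order["quantity"] * order["unit_price"]
    features["order_weekday"] = order_date.weekday()  # Monday is 0, Sunday is 6
    features["is_weekend"] = order_date.weekday() >= 5
    return features

order = {"order_date": "2024-03-09", "quantity": 3, "unit_price": 4.5}
enriched = add_features(order)
print(enriched)
```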

ETL vs. ELT in Data Transformation

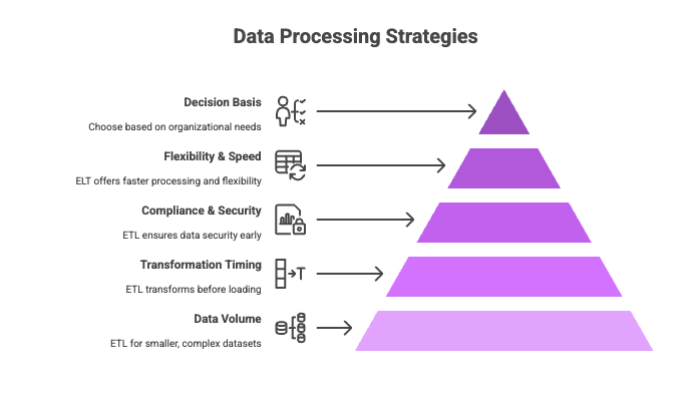

The primary distinction between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) lies in the sequence of operations. ETL transforms data before loading it into the target system, making it suitable for smaller, complex data sets that require extensive transformations. ELT, on the other hand, loads raw data first and transforms it afterward, which is ideal for handling larger datasets with speed and flexibility. The choice between ETL and ELT largely depends on business needs, data volume, and the capabilities of the target database.

ETL is often preferred in scenarios requiring strict data compliance and security, as it processes sensitive information before loading. ELT supports the retention of raw data in cloud data warehouses, enabling extensive historical data analysis and faster processing, since transformations run inside the warehouse after loading.

Ultimately, both methods have their advantages, and the decision to use ETL or ELT should be based on the specific requirements and constraints of the organization.
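The difference in ordering can be made concrete with a toy example. The sketch below uses an in-memory SQLite database as a stand-in for the target system, which is an assumption for illustration only; table and column names are hypothetical. In the ETL function the cleanup happens in application code before loading, while in the ELT function the raw rows are loaded first and the cleanup runs as SQL inside the database.

```python
import sqlite3

# Hypothetical extracted rows: untrimmed names and amounts stored as text.
RAW = [("  alice ", "10.5"), ("BOB", "3")]

def etl(conn, raw):
    """ETL: transform in application code, then load only the clean rows."""
    conn.execute("CREATE TABLE customers (name TEXT, spend REAL)")
    transformed = [(name.strip().lower(), float(spend)) for name, spend in raw]
    conn.executemany("INSERT INTO customers VALUES (?, ?)", transformed)

def elt(conn, raw):
    """ELT: load raw rows as-is, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_customers (name TEXT, spend TEXT)")
    conn.executemany("INSERT INTO raw_customers VALUES (?, ?)", raw)
    conn.execute(
        "CREATE TABLE customers AS "
        "SELECT lower(trim(name)) AS name, CAST(spend AS REAL) AS spend "
        "FROM raw_customers"
    )

def run(pipeline):
    """Run a pipeline against a fresh database; return its rows and table names."""
    conn = sqlite3.connect(":memory:")
    pipeline(conn, RAW)
    rows = conn.execute(
        "SELECT name, spend FROM customers ORDER BY name").fetchall()
    tables = [r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    conn.close()
    return rows, tables

etl_rows, etl_tables = run(etl)
elt_rows, elt_tables = run(elt)
print(etl_rows, etl_tables)
print(elt_rows, elt_tables)
```

Both pipelines produce the same `customers` table, but only the ELT run keeps the untouched `raw_customers` table in the target, which is what makes later re-transformation and historical analysis possible.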

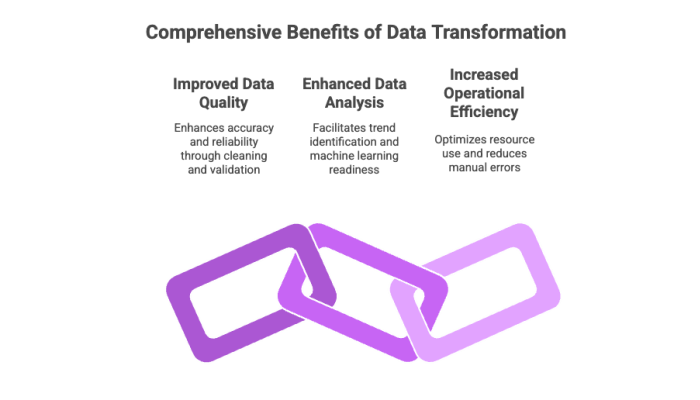

Benefits of Data Transformation

Data transformation provides many advantages that improve the overall efficiency of data management and analysis:

- Improves data quality
- Ensures consistency across different systems
- Uses techniques like data cleansing and normalization to enhance the accuracy of insights derived from data

These benefits support better decision-making and business intelligence.

Data transformation also facilitates the integration of diverse data sources, enabling seamless data merging and improved operational efficiency. The interconnected nature of improved data quality, effective decision-making, and operational efficiency highlights the comprehensive benefits of data transformation.

Improved data quality

High-quality data is essential for accurate decision-making and effective analysis. Data cleaning enhances quality by correcting errors, addressing missing values, and removing invalid data, ensuring that the data is reliable and accurate.

Data validation checks are critical for maintaining data integrity and ensuring completeness and accuracy. By implementing data cleaning and validation processes, organizations can significantly enhance the overall quality of their data, leading to more trustworthy insights and better business outcomes.

Enhanced data analysis

Structuring raw data through transformation equips organizations to identify trends effectively. Normalization is crucial for machine learning models that require consistent input feature scales, ensuring that the data is ready for advanced analytics.

Data aggregation provides a summarized view that can reveal trends and patterns in the underlying data values. Combined with data visualization, aggregated data points enable organizations to make data-driven decisions with greater confidence.

Increased operational efficiency

Automation through data transformation contributes to reducing manual errors and optimizing resource use. Automating data processes significantly decreases errors due to manual entry and oversight, leading to more reliable data management.

Automation allows for better allocation of resources, as fewer human hours are required for data processing tasks, ultimately enhancing operational efficiency.

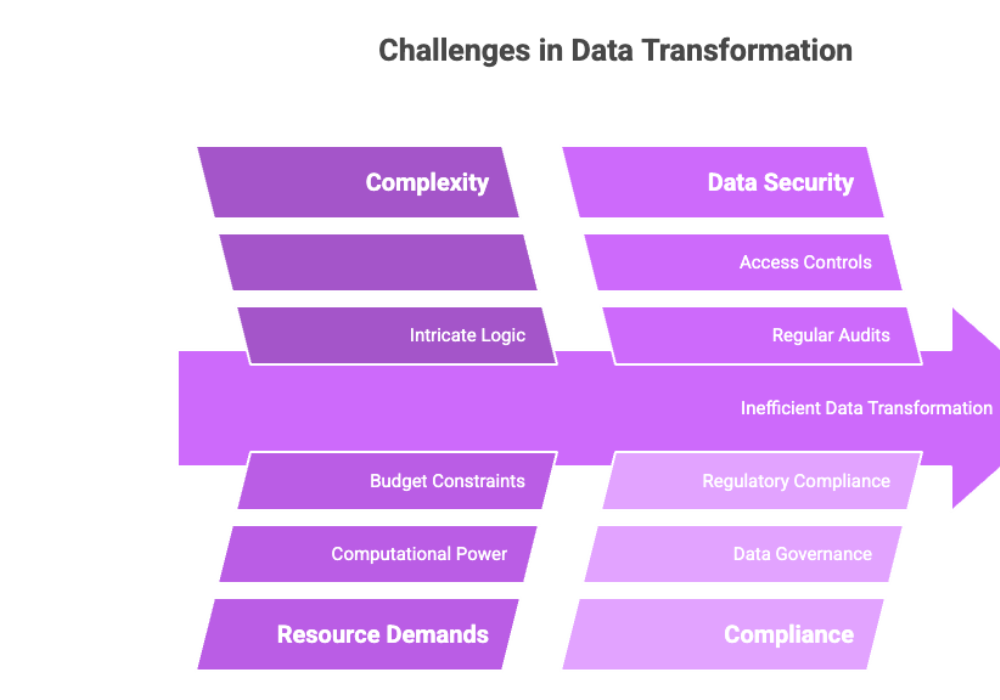

Challenges in Data Transformation

The data transformation process is not without its challenges. Complexity, resource demands, and data variety are major hurdles that organizations face. The transformation process becomes more complicated with large data volumes and the need for compliance with data governance regulations. Additionally, data transformation often requires skilled professionals and robust infrastructure to meet its demands.

Utilizing automation can enhance the efficiency of the data transformation process, addressing some of the challenges encountered.

Handling complex data transformations

Dealing with varied and multi-structured data demands intricate logic and the ability to adapt that logic effectively. A deep understanding of data context and transformation types, combined with the ability to use automation tools, is critical for effective data transformation.

Regular validation of transformed data supports ongoing data governance and quality assurance, ensuring that the data remains accurate and reliable throughout the transformation process.

Ensuring data security

Data transformation processes must include robust access controls to prevent unauthorized usage. Regular audits of data transformation practices bolster data security and ensure compliance with data governance regulations. Protecting sensitive data is crucial to maintain confidentiality and integrity during the transformation process, making access controls and audits key strategies for ensuring data security.

Resource demands

Data transformation is considered expensive because tools, infrastructure, and expertise can consume a significant share of the budget. Transforming large datasets requires heavy computational power, which can slow down other programs and affect overall performance.

Performing transformations in an on-premises data warehouse can further impact operations, highlighting the need for optimized resource allocation to maintain efficiency.

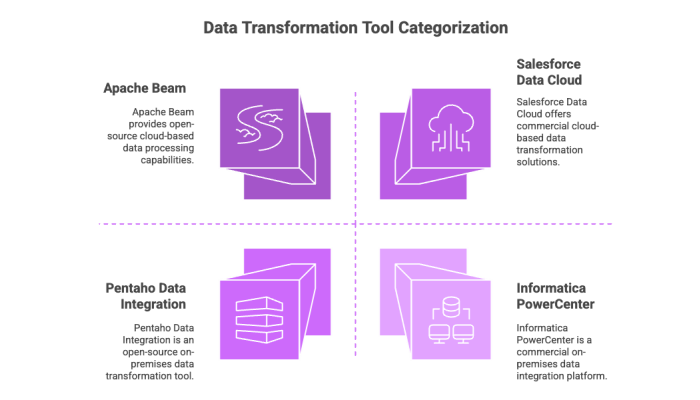

Choosing the Right Data Transformation Tools

When selecting data transformation tools, organizations should consider factors such as cost, scalability, and compatibility with their current infrastructure. Businesses should utilize data transformation tools for better data management and operational efficiency. These tools can be categorized into commercial, open-source, on-premises, and cloud-based options, each offering unique advantages based on organizational needs. Utilizing advanced tools can simplify the handling of diverse data types and enhance the overall transformation process.

Adopting third-party services can further enhance the data conversion and migration process, making it more efficient. Testing tools through cross-departmental proof-of-concept can provide valuable insights into their effectiveness and suitability for specific tasks.

Automation in data transformation tools, for example through code generation, minimizes the need for manual transformation coding, streamlining the transformation process and providing significant operational advantages.

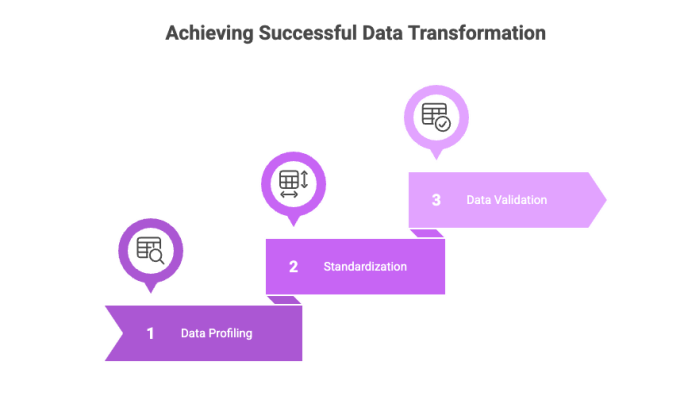

Best Practices for Effective Data Transformation

Adhering to best practices is essential for successful data transformation. This includes conducting thorough data profiling, standardizing data types and naming conventions, and validating transformed data. These practices ensure that the data transformation process is efficient and effective, leading to high-quality, reliable data for analysis and decision-making.

Iterative testing and adjustments are often necessary to refine the transformation process and address any issues that arise. Cross-departmental proof-of-concept tests can also help evaluate the effectiveness of data transformation tools and practices.

Conduct thorough data profiling

Conducting thorough data profiling is the first step in any data transformation process. Data profiling helps in assessing data quality, identifying inaccuracies, and understanding data complexity before starting the transformation. Effective data profiling increases the efficiency and effectiveness of data transformations, ensuring that the data is ready for the subsequent steps.

Standardize data types and naming conventions

Consistency in data types and naming conventions is vital for seamless data integration. Consistent data types help streamline data integration and reduce errors during transformation. Data validation checks should include assessing adherence to predefined business rules, ensuring that the transformed data is accurate and reliable.

Implementing standardized data types and naming conventions leads to improved data accuracy and operational efficiency.
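A minimal sketch of this practice follows: column names are converted to a single convention (snake_case is assumed here) and each value is cast to a declared type. The `TYPES` table and the field names are hypothetical.

```python
import re

def to_snake_case(name):
    """Convert a column name such as 'CustomerID' or 'Order Date' to snake_case."""
    # Replace runs of non-alphanumeric characters with a single underscore.
    name = re.sub(r"[^0-9A-Za-z]+", "_", name.strip())
    # Insert an underscore at lower-to-upper boundaries (e.g. 'rI' in 'CustomerID').
    name = re.sub(r"([a-z0-9])([A-Z])", r"\1_\2", name)
    return name.strip("_").lower()

def standardize(row, types):
    """Rename keys to snake_case and cast each value to its declared type."""
    renamed = {to_snake_case(key): value for key, value in row.items()}
    return {key: types[key](value) for key, value in renamed.items()}

# Hypothetical target schema: column name -> type constructor.
TYPES = {"customer_id": int, "order_date": str, "total_amount": float}

row = {"CustomerID": "42", "Order Date": "2024-03-05", "total-amount": "19.5"}
standardized = standardize(row, TYPES)
print(standardized)
```

A column that is missing from `TYPES` raises a `KeyError` in this sketch, which is a deliberate choice: an unknown column is surfaced immediately instead of passing through unchecked.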

Validate transformed data

During the transformation process, data validation plays a crucial role. It ensures the accuracy and quality of the data. This involves verifying:

- Data types
- Formats
- Accuracy
- Consistency
- Uniqueness

These checks ensure that the transformed data meets the desired format standards.

Data validation should confirm adherence to naming conventions and data types and check for missing, inaccurate, or duplicate data. Missing, inaccurate, or duplicate data points indicate a flaw in the data transformation process, highlighting the need for thorough validation.
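The checks described above (types, formats, uniqueness, and missing values) might be sketched as a function that returns a list of problems rather than stopping at the first one. The field names, the ISO date format, and the rule that amounts must be non-negative are assumptions for the example.

```python
import re

DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")

def validate(rows):
    """Return (row_index, problem) pairs; an empty list means all checks passed."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Type and uniqueness check on the identifier.
        if not isinstance(row.get("customer_id"), int):
            problems.append((i, "customer_id is missing or not an integer"))
        elif row["customer_id"] in seen_ids:
            problems.append((i, "duplicate customer_id"))
        else:
            seen_ids.add(row["customer_id"])
        # Format check on the date.
        if not DATE_PATTERN.match(str(row.get("order_date", ""))):
            problems.append((i, "order_date is not in YYYY-MM-DD format"))
        # Missing-value and range check on the amount.
        amount = row.get("total_amount")
        if not isinstance(amount, (int, float)) or amount < 0:
            problems.append((i, "total_amount is missing or negative"))
    return problems

# Hypothetical transformed records, two of which contain flaws.
rows = [
    {"customer_id": 1, "order_date": "2024-03-05", "total_amount": 19.5},
    {"customer_id": 1, "order_date": "05/03/2024", "total_amount": 10.0},
    {"customer_id": 2, "order_date": "2024-03-06", "total_amount": None},
]
problems = validate(rows)
for index, problem in problems:
    print(index, problem)
```

Collecting every problem in one pass gives a fuller picture of where the transformation went wrong than failing on the first bad record.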

Summary

Mastering data transformation is crucial for leveraging data assets effectively. By understanding the data transformation process, employing various techniques, and choosing the right tools, organizations can significantly enhance data quality, analysis, and operational efficiency.

Overcoming challenges through best practices and robust security measures ensures that data transformation efforts lead to reliable and valuable insights. As we navigate the evolving landscape of data management, embracing these practices will empower organizations to make data-driven decisions with confidence.

Frequently Asked Questions

This FAQ section addresses common questions about data transformation: what it is, why it matters for businesses, the main steps in the process, how ETL and ELT differ, and the challenges organizations most often encounter.

What is data transformation?

Data transformation is the process of converting raw data into a more usable format for business tasks, enhancing data integration, warehousing, and analysis. This conversion is essential for deriving meaningful insights and enabling effective decision-making.

Why is data transformation important for businesses?

Data transformation is crucial for businesses as it improves decision-making and collaboration by ensuring that data is accurate and consistent, ultimately enabling effective analysis and scalability.

What are the main steps in the data transformation process?

The main steps in the data transformation process are data discovery, data cleaning, and data mapping, which are essential for preparing data for effective analysis. Each step is critical to ensuring the integrity and usability of the data.

How do ETL and ELT differ in data transformation?

ETL transforms data prior to loading it into the target system, whereas ELT loads raw data first and performs transformations afterward. This fundamental difference makes each method suitable for varying data volumes and business requirements.

What are some common challenges in data transformation?

Common challenges in data transformation include managing complex transformations, ensuring data security, and addressing high resource demands throughout the process.