Mastering Local AI Agents: Practical Tips for Effective Implementation

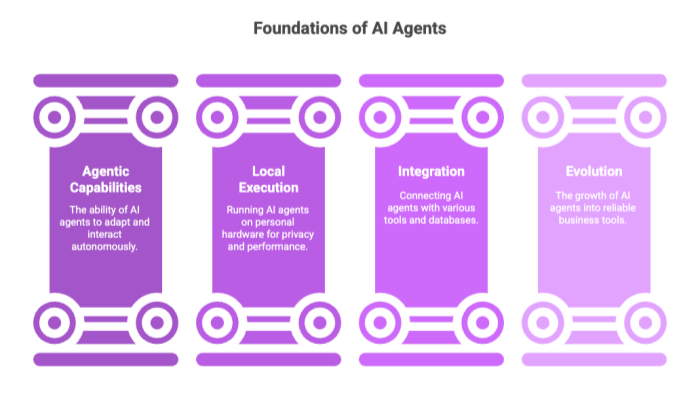

In the world of artificial intelligence (AI), AI agents are quickly becoming essential tools for both individuals and enterprises. These autonomous agents are self-directed programs designed to perform many kinds of tasks, from data analysis and image generation to natural language processing and complex reasoning.

Unlike traditional static systems, AI agents have agentic capabilities: they can adapt to inputs, interact with external tools, and execute workflows independently. When these agents run locally on your own hardware, your data stays on your machine, requests avoid round-trips to a remote service, and you no longer depend on third-party cloud providers.

A basic AI agent can be as simple as a script that answers questions using a language model, while more advanced versions integrate with APIs, vector databases, and external tools to act as digital co-pilots. With the right architecture, local AI agents can mature into reliable components that augment business processes or personal productivity.

Understanding AI Models

At the core of every agent is an AI model: a computational system that generates responses, predicts patterns, and handles complex tasks. Among these, large language models (LLMs) have been the breakthrough technology.

Large Language Models and Their Role

Large language models like GPT, Llama, and Mistral use billions of parameters to process natural language, enabling agents to interpret and answer questions, summarize documents, or even write code.

An LLM can be fine-tuned for domain-specific applications, from medical diagnostics to customer service automation.

With retrieval-augmented generation (RAG), an LLM is paired with a vector store (or vector database): relevant passages are first retrieved from an external knowledge base and then added to the prompt, so the model answers with that information in front of it.
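A minimal sketch of the augmentation step, assuming the passages have already been retrieved: the hypothetical helper build_rag_prompt numbers each passage and places it ahead of the question so the model can cite its sources. The prompt wording is illustrative, not taken from any particular framework.

```python
def build_rag_prompt(question: str, passages: list[str]) -> str:
    """Assemble an augmented prompt from retrieved passages (hypothetical helper)."""
    # Number each passage so the model can cite it as [1], [2], ...
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "Cite sources by their number.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_rag_prompt(
    "What port does the service use?",
    ["The service listens on port 8080.", "Logs are stored in /var/log/app."],
)
print(prompt)
```

The resulting string is what gets sent to the LLM in place of the bare question.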

Semantic Search and Vector Embeddings

Semantic search lets agents retrieve information based on meaning rather than exact keyword matches.

Vector embeddings map text to points in a numerical space where similar meanings lie close together, so a vector store can quickly compare a query against stored documents (commonly with cosine similarity).

This means a local AI agent can answer a query from a large local knowledge base without needing an internet connection.
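The mechanics of a vector store can be sketched in a few lines. This is an illustration under a loud assumption: toy_embed is a bag-of-words stand-in, so it only matches shared words; a real agent would plug in an embedding model, which is what captures meaning. The ranking by cosine similarity is the same either way.

```python
import math
import re
from collections import Counter
from typing import Callable

Vector = dict[str, float]


def toy_embed(text: str) -> Vector:
    """Stand-in embedding (word counts). Replace with a real embedding model."""
    return {w: float(c) for w, c in Counter(re.findall(r"[a-z0-9]+", text.lower())).items()}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Minimal vector store: keeps (text, vector) pairs and ranks them by similarity."""

    def __init__(self, embed: Callable[[str], Vector] = toy_embed):
        self.embed = embed
        self.items: list[tuple[str, Vector]] = []

    def add(self, text: str) -> None:
        self.items.append((text, self.embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = self.embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = InMemoryVectorStore()
for doc in ["Ollama runs models locally.", "Vector stores hold embeddings.", "Bananas are yellow."]:
    store.add(doc)
print(store.search("how do I run models locally", k=1))
```

Because the embedding function is a constructor parameter, swapping the toy for a real model does not change the search code.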

Choosing the Right Model

AI models vary widely in size and capability, so developers must weigh model size against their hardware. Larger models offer more nuanced reasoning but need powerful GPUs with plenty of memory, while smaller models can run effectively on a local machine with less computing power.
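A common back-of-the-envelope check for this trade-off: the weights alone need roughly parameters × bits per weight ÷ 8 bytes, so a 7-billion-parameter model needs about 14 GB at 16-bit precision and about 3.5 GB at 4-bit. The sketch below encodes that rule; the 20% overhead for the KV cache, activations, and runtime buffers is an assumed margin, and real usage varies with context length and inference engine.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for model weights alone, in decimal GB: parameters x bytes per parameter."""
    return params_billion * bits_per_weight / 8


def fits_on_device(params_billion: float, bits_per_weight: int,
                   device_gb: float, overhead: float = 1.2) -> bool:
    # overhead is an assumed safety margin, not a measured value.
    return estimate_weight_memory_gb(params_billion, bits_per_weight) * overhead <= device_gb


print(estimate_weight_memory_gb(7, 16))  # 14.0
print(estimate_weight_memory_gb(7, 4))   # 3.5
print(fits_on_device(7, 4, device_gb=8))
print(fits_on_device(70, 4, device_gb=24))
```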

Setting Up a Local Machine for AI

Before you deploy AI agents, prepare your local machine to run LLMs and manage local models.

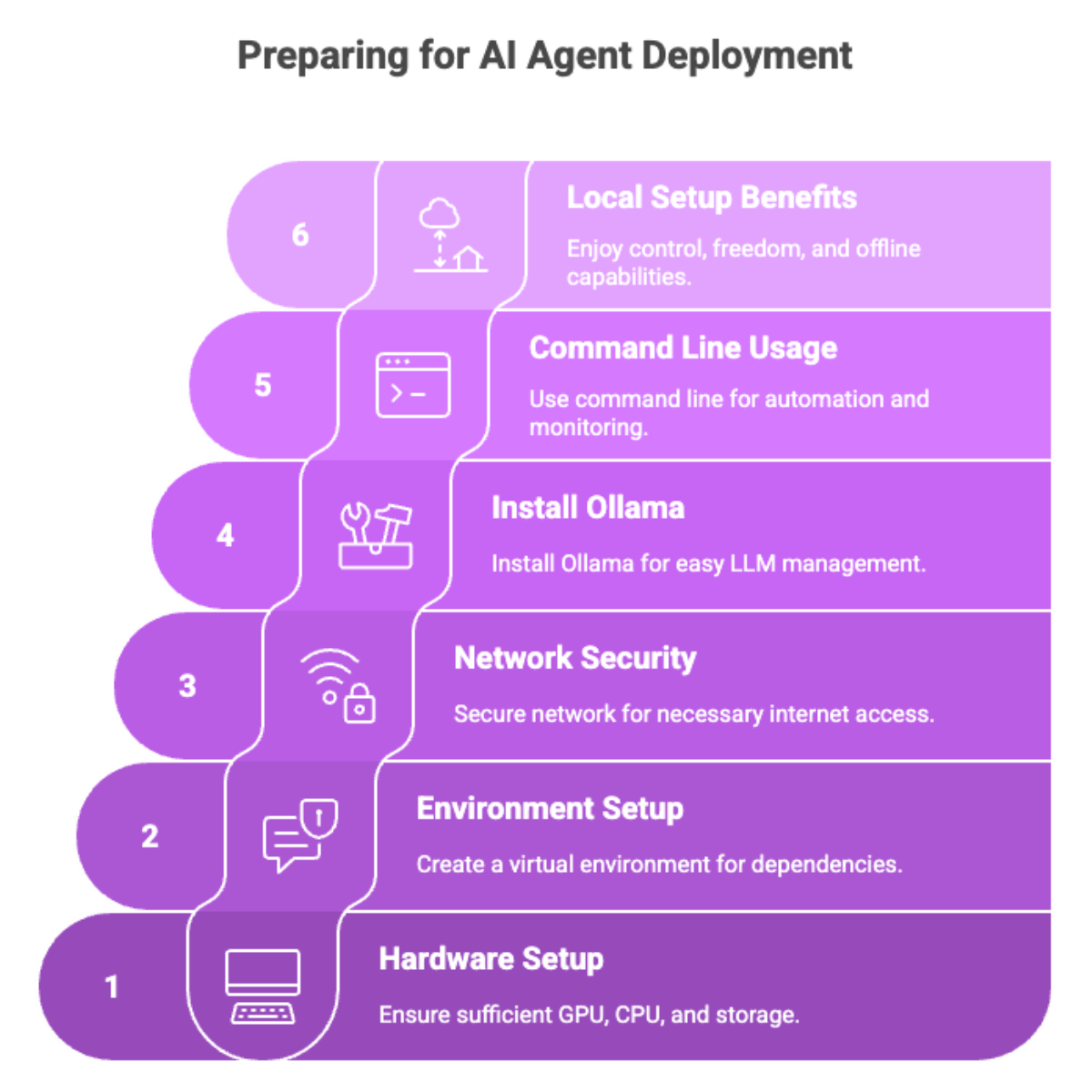

Hardware and Environment Setup

Ensure your machine has sufficient GPU memory, CPU cores, and storage space.

Set up a virtual environment (e.g., with Conda or venv) to manage dependencies cleanly.

Keep network security in mind: even a local setup needs internet access at times, for example to download pre-trained models or libraries.
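Before downloading multi-gigabyte models, a quick preflight check can confirm the basics. This is a sketch using only the standard library; the 20 GB free-disk threshold is an assumption to adjust for the models you plan to pull. GPU memory is not visible from the standard library, so check it with vendor tools such as nvidia-smi on NVIDIA hardware.

```python
import os
import platform
import shutil
import sys


def preflight(min_free_gb: float = 20.0, path: str = ".") -> dict:
    """Summarize local resources before downloading models (threshold is an assumption)."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return {
        "python": platform.python_version(),
        # In a venv, sys.prefix points at the environment; sys.base_prefix at the base install.
        "in_venv": sys.prefix != sys.base_prefix,
        # `conda activate` sets CONDA_DEFAULT_ENV to the active environment's name.
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
        "cpu_cores": os.cpu_count(),
        "free_disk_gb": round(free_gb, 1),
        "enough_disk": free_gb >= min_free_gb,
    }


if __name__ == "__main__":
    for key, value in preflight().items():
        print(f"{key}: {value}")
```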

Installing Essential Tools

Installing Ollama

Ollama is one of the easiest ways to run LLMs locally.

It provides a command-line tool that hides most of the setup complexity, letting you download, load, and test local models quickly.
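Besides the command-line tool, Ollama serves a local HTTP API (by default on port 11434), which is how scripts and agents usually talk to it. The sketch below calls the /api/generate endpoint with only the standard library. It assumes the Ollama server is running and that the example model llama3.2 has already been pulled; substitute any model you have installed.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    # "stream": False asks for a single JSON object instead of a stream of chunks.
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send one prompt to a locally running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Assumes the server is up and the model is available locally.
    print(generate("llama3.2", "Explain vector embeddings in one sentence."))
```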

Command Line and Workflow

Working from the command line lets you script tasks, schedule AI agents, and monitor performance.

Commands like ollama run let you test simple agents and iterate before scaling up.
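To automate the same command and keep an eye on performance, a script can invoke ollama run and time each call. Passing the prompt as an argument makes ollama run print one answer and exit instead of opening an interactive session. The model name is an example; the helper names are hypothetical.

```python
import shutil
import subprocess
import time


def build_ollama_cmd(model: str, prompt: str) -> list[str]:
    # With a prompt argument, `ollama run` answers once and exits.
    return ["ollama", "run", model, prompt]


def run_once(model: str, prompt: str) -> tuple[str, float]:
    """Run one prompt through the Ollama CLI; return the output and wall-clock seconds."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama is not on PATH")
    start = time.perf_counter()
    result = subprocess.run(
        build_ollama_cmd(model, prompt), capture_output=True, text=True, check=True
    )
    return result.stdout.strip(), time.perf_counter() - start


if __name__ == "__main__":
    answer, seconds = run_once("llama3.2", "Name three uses of a local AI agent.")
    print(f"{seconds:.2f}s\n{answer}")
```

Logging the returned latency on every run gives a simple baseline to compare models against.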

Benefits of a Local Setup

More control over your system and data.

Freedom from relying solely on centralized cloud services.

Ability to build user-friendly interfaces that work in local or offline environments.

With this foundation, developers can begin building agents that are both practical and scalable.

Creating Effective Multi-Agent Workflows

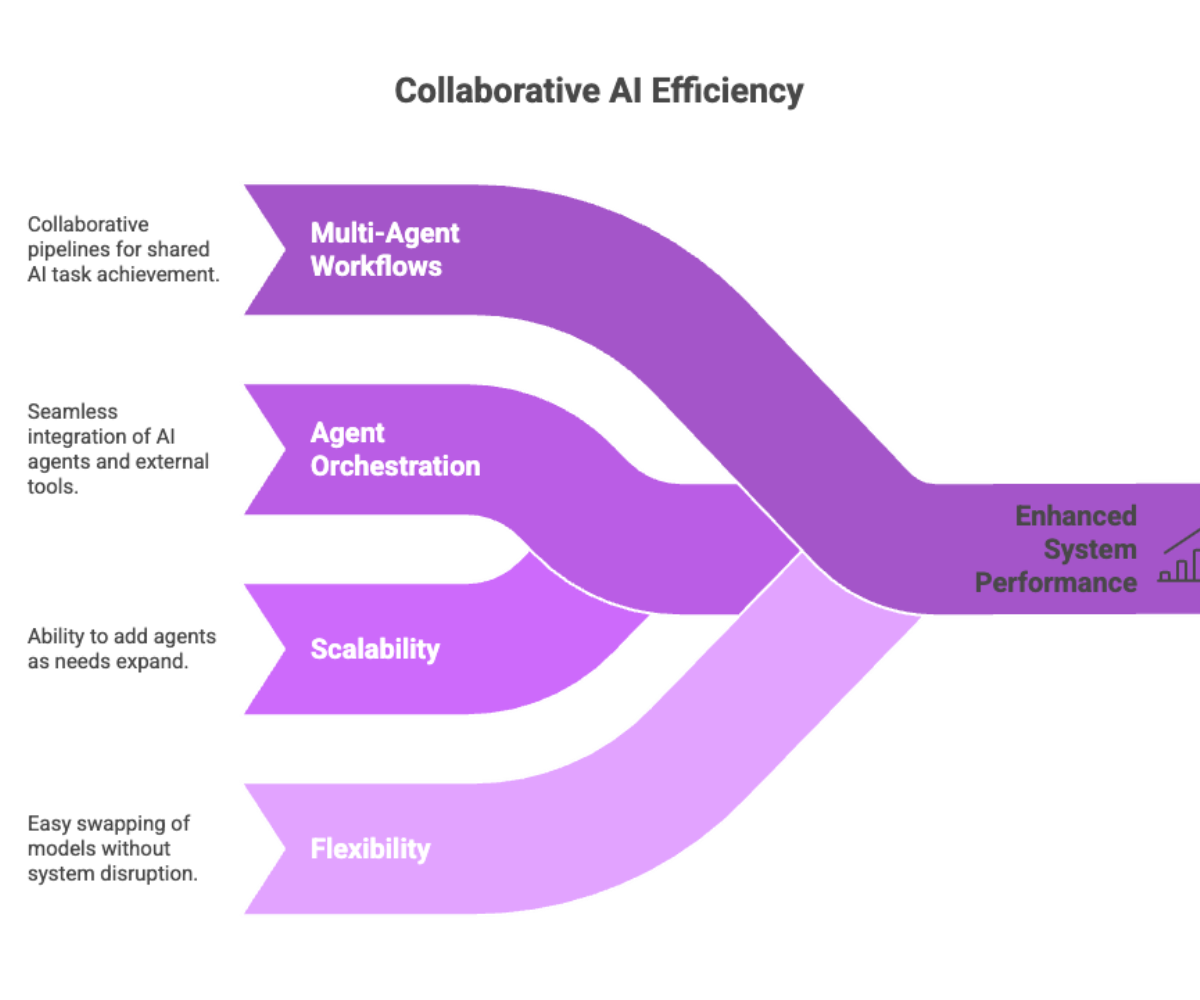

One of the biggest advantages of AI agents is their ability to work together. Instead of building a single agent to handle everything, developers can create multiple agents with specialized roles. This mirrors how teams operate in real life: each agent brings its own capabilities and handles a specific part of a complex task.

What Are Multi-Agent Workflows?

Multi-agent workflows are pipelines in which multiple agents collaborate toward a shared outcome.

Example: In a content pipeline, one agent could handle semantic search in a vector store, another could generate responses, and a third could verify factual accuracy.

Delegating work to specialized agents can reduce bottlenecks and improve overall system performance.
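The content-pipeline example above can be sketched as three small agents passing one task object along. Everything here is a stand-in chosen so the sketch runs on its own: the search agent uses simple word overlap in place of semantic search, the writer returns a retrieved passage in place of an LLM call, and the checker accepts a draft only if a source supports it verbatim. The names and the two-sentence knowledge base are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    question: str
    passages: list[str] = field(default_factory=list)
    draft: str = ""
    verified: bool = False


KNOWLEDGE = [
    "Ollama serves local models on port 11434 by default.",
    "LangGraph builds agent workflows as graphs.",
]


def search_agent(task: Task) -> Task:
    # Stand-in for semantic search: keep passages sharing a word with the question.
    words = set(task.question.lower().split())
    task.passages = [p for p in KNOWLEDGE if words & set(p.lower().split())]
    return task


def writer_agent(task: Task) -> Task:
    # Stand-in for an LLM call: answer with the first retrieved passage.
    task.draft = task.passages[0] if task.passages else "I don't know."
    return task


def checker_agent(task: Task) -> Task:
    # Accept the draft only if a retrieved passage contains it.
    task.verified = any(task.draft in p for p in task.passages)
    return task


def run_pipeline(question: str) -> Task:
    task = Task(question)
    for agent in (search_agent, writer_agent, checker_agent):
        task = agent(task)
    return task


result = run_pipeline("Which port does Ollama use?")
print(result.draft, result.verified)
```

Each agent can later be replaced by a real model call without changing the pipeline loop.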

Agent Orchestration

Agent orchestration is the process of managing how agents communicate, pass data, and make decisions together.

Frameworks like LangGraph let you connect local models, external APIs, and vector databases as steps in a single graph-structured workflow.

With orchestration, developers can make sure each agent receives the information it needs, avoiding duplicated work and wasted processing.
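LangGraph models a workflow as nodes that update a shared state, with conditional edges choosing the next node. The framework-free sketch below reproduces that idea in plain Python; it does not use LangGraph's API. The routing rule (look up documents only for who/what/when/where questions) is a hypothetical heuristic that shows how orchestration can skip unnecessary work.

```python
from typing import Callable, Optional

State = dict
Node = Callable[[State], dict]


class Orchestrator:
    """Nodes read one shared state and return updates; a router picks the next node."""

    def __init__(self, nodes: dict[str, Node], router: Callable[[str, State], Optional[str]]):
        self.nodes = nodes
        self.router = router

    def run(self, start: str, state: State, max_steps: int = 10) -> State:
        current: Optional[str] = start
        for _ in range(max_steps):
            updates = self.nodes[current](state)
            # Merge the node's updates into a new state and record which node ran.
            state = {**state, **updates, "trace": state.get("trace", []) + [current]}
            current = self.router(current, state)
            if current is None:
                return state
        raise RuntimeError("workflow did not finish within max_steps")


LOOKUP_WORDS = ("who", "what", "when", "where")  # hypothetical routing heuristic


def classify(state: State) -> dict:
    return {"needs_lookup": any(w in state["question"].lower() for w in LOOKUP_WORDS)}


def retrieve(state: State) -> dict:
    return {"context": f"documents about '{state['question']}'"}  # stand-in for vector search


def answer(state: State) -> dict:
    if "context" in state:
        return {"answer": f"Answer based on {state['context']}"}
    return {"answer": "Hello! How can I help?"}


def route(node: str, state: State) -> Optional[str]:
    if node == "classify":
        return "retrieve" if state["needs_lookup"] else "answer"
    if node == "retrieve":
        return "answer"
    return None  # "answer" is the final step


flow = Orchestrator({"classify": classify, "retrieve": retrieve, "answer": answer}, route)
print(flow.run("classify", {"question": "What is RAG?"})["trace"])
print(flow.run("classify", {"question": "hi there"})["trace"])
```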

Key Benefits of Multi-Agent Workflows

Scalability: Add more agents as your needs grow.

Flexibility: Swap in a new LLM or external tool without breaking the system.

Reliability: If one agent fails, another can take over, making the system more resilient.
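One simple way to get that resilience is a fallback chain: try agents in order and return the first successful answer. This only helps when the backup can do the same job, for example a smaller model standing in for a larger one. The two agents below are hypothetical, and the first one fails on purpose to show the fallback.

```python
from typing import Callable, Sequence


def with_fallback(agents: Sequence[Callable[[str], str]]) -> Callable[[str], str]:
    """Return an agent that tries each agent in order and returns the first success."""
    def run(query: str) -> str:
        errors = []
        for agent in agents:
            try:
                return agent(query)
            except Exception as exc:  # a real system would catch narrower error types
                errors.append(f"{getattr(agent, '__name__', agent)}: {exc}")
        raise RuntimeError("all agents failed: " + "; ".join(errors))
    return run


def large_model_agent(query: str) -> str:
    raise RuntimeError("simulated out-of-memory error")


def small_model_agent(query: str) -> str:
    return f"short answer to: {query}"


resilient = with_fallback([large_model_agent, small_model_agent])
print(resilient("summarize the report"))
```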

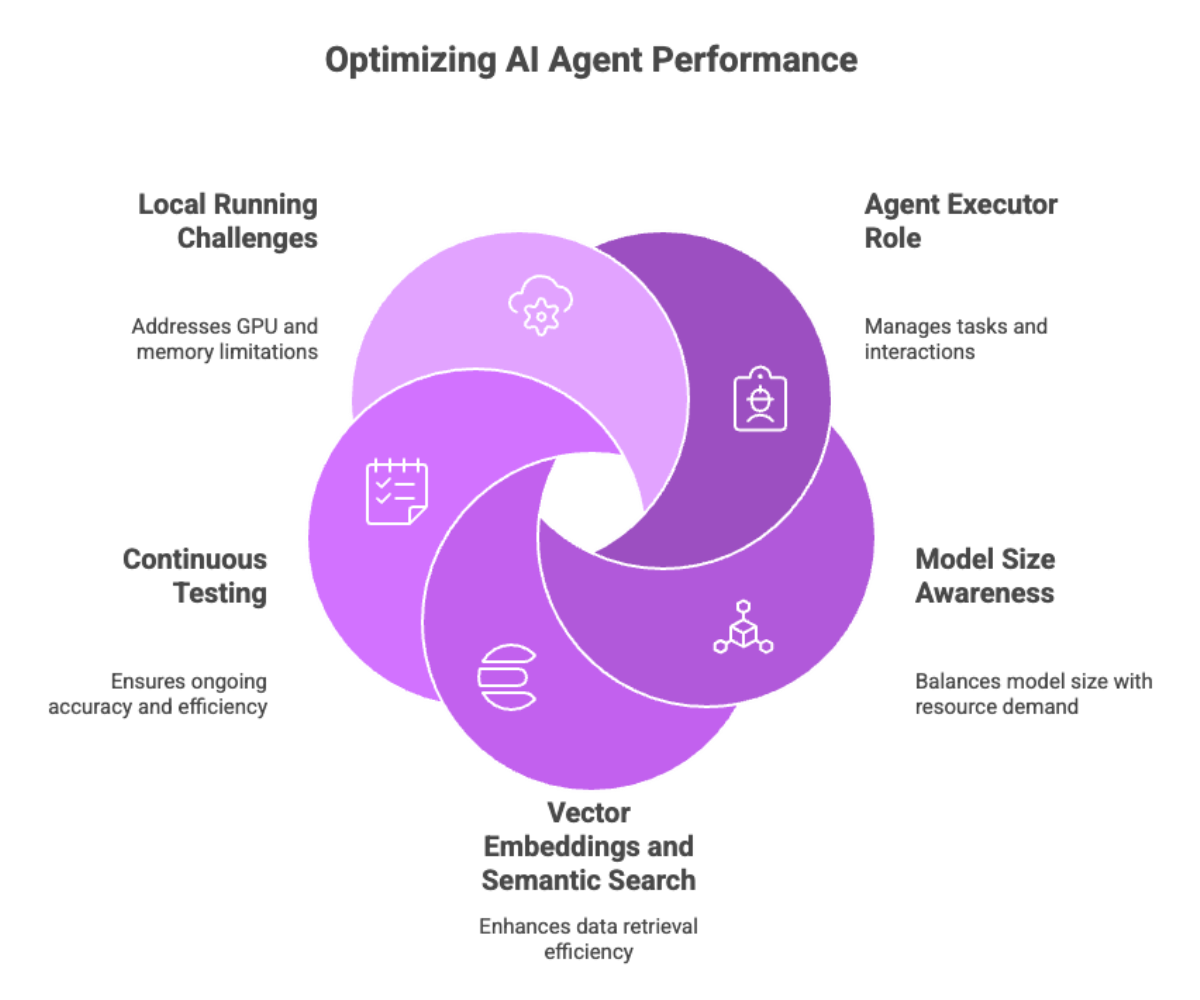

Agent Executor and Performance Optimization

Most AI agents rely on an agent executor: the engine that runs the agent's steps, manages workflows, and controls its agentic capabilities.

The Role of the Agent Executor

The agent executor launches tasks, runs the tools an agent requests, and checks that each agent returns a usable response.

In multi-agent workflows, it decides which agent handles each part of the process.

Think of it as the project manager for a team of autonomous agents.
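At its core, an executor is a loop: ask the model what to do next, run the requested tool, feed the result back, and stop when the model gives a final answer or a step limit is hit. The sketch below assumes a simple JSON reply format ({"tool": ..., "input": ...} or {"final": ...}); real frameworks, such as LangChain's AgentExecutor or native tool calling in model APIs, use their own formats. scripted_llm is a stand-in so the example runs without a model.

```python
import json
import operator
from typing import Callable

Tool = Callable[[str], str]
LLM = Callable[[list[dict]], str]


def run_agent(llm: LLM, tools: dict[str, Tool], question: str, max_steps: int = 5) -> str:
    """Minimal executor loop: ask the model for an action, run the tool, feed back the result."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = llm(messages)
        action = json.loads(reply)  # assumed format: {"tool", "input"} or {"final"}
        if "final" in action:
            return action["final"]
        tool = tools.get(action["tool"])
        observation = tool(action["input"]) if tool else f"unknown tool: {action['tool']}"
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "tool", "content": observation})
    return "Stopped: step limit reached."


OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def calculator(expr: str) -> str:
    # Expects "a op b" with integers, e.g. "17 * 3"; avoids eval() on model output.
    a, op, b = expr.split()
    return str(OPS[op](int(a), int(b)))


def scripted_llm(messages: list[dict]) -> str:
    # Stand-in for a real model: request one calculation, then answer with its result.
    if messages[-1]["role"] == "user":
        return json.dumps({"tool": "calculator", "input": "17 * 3"})
    return json.dumps({"final": f"The result is {messages[-1]['content']}."})


print(run_agent(scripted_llm, {"calculator": calculator}, "What is 17 times 3?"))
```

The step limit matters in practice: it stops a model that keeps requesting tools from looping forever.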

Performance Optimization Strategies

Model Size Awareness

While larger models provide more nuanced answers, they increase resource demand.

Use smaller models for lightweight tasks like query classification or keyword extraction.
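A small routing table is enough to act on this advice. The sketch assumes two locally pulled Ollama models; the tags llama3.2:1b and llama3.1:8b and the set of "lightweight" task names are examples to replace with your own.

```python
# Hypothetical routing table; the model tags are examples of models you might have pulled.
SMALL_MODEL = "llama3.2:1b"
LARGE_MODEL = "llama3.1:8b"
LIGHTWEIGHT_TASKS = {"classify_query", "extract_keywords", "detect_language"}


def pick_model(task_type: str) -> str:
    """Send cheap, well-defined tasks to the small model and everything else to the large one."""
    return SMALL_MODEL if task_type in LIGHTWEIGHT_TASKS else LARGE_MODEL


print(pick_model("extract_keywords"))
print(pick_model("write_report"))
```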

Vector Embeddings and Semantic Search

Store data in a vector store for rapid retrieval.

Use semantic search to fetch the most relevant information before calling the LLM.

Continuous Testing

Regularly test and tune AI agents against a fixed set of benchmark tasks.

Log metrics such as latency, accuracy, and cost on every run so you can spot regressions.
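A benchmark harness can be very small. This sketch runs (question, expected answer) pairs through any agent function and reports exact-match accuracy and latency. Exact match is an assumed, deliberately strict metric; free-form answers usually need a looser comparison. Cost is left out because for a local model it depends on your hardware; it could be added as another field. toy_agent is a hypothetical agent under test.

```python
import statistics
import time
from typing import Callable


def evaluate(agent: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Run (question, expected) cases; report exact-match accuracy and latency in seconds."""
    if not cases:
        raise ValueError("need at least one benchmark case")
    latencies, correct = [], 0
    for question, expected in cases:
        start = time.perf_counter()
        answer = agent(question)
        latencies.append(time.perf_counter() - start)
        correct += answer.strip().lower() == expected.strip().lower()
    return {
        "cases": len(cases),
        "accuracy": correct / len(cases),
        "median_latency_s": statistics.median(latencies),
        "max_latency_s": max(latencies),
    }


def toy_agent(question: str) -> str:
    # Hypothetical agent under test: knows exactly one fact.
    return "4" if "2 + 2" in question else "I don't know"


report = evaluate(toy_agent, [("What is 2 + 2?", "4"), ("Capital of France?", "Paris")])
print(report)
```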

Unique Challenges in Running Locally

Running LLMs locally introduces challenges such as limited GPU memory and the need to optimize memory use.

Developers may still need to turn to cloud services occasionally for tasks that require more scale than local hardware provides.

Building AI Apps with AI Agents

One of the most exciting applications of AI agents is turning them into fully functional AI apps with user-friendly interfaces.

From Agents to Apps

A local AI agent can be wrapped in an AI app that lets users interact with it directly through chatbots, dashboards, or voice interfaces.

Example: An AI app could take user queries, route them through a multi-agent workflow, and return a polished answer with references.
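The thinnest possible app layer is a local HTTP endpoint that accepts a query and returns JSON, which a chat front end or dashboard can call. This sketch uses Python's built-in http.server; answer_query is a hypothetical placeholder for the multi-agent workflow, and port 8000 is an arbitrary choice. Binding to 127.0.0.1 keeps the app reachable only from the local machine.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def answer_query(query: str) -> dict:
    # Placeholder for the multi-agent workflow; a real app would call the agents here.
    return {"answer": f"(stub) You asked: {query}", "references": []}


def handle_body(raw: bytes) -> tuple[int, dict]:
    """Validate a JSON body like {"query": "..."}; return an HTTP status and a payload."""
    try:
        query = json.loads(raw)["query"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"query": "..."}'}
    return 200, answer_query(query)


class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_body(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 8000), ChatHandler).serve_forever()
```

Keeping validation in handle_body, separate from the HTTP handler, makes the request logic easy to test without starting a server.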

Developer Tools and Frameworks

Prompt Hub for managing prompts and testing different configurations.

LangGraph for workflow orchestration.

Ollama for local LLM inference.

External tools like APIs for retrieval, file processing, or automation.

Community Contributions and Open-Source Support

Many developers build open-source frameworks that make building agents faster.

Contributing back to the community improves agent reliability and speeds up the learning process for others.

Benefits of AI Apps

Provide a user-friendly interface for non-technical users.

Enable real-time data analysis, image generation, and automation.

Make AI agents accessible as everyday productivity companions.