Understanding Edge AI: Benefits and Applications for Modern Technology

Edge AI is the convergence of artificial intelligence (AI) and edge computing, designed to bring machine learning directly to local edge devices such as IoT sensors, gateways, smartphones, and industrial controllers. Unlike traditional cloud-based processing, where data must travel back and forth to a cloud computing facility or centralized data center, edge AI performs real-time data processing where the data is generated.

This shift in compute infrastructure offers several transformative benefits:


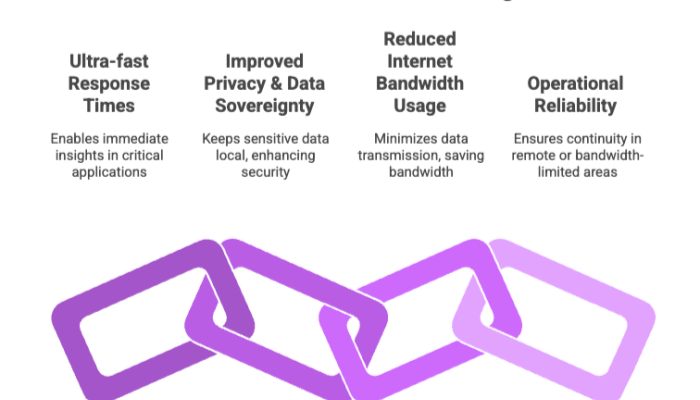

Ultra-fast response times → AI models running on-device deliver immediate insights, which matters most in safety-critical domains like self-driving cars and medical devices.

Improved privacy & data sovereignty → Sensitive sensor data remains local, avoiding risks that come with sending data across networks to external servers.

Reduced internet bandwidth usage → Instead of transmitting raw data, only results or compressed representations are shared with cloud servers.

Operational reliability → Edge devices can continue working even without a stable internet connection, ensuring continuity in remote or bandwidth-limited locations.
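The bandwidth point above can be illustrated with a minimal sketch. It assumes a hypothetical device that collects a window of temperature readings and sends only a few summary statistics upstream; the function name `summarize_window`, the sample data, and the JSON payload format are illustrative assumptions, not part of any specific edge platform.

```python
import json
import statistics


def summarize_window(readings):
    """Reduce a window of raw readings to a few summary statistics."""
    return {
        "count": len(readings),
        "mean": round(statistics.fmean(readings), 3),
        "min": min(readings),
        "max": max(readings),
    }


# Hypothetical window of 1,000 temperature readings sampled on the device.
raw_window = [20.0 + 0.01 * i for i in range(1000)]

# Payload if every raw reading were transmitted to the cloud.
raw_payload = json.dumps(raw_window).encode("utf-8")

# Payload if only the locally computed summary is transmitted.
summary_payload = json.dumps(summarize_window(raw_window)).encode("utf-8")

print(len(raw_payload), "bytes raw vs", len(summary_payload), "bytes summarized")
```

How much is saved in practice depends on the window size and on which statistics the cloud side actually needs.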

In practice, edge AI solutions power everything from smart home appliances that recognize voice commands, to autonomous vehicles making split-second driving decisions, to industrial robots performing predictive maintenance.

As businesses demand real time decision making and consumers expect instant, private, and intelligent experiences, edge AI technology has become a defining force in modern AI innovation.

Edge Computing and Technology

At the heart of edge AI lies edge computing, a paradigm that shifts data analysis and decision-making from centralized cloud servers to local edge devices.

How Edge Technology Powers AI

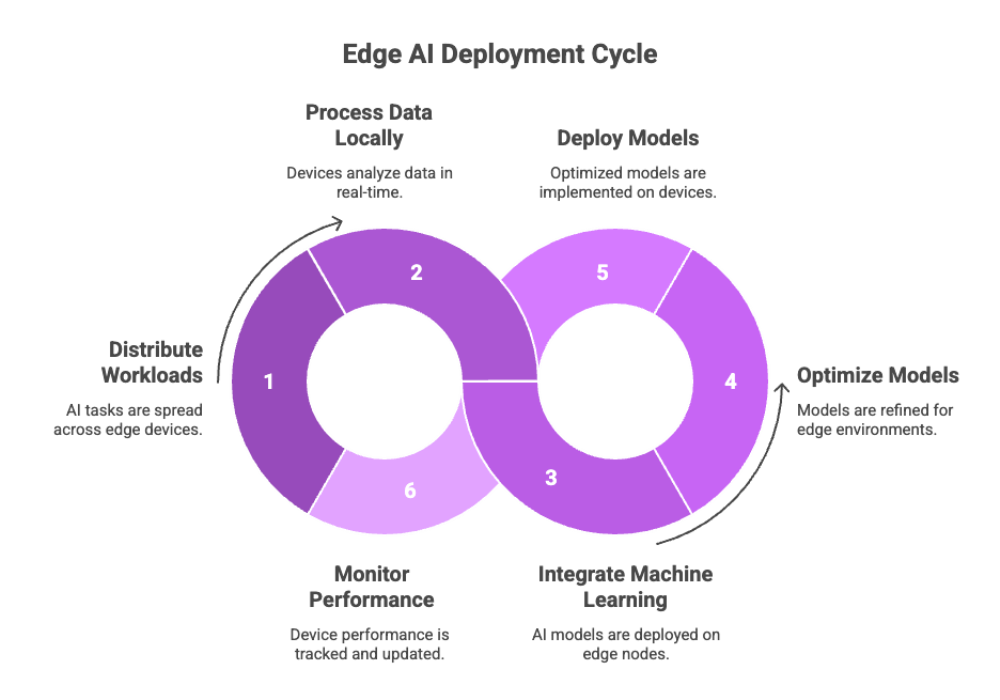

Distributed Processing → Instead of relying solely on massive cloud servers, edge platforms distribute AI workloads across a network of edge devices, sensors, and gateways.

Data Processing Locally → Devices like cameras, drones, or IoT wearables run AI models directly, reducing latency.

Integration with Machine Learning → By deploying trained AI models on edge nodes, real-time predictions are possible for environments that can’t afford cloud delays.

Edge AI Platforms & Tools

To make deploying edge AI scalable, vendors and open-source communities have introduced edge AI platforms. These platforms:

Provide frameworks for deploying AI models on constrained devices (e.g., TensorFlow Lite, PyTorch Mobile, ONNX Runtime).

Allow data scientists and ML engineers to optimize models for AI at the edge.

Offer device orchestration, monitoring, and over-the-air updates.

For instance, NVIDIA Jetson, Google Coral TPU, and Intel OpenVINO are specialized toolkits and hardware solutions that allow developers to deploy inference-ready AI models efficiently across a variety of edge devices.

By combining the scalability of cloud computing for training with the responsiveness of edge technology for inference, businesses can ensure seamless, secure, and cost-efficient AI deployments.

Benefits of Edge AI

The benefits of edge AI are wide-ranging and apply across consumer, industrial, and enterprise domains. Let’s break them down:

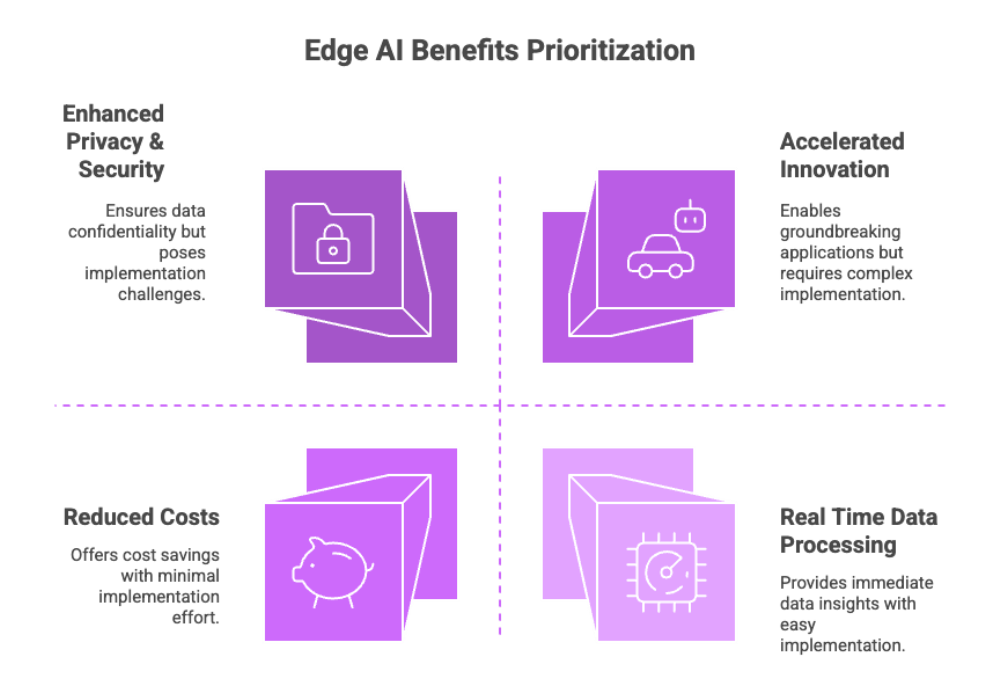

1. Real Time Data Processing

Edge AI runs inference on local devices, avoiding the delays associated with cloud-based round trips. For example, in predictive maintenance, analyzing sensor data on the machine itself in real time helps prevent downtime without requiring remote processing.

2. Enhanced Privacy & Security

Keeping data local instead of transmitting it to external servers improves privacy. This is crucial in sectors like healthcare, where patient data must remain confidential.

3. Reduced Costs

By reducing internet bandwidth usage and cloud processing requirements, organizations save on recurring infrastructure costs. Deploy AI locally and only transmit insights when necessary.

4. Scalability Across Various Industries

From manufacturing to transportation, edge AI solutions enable businesses to accelerate decision-making, automate business processes, and save time in operations.

5. Accelerated Innovation

Edge AI not only enhances existing processes but also enables entirely new applications like smart homes, autonomous vehicles, and AI-driven robotics that simply wouldn’t be feasible with traditional cloud AI.

In short: edge AI offers a unique balance of speed, security, and scalability that cloud alone cannot achieve.

Cloud Computing Comparison

To fully appreciate the benefits of edge AI, it’s important to contrast it with cloud computing.

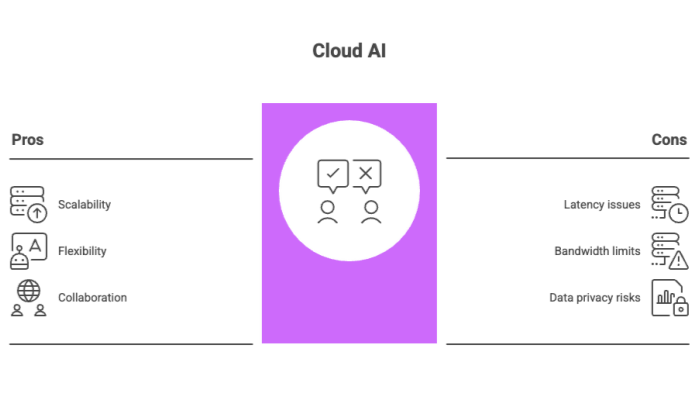

Cloud AI Strengths

Scalability → Cloud servers can handle massive datasets for model training.

Flexibility → Supports a wide range of AI algorithms and architectures.

Collaboration → Centralized storage makes it easier for global teams of data scientists to collaborate.

Cloud AI Weaknesses

Latency issues → In time-sensitive domains, waiting for cloud inference is impractical.

Bandwidth limits → Constantly transmitting sensor data to the cloud consumes costly network resources.

Data privacy risks → Sensitive data stored in cloud computing facilities can create compliance challenges.

Edge AI Complementing Cloud

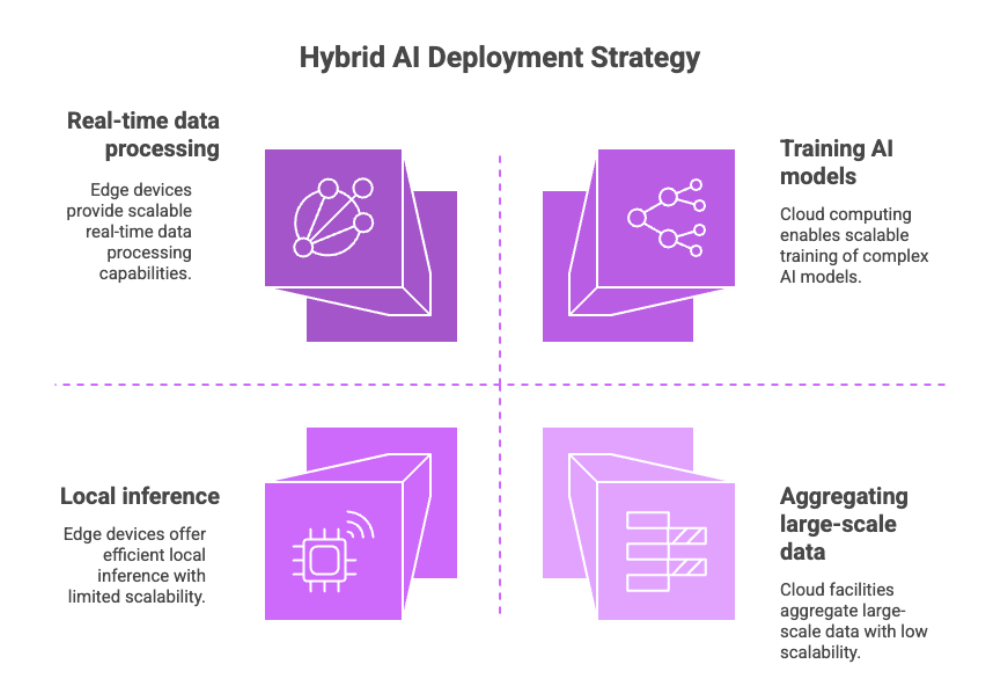

Rather than replacing the cloud, edge AI solutions complement it in a hybrid approach:

Cloud computing facilities → Used to train models, aggregate large-scale data, and perform heavy computation.

Edge devices → Used for real time data processing and local inference.

Together, they deliver both scalability and instant responsiveness.

This cloud + edge synergy ensures enterprises can deploy AI effectively while meeting privacy and latency requirements.
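One way to picture this division of labor is a store-and-forward pattern: the edge node acts on each local inference result immediately and queues compact results for the cloud, uploading them only when a connection is available. The sketch below is a simplified illustration under stated assumptions; the `EdgeNode` class, the `infer` and `upload` callables, and the temperature threshold standing in for a real model are all hypothetical.

```python
from collections import deque


class EdgeNode:
    """Runs inference locally and forwards results to the cloud when possible."""

    def __init__(self, infer, upload, max_buffer=1000):
        self.infer = infer      # local model call (hypothetical stand-in)
        self.upload = upload    # sends one result to the cloud (hypothetical)
        # Bounded buffer: the oldest results are dropped if it fills up offline.
        self.pending = deque(maxlen=max_buffer)

    def handle(self, sample):
        """Infer on-device and return the result without waiting for the cloud."""
        result = self.infer(sample)
        self.pending.append(result)
        return result

    def flush(self, online):
        """Upload buffered results if connected; return how many were sent."""
        sent = 0
        while online and self.pending:
            self.upload(self.pending[0])
            self.pending.popleft()
            sent += 1
        return sent


uploaded = []  # stands in for cloud-side storage in this sketch
node = EdgeNode(infer=lambda temp: {"hot": temp > 30.0}, upload=uploaded.append)

node.handle(25.0)          # decision is available locally right away
node.handle(35.0)
node.flush(online=False)   # no connection: results stay buffered
node.flush(online=True)    # connection restored: buffered results are sent
```

A bounded buffer is a deliberate trade-off here: it protects a memory-constrained device at the cost of losing the oldest results during a long outage.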

Deploying AI Models on Edge Devices

Deploying AI models on edge devices is where the promise of edge AI becomes practical. Unlike traditional cloud-hosted AI, where data is streamed to a cloud computing facility, deploying edge AI requires careful planning around hardware limitations, connectivity, and optimization.

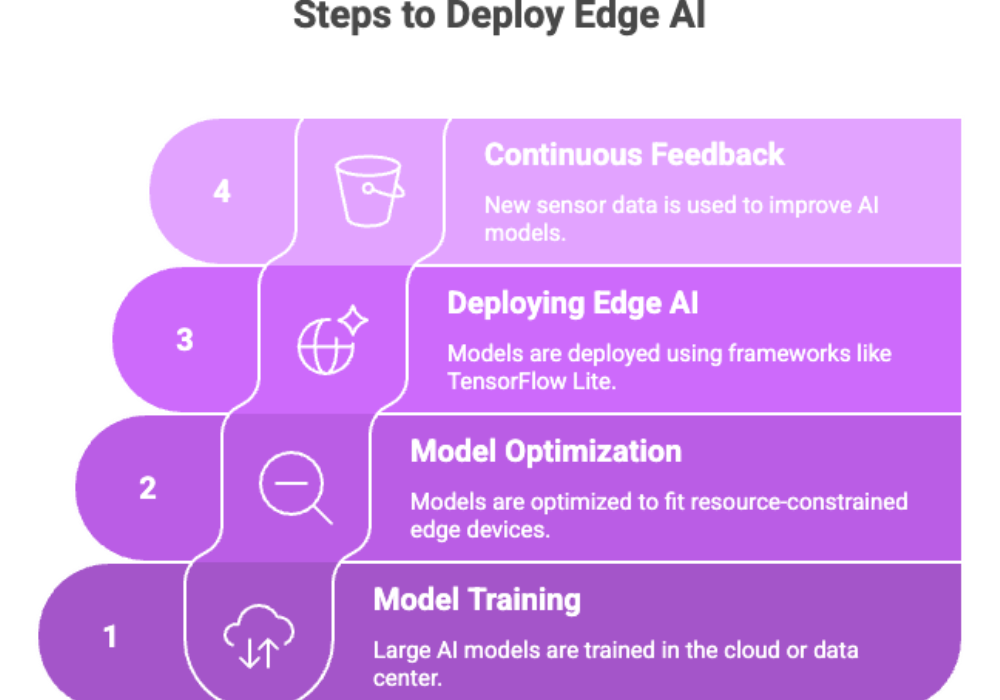

Steps in Deployment

Model Training (Cloud / Data Center)

Large AI models are initially trained in the cloud or a data center, where there is sufficient compute infrastructure.

Here, machine learning and deep learning algorithms ingest massive datasets to learn patterns and relationships.

Model Optimization (Pre-Deployment)

Trained models are often too large for resource-constrained local edge devices.

Techniques like quantization, pruning, and knowledge distillation shrink models without sacrificing much accuracy.

This ensures model performance is balanced with device constraints like memory, storage, and processing power.
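As a rough illustration of quantization, the sketch below maps a list of float weights to 8-bit integers with an affine (scale plus zero-point) scheme and then reconstructs approximate floats. It is a pure-Python toy under stated assumptions, not the procedure used by TensorFlow Lite, PyTorch Mobile, or ONNX Runtime; the function names and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to int8 values using a scale and a zero point."""
    # Extend the range to include 0.0 so that zero stays representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    span = w_max - w_min
    # Fall back to a scale of 1.0 when all weights are zero.
    scale = span / 255 if span > 0 else 1.0
    zero_point = round(-128 - w_min / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical layer weights
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)

# Each weight now fits in one byte; the price is a small rounding error.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, "max error:", max_error)
```

The reconstruction error stays on the order of one quantization step (`scale`), which is why accuracy often degrades only slightly when a model is quantized.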

Deploying Edge AI

Models are deployed using frameworks like TensorFlow Lite, PyTorch Mobile, or ONNX Runtime.

Developers use edge platforms that simplify deploying AI models across fleets of IoT devices.

Continuous Feedback Loop

Deployed models benefit from a feedback loop, where new sensor data gathered at the network edge is sent back to the cloud.

This supports further training and iterative improvements to AI models.

Training Process & Model Refinement

The training process does not end once models are deployed. Instead:

Devices can send locally processed data summaries or model performance metrics.

Cloud servers aggregate and retrain, pushing updated versions back to edge devices.

This enables a near real time improvement cycle, keeping inference engines accurate and efficient.
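The cloud side of this cycle can be sketched as follows. The example assumes each device reports how many predictions it made and how many were later confirmed correct, and that the cloud rolls out a new model version when fleet-wide accuracy drops below a threshold. The `DeviceReport` fields, the 0.9 threshold, and the version-bump stand-in for actual retraining are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DeviceReport:
    """Performance metrics one edge device sends back to the cloud."""
    device_id: str
    model_version: int
    samples: int   # predictions made since the last report
    correct: int   # predictions later confirmed as correct


def fleet_accuracy(reports):
    """Sample-weighted accuracy across devices, or None if there is no data."""
    total = sum(r.samples for r in reports)
    if total == 0:
        return None
    return sum(r.correct for r in reports) / total


def next_model_version(current_version, reports, min_accuracy=0.9):
    """Return the model version devices should run after this reporting round."""
    relevant = [r for r in reports if r.model_version == current_version]
    accuracy = fleet_accuracy(relevant)
    if accuracy is not None and accuracy < min_accuracy:
        # Stand-in for retraining in the cloud and publishing a new model.
        return current_version + 1
    return current_version


reports = [
    DeviceReport("camera-01", model_version=3, samples=100, correct=95),
    DeviceReport("camera-02", model_version=3, samples=100, correct=80),
]
print(next_model_version(3, reports))
```

Weighting by sample count keeps a device with very few predictions from skewing the fleet-wide figure.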

This pipeline allows organizations to deploy AI quickly while continuously evolving solutions to meet changing real world problems.

Edge AI Solutions and Applications

1. Manufacturing & Industrial Automation

Predictive Maintenance → Using edge AI models to process vibration, heat, and acoustic data in factories to anticipate machine failures.

Quality Control → Computer vision cameras perform object detection in real time, identifying defects before products reach the market.

Business Operations → Automating inspections and audits improves business processes while saving operational costs.
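A very simple form of on-device predictive maintenance is to flag readings that deviate sharply from recent history. The sketch below uses a rolling z-score on vibration amplitudes as a stand-in for a trained model; the `VibrationMonitor` class, the window size, the threshold of 3.0, and the sample readings are illustrative assumptions.

```python
from collections import deque
import statistics


class VibrationMonitor:
    """Flags readings that deviate strongly from a rolling window of history."""

    def __init__(self, window=50, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if the reading looks anomalous; otherwise record it."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not mask later ones.
            self.history.append(value)
        return is_anomaly


monitor = VibrationMonitor()

# Hypothetical normal vibration amplitudes, followed by one sharp spike.
normal_readings = [1.0 + 0.01 * (i % 5) for i in range(30)]
normal_flags = [monitor.update(v) for v in normal_readings]
spike_flag = monitor.update(5.0)

print(any(normal_flags), spike_flag)
```

Because the check needs only a short window of recent values, it fits comfortably on a resource-constrained controller and requires no network connection.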

2. Transportation & Autonomous Vehicles

Self-driving cars rely on real-time decision making from multiple AI models.

LIDAR, radar, and video feeds are processed on board the vehicle using edge AI techniques.

Unlike cloud-based inference, edge AI ensures vehicles remain functional without stable connectivity.

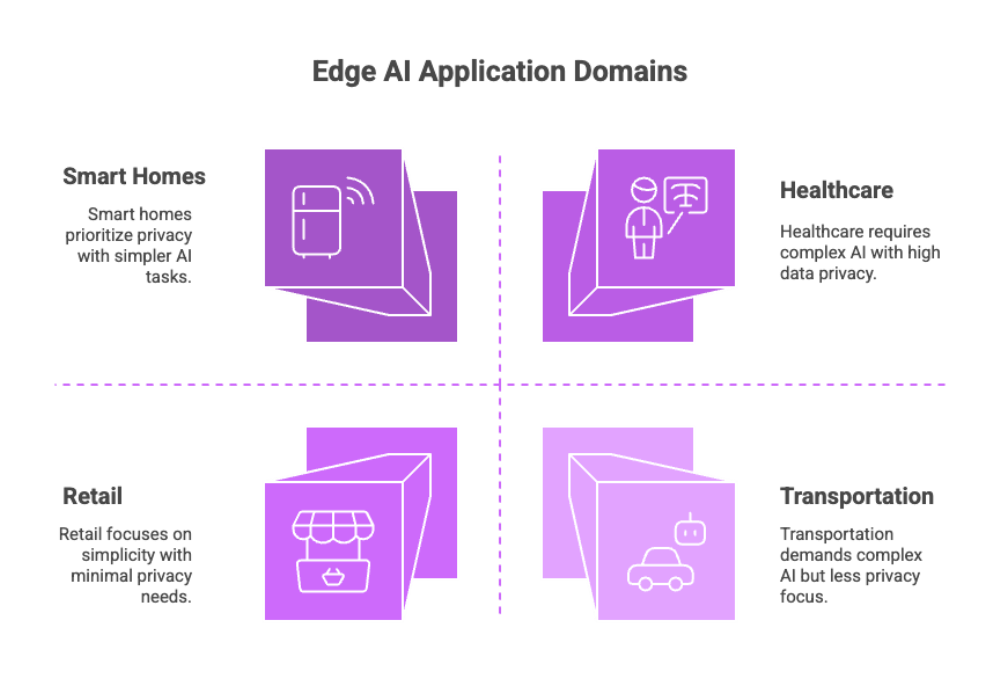

3. Smart Homes & IoT

Smart home appliances (fridges, speakers, thermostats) incorporate edge AI technology to react to voice commands or energy usage patterns.

These devices reduce reliance on cloud AI, offering faster and more private responses.

Smart homes also demonstrate how edge AI use cases improve comfort and efficiency and save time for everyday users.

4. Healthcare

Medical Imaging → On-device analysis of CT scans or X-rays improves real time decision making in emergencies.

Wearables → AI algorithms running locally monitor heart rate or glucose levels continuously, without sending raw data to the cloud.

Data Security → Local inference safeguards sensitive data and patient privacy.

5. Retail & Customer Experience

Predictive Analytics → AI at the edge enables instant product recommendations in physical stores.

Smart Checkout → Cameras use object detection models to automatically recognize items, eliminating manual scanning.

Business Operations → Edge AI enhances fraud detection and customer analytics in near real time.

In short, edge AI solutions are not limited to one domain — they are reshaping various industries by enabling real world applications that require low latency and high data privacy.

Future of Edge AI and Emerging Trends

The future of edge AI is not just about scaling what exists today; it is about creating new ecosystems where AI at the edge is the default rather than the exception.

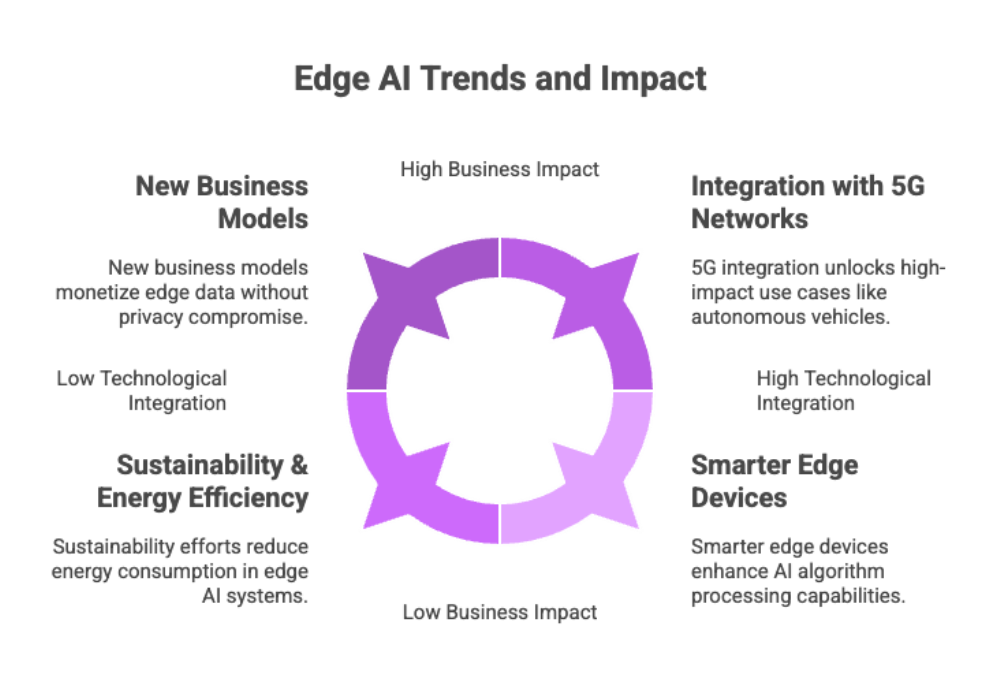

Key Trends Shaping the Future

Integration with 5G Networks

5G provides the low-latency, high-bandwidth environment needed for near real time data processing.

Together, 5G and edge AI platforms will unlock use cases like augmented reality, drone swarms, and autonomous vehicles at scale.

Smarter Edge Devices

Future edge devices will be designed with built-in accelerators for AI algorithms.

From consumer-grade hardware like smartphones to industrial-grade rugged devices, we will see greater adoption of edge AI across the network edge.

Hybrid Cloud + Edge Models

The most competitive enterprises will embrace cloud AI for training models and large-scale analytics, while keeping inference at the edge for low-latency analysis.

This model ensures both cost efficiency and agility.

New Business Models

Edge AI is expected to fuel data-as-a-service (DaaS) and AI-as-a-service (AIaaS) models.

Organizations can monetize sensor data collected at the edge without compromising data privacy.

Sustainability & Energy Efficiency

Future edge AI systems will focus on reducing energy consumption by optimizing AI models for efficiency.

Running inference on local edge devices can save bandwidth, and often power, compared with sending raw data to centralized data centers.

Conclusion: Why Edge AI Is the Future

When we ask, “What makes edge AI so powerful?” the answer lies in its ability to combine real time data processing, enhanced privacy, and reduced costs — while enabling organizations to accelerate innovation.

The benefits of edge AI are undeniable:

Faster decision-making → critical in autonomous vehicles and emergency healthcare.

Enhanced data privacy → protects sensitive data and user trust.

Increased efficiency → lowers cloud dependency, reduces internet bandwidth usage.

Scalability across various industries → from smart homes to industrial IoT.

As technology advances, edge AI platforms and deployment strategies will become a core part of every AI-powered solution. For organizations seeking to solve real-world problems, edge AI isn’t just a technical upgrade; it’s a strategic necessity.