Top AI Inference Infrastructure Solutions: What to Consider?

AI inference infrastructure is a cornerstone for enterprises aiming to scale artificial intelligence (AI) applications efficiently and securely. As organizations deploy more large-scale AI workloads, choosing the right inference infrastructure becomes critical for optimizing model performance, managing costs, and ensuring compliance. The key stakeholders are CIOs, CTOs, and CISOs, who must balance the demands of AI research, operational efficiency, and risk management.

Leading AI labs, such as Meta AI, are driving innovation in AI infrastructure and hardware by pushing the boundaries of compute requirements and orchestration strategies. The rapid advancement of generative AI is also reshaping infrastructure needs, requiring specialized hardware and integrated stacks to support high-performance, scalable deployments.

This article explores the evolving landscape of AI inference infrastructure, highlighting the role of cloud platforms, specialized hardware, and AI infrastructure companies. It also examines the implications for enterprise AI adoption, cost optimization, and compliance, providing actionable insights for technology leaders navigating this complex ecosystem.

Key Takeaways

AI inference infrastructure supports AI tasks such as model training, deployment, and real-time inference, all of which are essential for large-scale AI applications.

Major cloud providers like Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure offer scalable AI services with seamless integration and robust data management capabilities.

Specialized AI hardware, including GPUs, TPUs, and language processing units (LPUs), drives high-speed inference and efficient AI training.

Inference services are a critical component of AI infrastructure, enabling scalable and efficient AI deployment. How they are managed and scaled affects ease of use and cost across different environments.

AI infrastructure companies like NVIDIA and Intel lead innovation in accelerators and high-performance computing, with high-performance inference as a key capability for real-time AI workloads.

Enterprises must prioritize security, compliance, and cost efficiency when selecting AI inference platforms to manage risk and maximize AI adoption.

Understanding AI Inference Infrastructure

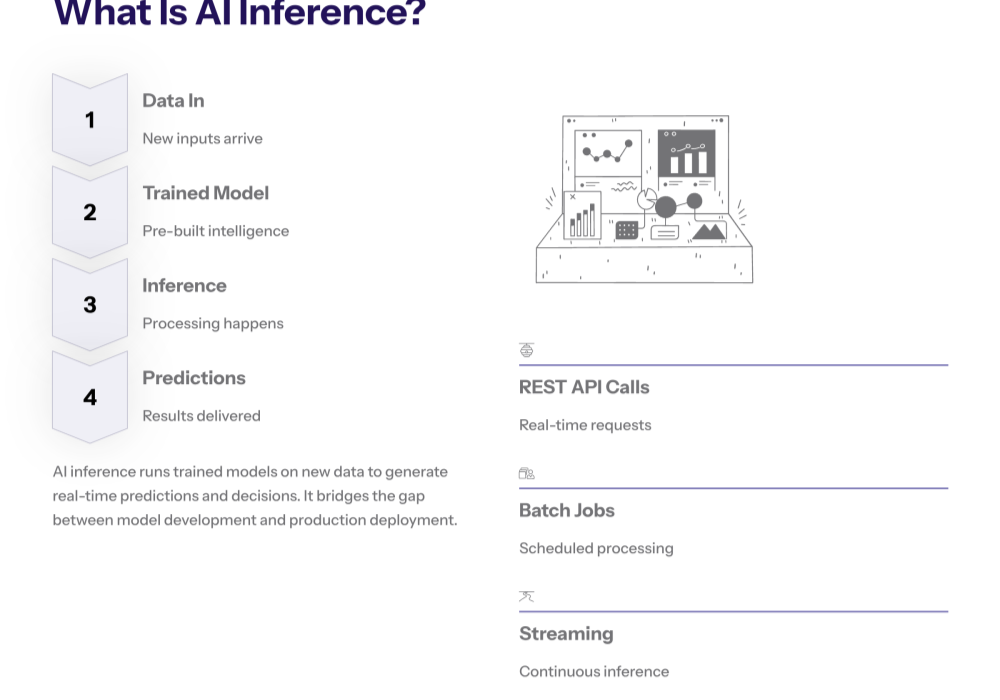

AI inference refers to the process where trained AI models make predictions or decisions based on new input data. This capability powers AI-driven solutions across industries, from natural language processing and computer vision to fraud detection systems and operational efficiency tools.

Large-scale AI deployments demand infrastructure that can handle intensive AI workloads of several types, such as REST API calls, batched processing, and streaming, while balancing performance and cost. AI inference infrastructure solutions encompass cloud platforms, specialized hardware, and software frameworks designed to support AI models throughout their lifecycle, from model training and fine-tuning to deployment and real-time inference.
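As a rough illustration of the batched-processing workload type mentioned above, the following Python sketch groups individual requests into small batches before handing them to a model. The names `micro_batches`, `run_batched`, and `toy_model` are hypothetical and not taken from any specific platform; a production inference server would typically also flush batches on a timeout.

```python
from typing import Callable, Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def micro_batches(items: Iterable[T], max_batch_size: int) -> Iterator[List[T]]:
    """Group incoming requests into batches of at most max_batch_size."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == max_batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly smaller, batch
        yield batch


def run_batched(
    items: Iterable[T],
    predict_batch: Callable[[Sequence[T]], Sequence[R]],
    max_batch_size: int = 8,
) -> List[R]:
    """Run a batch-capable model over all items, preserving input order."""
    results: List[R] = []
    for batch in micro_batches(items, max_batch_size):
        results.extend(predict_batch(batch))
    return results


def toy_model(batch: Sequence[str]) -> List[int]:
    """Hypothetical stand-in for a real model: returns each input's length."""
    return [len(text) for text in batch]


if __name__ == "__main__":
    print(run_batched(["a", "bb", "ccc"], toy_model, max_batch_size=2))
```

Batching trades a little latency for higher accelerator utilization, which is why the right batch size depends on whether the workload is an interactive API or an offline job.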

AI deployment strategies are critical for moving models from proof-of-concept to production environments, ensuring model availability, scalability, and ease of use. The choice of infrastructure directly impacts AI performance, especially for advanced capabilities like reasoning, multi-modality, and adaptive compute systems.

Cloud Platforms as the Backbone

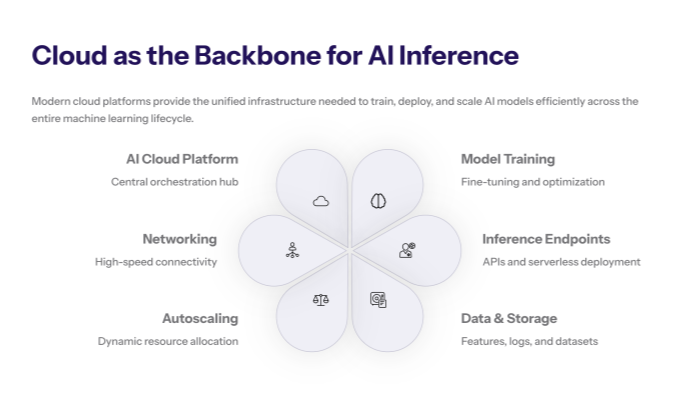

Cloud infrastructure plays a pivotal role in enabling scalable AI solutions. Providers such as Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure each serve as a leading cloud platform for AI inference, offering unified platforms that support AI model deployment, training, and inference.

These platforms provide robust data storage, high-speed networking, and integrated AI services like Vertex AI and Azure Machine Learning, facilitating seamless integration into enterprise workflows. Notably, these cloud platforms differentiate themselves by supporting deployment and scaling of large language models and other advanced AI models, making them ideal for enterprises seeking to leverage LLMs for natural language understanding, generation, and proprietary data access.

Cloud services also offer serverless inference endpoints, which let enterprises deploy models and scale AI applications dynamically without managing the underlying hardware. This flexibility is crucial for handling variable AI workloads and optimizing operational costs.
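The dynamic scaling described above can be sketched with a simple capacity estimate. This is a minimal sketch, assuming replicas are sized by Little's law (requests in flight = arrival rate × average latency); the function name, the `max_replicas` cap, and the scale-to-zero behavior are illustrative assumptions, not any provider's actual autoscaling policy.

```python
import math


def desired_replicas(
    requests_per_second: float,
    avg_latency_seconds: float,
    concurrency_per_replica: int,
    max_replicas: int = 20,
) -> int:
    """Estimate how many replicas are needed to serve the current load.

    Uses Little's law: requests in flight = arrival rate * average latency.
    """
    if requests_per_second < 0 or avg_latency_seconds < 0:
        raise ValueError("rate and latency must be non-negative")
    if concurrency_per_replica < 1 or max_replicas < 1:
        raise ValueError("concurrency and max_replicas must be at least 1")
    if requests_per_second == 0:
        return 0  # assumed scale-to-zero when the endpoint is idle
    in_flight = requests_per_second * avg_latency_seconds
    needed = math.ceil(in_flight / concurrency_per_replica)
    # Keep at least one replica under load and never exceed the cap.
    return max(1, min(needed, max_replicas))


if __name__ == "__main__":
    for rps in (0, 1, 100, 1000):
        print(rps, desired_replicas(rps, 0.25, concurrency_per_replica=4))
```

Real serverless platforms add cold-start handling, cooldown windows, and hardware-specific limits on top of an estimate like this.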

Specialized Hardware Accelerators

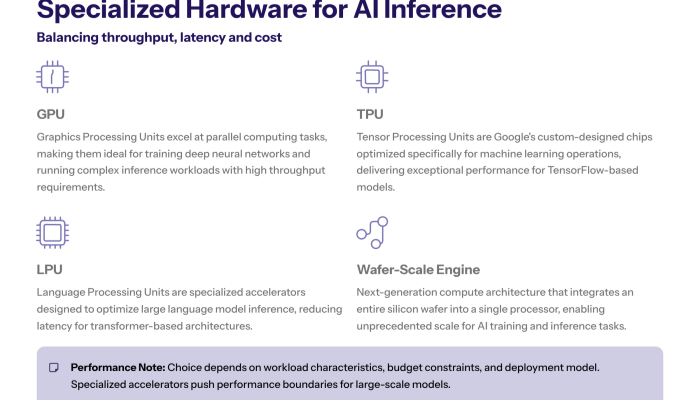

The efficiency of AI inference depends heavily on hardware accelerators. GPUs remain the industry standard for parallel processing, while TPUs (Tensor Processing Units) and LPUs (Language Processing Units, specialized chips designed for high-speed, low-latency AI inference) offer optimized performance for specific AI tasks.

Technologies like NVIDIA’s Tensor Cores and Intel’s Gaudi accelerators (which replaced its earlier, now-discontinued Nervana processors) exemplify advancements that deliver high-speed inference and efficient AI training. Additionally, Cerebras’s Wafer-Scale Engine represents a breakthrough in large-scale silicon chip design, integrating an enormous number of cores optimized for demanding AI workloads such as large language model training. Modern hardware platforms are increasingly capable of serving multiple models simultaneously, enabling efficient orchestration and scalability for enterprise AI applications.

Edge AI deployments further necessitate specialized hardware capable of real-time processing with low latency, expanding the reach of AI-driven solutions beyond centralized data centers.

Implications for Enterprise AI Adoption

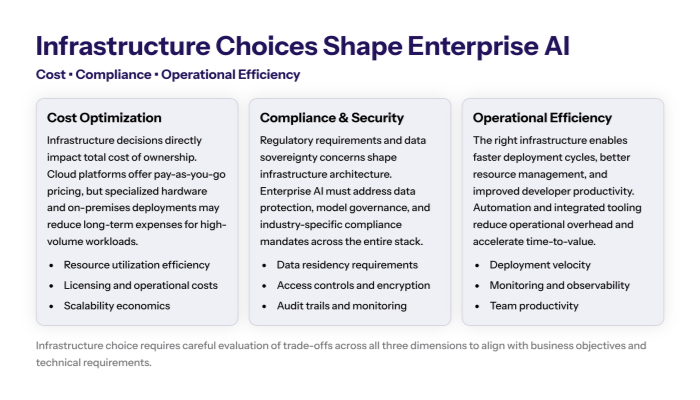

Adopting top AI inference infrastructure solutions impacts multiple facets of enterprise strategy. In addition to optimizing model performance, selecting the right AI platform is crucial for meeting specific enterprise requirements, including deployment environment, hardware compatibility, and integration with existing cloud services.

Cost Optimization: Leveraging cloud-native capabilities and specialized hardware enables enterprises to balance performance with cost efficiency. Transparent pricing models and autoscaling reduce waste and improve ROI. Different platforms also offer cost models tailored for AI projects of varying scale and maturity, allowing organizations to align infrastructure spend with project needs.

Compliance and Security: Enterprises must ensure AI infrastructure complies with applicable regulations and industry standards such as HIPAA, GDPR, and PCI DSS. Cloud providers and AI infrastructure companies increasingly offer certifications and security features like encryption, role-based access control, and audit logging.

Operational Efficiency: Unified AI platforms streamline AI workflows, from data management and model training to deployment and monitoring. This integration accelerates time-to-market and supports continuous AI model improvement.

Risk Management: Robust infrastructure reduces risks related to data breaches, model drift, and performance degradation. Observability tools and governance frameworks are essential for maintaining AI system integrity. Advanced AI systems provide the security, scalability, and observability needed for enterprise AI, supporting high-performance workloads and complex deployments.
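To make the cost optimization point in the list above concrete, the sketch below compares an always-on deployment sized for peak traffic with an autoscaled one that follows demand. The hourly price, replica counts, and traffic profile are hypothetical numbers chosen for illustration, not real provider pricing.

```python
from typing import Sequence


def always_on_cost(hours: int, replicas: int, price_per_replica_hour: float) -> float:
    """Cost of keeping a fixed number of replicas running for every hour."""
    return hours * replicas * price_per_replica_hour


def autoscaled_cost(
    replicas_per_hour: Sequence[int], price_per_replica_hour: float
) -> float:
    """Cost when the replica count follows demand hour by hour."""
    return sum(replicas_per_hour) * price_per_replica_hour


def savings_fraction(fixed_cost: float, scaled_cost: float) -> float:
    """Share of the fixed cost that autoscaling avoids."""
    if fixed_cost <= 0:
        raise ValueError("fixed_cost must be positive")
    return (fixed_cost - scaled_cost) / fixed_cost


if __name__ == "__main__":
    price = 2.0  # hypothetical price per replica-hour
    # Hypothetical day: 8 busy hours needing 4 replicas, 16 quiet hours needing 1.
    profile = [4] * 8 + [1] * 16
    fixed = always_on_cost(hours=24, replicas=4, price_per_replica_hour=price)
    scaled = autoscaled_cost(profile, price)
    print(fixed, scaled, savings_fraction(fixed, scaled))
```

With this made-up profile, autoscaling halves the daily bill; the real saving depends on how spiky the traffic is and on any minimum-replica or cold-start constraints.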

Opportunities and Challenges in AI Inference Infrastructure

As enterprises increasingly rely on AI-driven solutions, the demand for robust and scalable AI inference infrastructure continues to grow. This infrastructure plays a pivotal role in enabling efficient AI processing, supporting large-scale model training, and facilitating seamless AI model deployment.

Many platforms now enable rapid deployment of custom models and pre-trained machine learning models, reducing the need to train models from scratch and accelerating time-to-value. Beyond large-scale model training, hosting and managing large AI models requires specialized, scalable infrastructure capable of supporting complex, resource-intensive workloads.

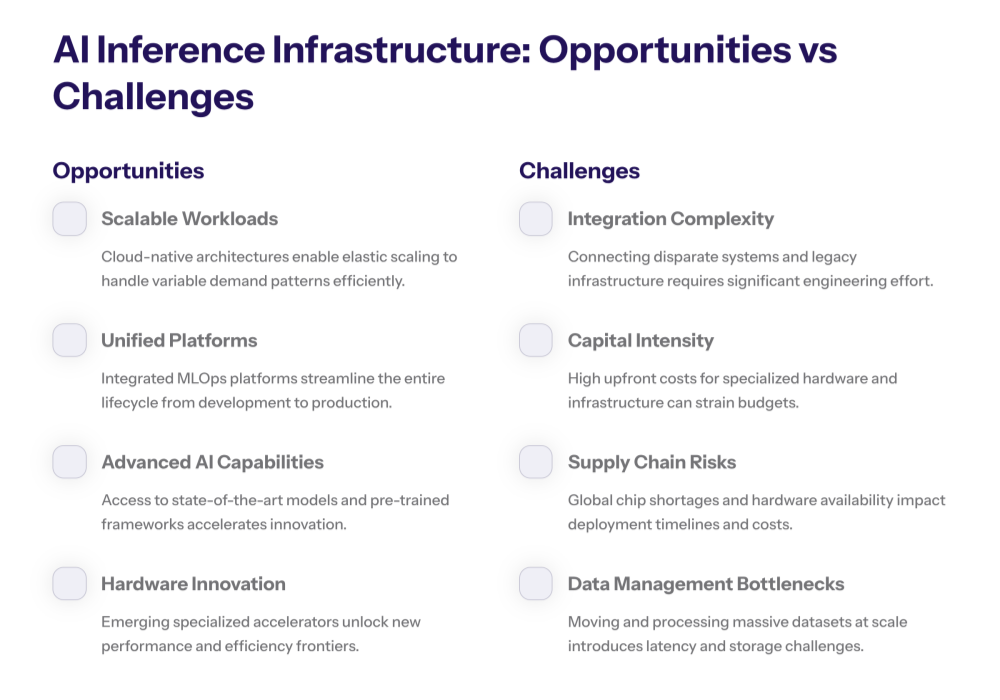

However, while the opportunities are vast—ranging from advanced AI capabilities to innovative hardware technologies—organizations must also navigate significant challenges such as integration complexity, capital investment, and data management. Understanding these opportunities and challenges is essential for technology leaders aiming to leverage top AI inference infrastructure solutions effectively.

Opportunities

Scalable AI Workloads: Enterprises can scale AI applications seamlessly across hybrid and multi-cloud environments, leveraging serverless inference and elastic compute resources.

Operational Efficiency: A unified platform consolidates data management, model training, and inference deployment into a single system, streamlining workflows and improving operational efficiency.

Advanced AI Capabilities: Integration with open source models and custom fine-tuning enhances AI model performance, supporting diverse AI-driven solutions.

Innovation in AI Hardware: Emerging technologies like photonic chips and transformer-specific ASICs promise breakthroughs in energy efficiency and inference speed.

Challenges

Complexity of Integration: Combining multiple AI infrastructure components requires expertise to ensure interoperability and governance.

Capital Intensity: Building or leasing high-performance AI infrastructure demands significant investment and strategic planning.

Supply Chain Risks: Geopolitical factors and hardware shortages can affect availability and cost of AI accelerators.

Data Management: Efficient data storage and transfer are critical to avoid bottlenecks in AI training and inference pipelines.

AI Infrastructure and Security

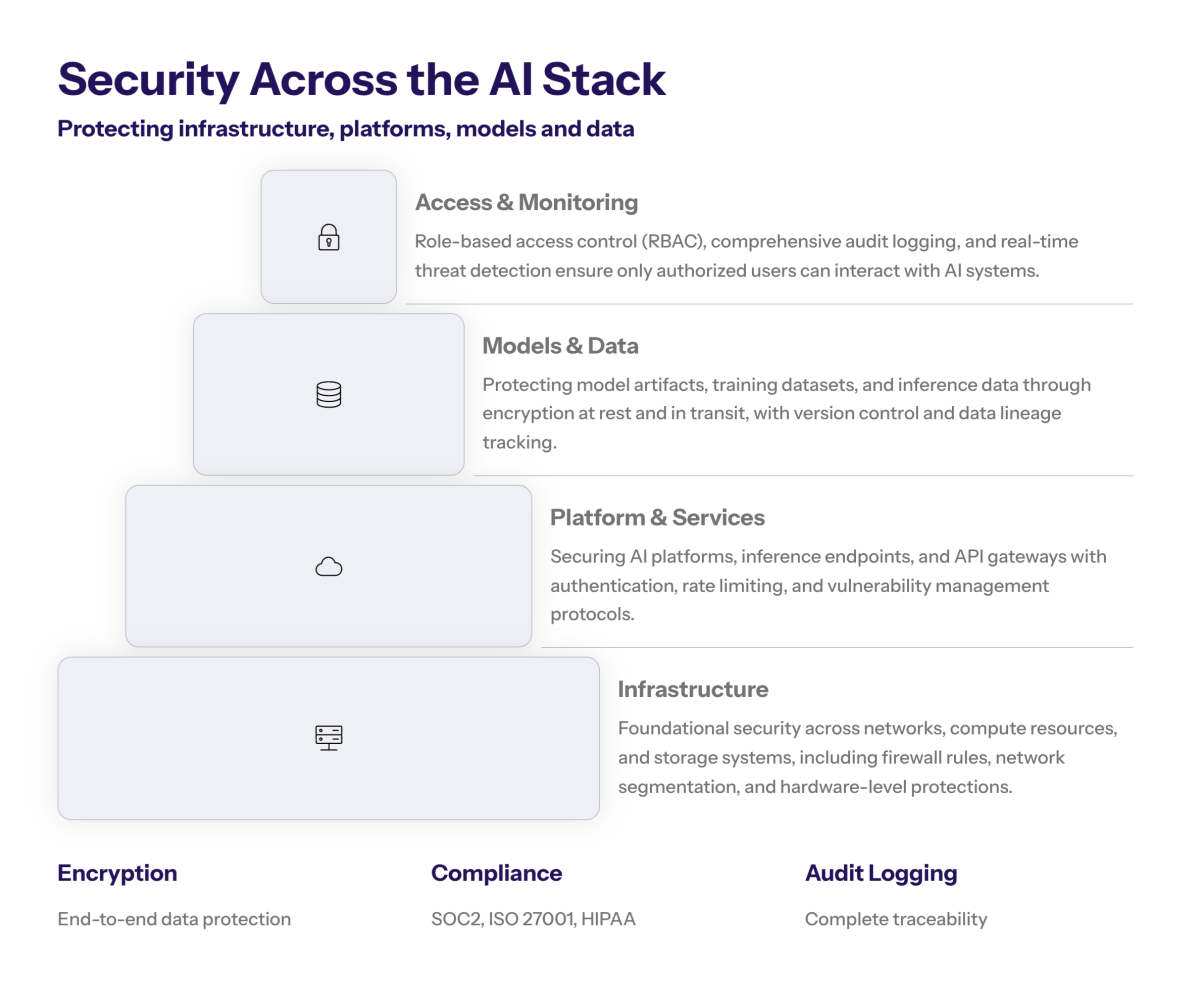

As enterprises accelerate their adoption of AI workloads, the security of AI infrastructure becomes a top priority. Protecting sensitive data, proprietary AI models, and the integrity of AI inference processes is essential for maintaining trust and compliance in large-scale AI deployments. Top AI infrastructure companies recognize these challenges and have developed robust security frameworks to safeguard every layer of the AI stack.

Leading cloud providers such as Google Cloud, Oracle Cloud Infrastructure, and Microsoft Azure deliver advanced security features tailored for AI inference platforms. These include end-to-end encryption, secure data storage, network segmentation, and granular access controls, all designed to protect AI models and data throughout their lifecycle. Additionally, these platforms hold attestations and certifications such as SOC 2 and ISO 27001 and support HIPAA-regulated workloads, helping AI deployments meet stringent regulatory requirements.

When evaluating an AI inference platform, enterprises should assess the security posture of both the cloud infrastructure and the AI services themselves. Features such as automated threat detection, audit logging, and model versioning help mitigate risks like data breaches and model drift. By partnering with top AI infrastructure companies and leveraging secure cloud infrastructure, organizations can confidently scale their AI initiatives while maintaining robust protection against evolving cyber threats.
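The access-control and audit-logging features mentioned above can be illustrated with a small sketch. The role names, permission strings, and the `authorize` function are hypothetical and exist only for this example; managed platforms expose their own IAM roles and logging services.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "ml-engineer": {"model:deploy", "model:invoke"},
    "analyst": {"model:invoke"},
}


def authorize(
    user: str, role: str, action: str, model_version: str, audit_log: List[str]
) -> bool:
    """Check a role-based permission and record the decision in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    # Log both allowed and denied attempts, tagged with the model version,
    # so reviewers can trace who did what to which model.
    audit_log.append(
        json.dumps(
            {
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "role": role,
                "action": action,
                "model_version": model_version,
                "allowed": allowed,
            }
        )
    )
    return allowed


if __name__ == "__main__":
    log: List[str] = []
    authorize("dana", "analyst", "model:invoke", "fraud-v3", log)
    authorize("dana", "analyst", "model:deploy", "fraud-v3", log)
    for entry in log:
        print(entry)
```

Recording denied attempts alongside allowed ones is a deliberate choice here, since denied requests are often the more useful signal during a security review.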

Best Practices for AI Inference Infrastructure

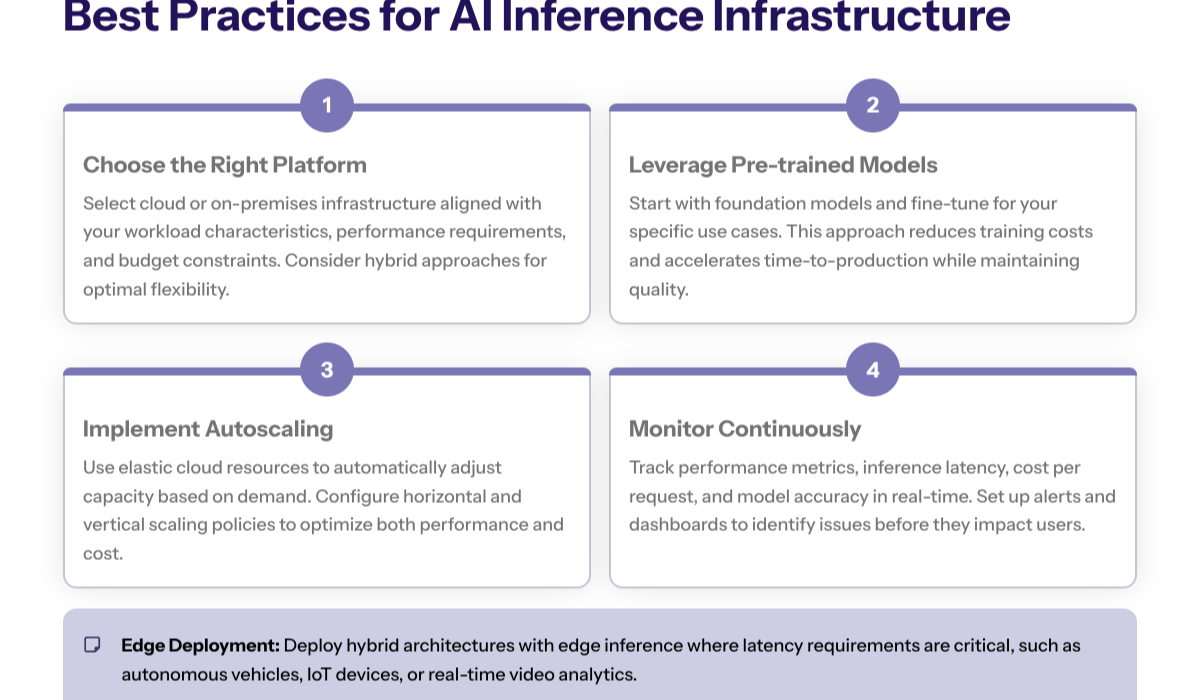

Optimizing AI inference infrastructure is crucial for organizations aiming to deliver high-performance, cost-efficient, and scalable AI applications. To achieve this, start by selecting an AI inference platform that aligns with your specific AI workloads and business objectives. Evaluate platforms based on their support for pre-trained models, ease of integration, and transparent cost structures to ensure efficient AI processing from the outset.
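One simple way to apply the evaluation criteria above is a weighted scoring matrix. The sketch below is an assumption about how a team might structure such a comparison; the criterion names, weights, platform names, and scores are hypothetical placeholders rather than assessments of real products.

```python
from typing import Dict, List, Tuple


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-criterion scores."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rank_platforms(
    platforms: Dict[str, Dict[str, float]], weights: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (platform, score) pairs sorted from best to worst."""
    scored = [(name, weighted_score(s, weights)) for name, s in platforms.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical weights and 1-5 scores, for illustration only.
    weights = {
        "pretrained_model_support": 2,
        "ease_of_integration": 1,
        "cost_transparency": 1,
    }
    platforms = {
        "platform_a": {
            "pretrained_model_support": 4,
            "ease_of_integration": 3,
            "cost_transparency": 5,
        },
        "platform_b": {
            "pretrained_model_support": 5,
            "ease_of_integration": 2,
            "cost_transparency": 2,
        },
    }
    for name, score in rank_platforms(platforms, weights):
        print(name, score)
```

The weights encode business priorities, so agreeing on them before scoring any vendor keeps the comparison honest.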

Leverage pre-trained models and fine-tuning capabilities to accelerate AI model deployment and minimize the resources required for model training. This approach not only reduces time-to-market but also improves cost efficiency, especially when dealing with large-scale AI workloads. Cloud services and scalable AI solutions from leading providers enable organizations to allocate resources dynamically, ensuring optimal performance and high-speed inference even as demand fluctuates.

Continuous monitoring of model performance is essential to maintain efficient AI processing and adapt infrastructure as needed. Incorporate hybrid cloud capabilities and edge AI solutions to support real-time AI applications, reduce latency, and extend AI deployments closer to data sources.
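As one possible form of the continuous monitoring described above, the sketch below computes a population stability index (PSI), a commonly used measure of how far a live score distribution has shifted from a baseline. The bin edges, sample data, and any alert threshold a team would apply are assumptions for illustration and should be tuned per workload.

```python
import math
from typing import List, Sequence


def histogram_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values falling in each bin [edges[i], edges[i+1]).

    The last bin also includes its right edge. Values outside the edges are
    not counted in any bin but still count toward the total.
    """
    if not values:
        raise ValueError("values must not be empty")
    if len(edges) < 2:
        raise ValueError("need at least two bin edges")
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    return [c / len(values) for c in counts]


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    exp_frac = histogram_fractions(expected, edges)
    act_frac = histogram_fractions(actual, edges)
    psi = 0.0
    for pe, pa in zip(exp_frac, act_frac):
        pe, pa = max(pe, eps), max(pa, eps)  # avoid log(0) for empty bins
        psi += (pa - pe) * math.log(pa / pe)
    return psi


if __name__ == "__main__":
    baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
    live = [0.6, 0.7, 0.8, 0.9, 0.6, 0.7, 0.8, 0.9]  # hypothetical shifted scores
    print(population_stability_index(baseline, live, edges=[0.0, 0.5, 1.0]))
```

A PSI of zero means the two distributions match across the chosen bins; larger values indicate growing drift and can trigger a retraining or investigation workflow.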

By following these best practices—selecting the right inference platform, leveraging scalable cloud infrastructure, and embracing edge and hybrid strategies—enterprises can ensure their AI deployments deliver maximum business value and remain competitive in the rapidly evolving landscape of artificial intelligence.

Future Outlook for AI Inference Infrastructure

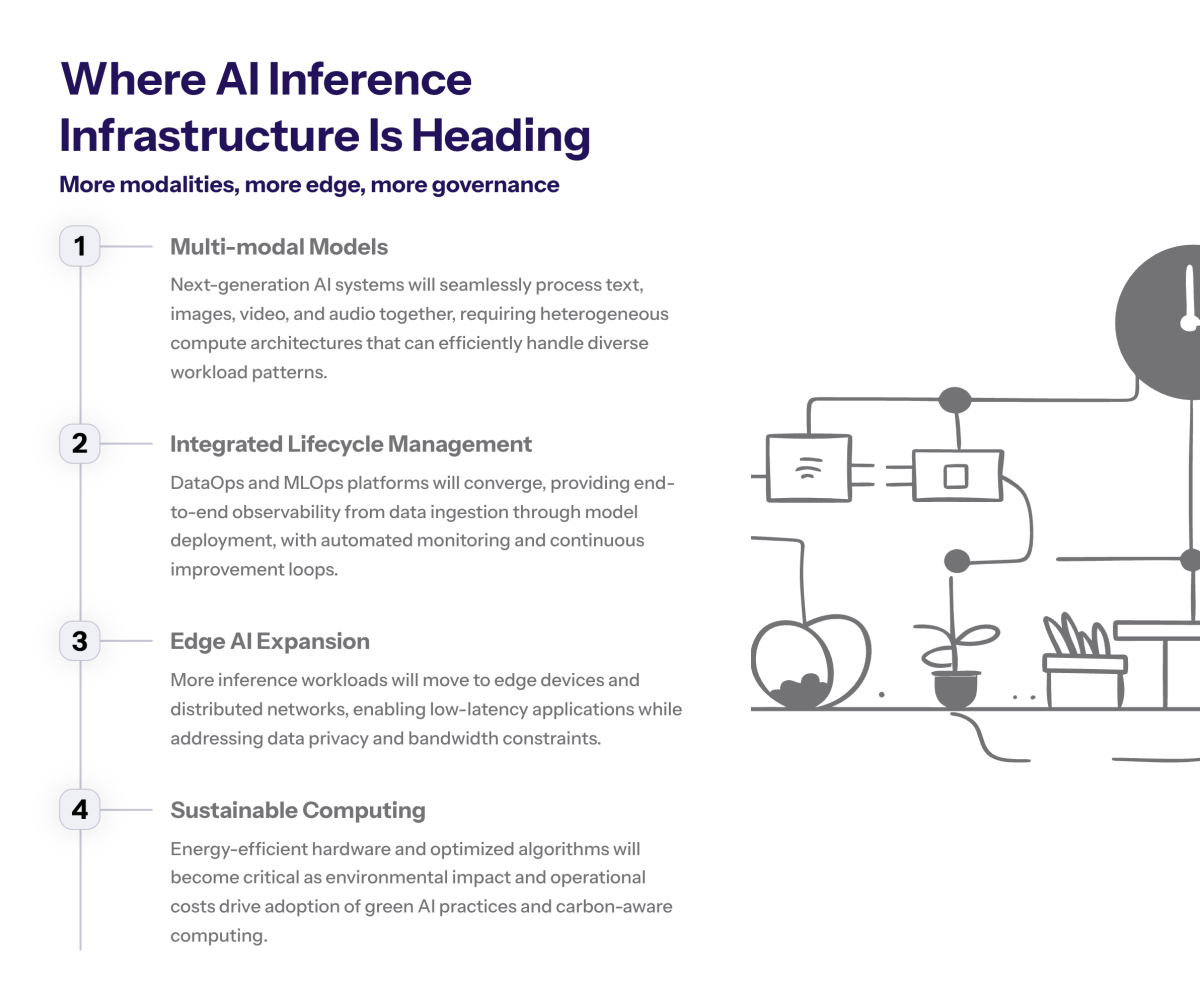

The AI inference infrastructure landscape will continue to evolve with advances in hardware, software orchestration, and cloud services. Enterprises should prepare for:

Increased adoption of multi-modal AI models requiring heterogeneous compute pipelines.

Greater emphasis on AI lifecycle management with integrated DataOps, observability, and governance.

Expansion of edge AI infrastructure to support real-time, low-latency applications.

Growing importance of sustainability and energy-efficient AI computing.

Technology leaders must adopt flexible, secure, and cost-effective AI infrastructure strategies to maintain competitive advantage in this dynamic environment.

Conclusion

Top AI inference infrastructure solutions are foundational to scaling artificial intelligence across enterprises. By leveraging cloud platforms, specialized hardware, and integrated AI services, organizations can optimize model performance, manage costs, and ensure compliance. Navigating the complexities of AI infrastructure requires strategic foresight and operational agility to harness AI’s full potential.
