Create Your Own Local AI Assistant for Enhanced Privacy

Artificial Intelligence (AI) has reached a stage where individuals no longer need to depend solely on big tech ecosystems for smart assistants. Instead, local AI assistants allow you to run advanced AI models directly on your own devices, ensuring enhanced privacy and greater control over your data.

Unlike cloud-based assistants that constantly send information to remote servers, a private AI assistant built with local models processes everything on your own machine, often fully offline. This setup greatly reduces the risk of data leaks, removes dependence on external servers, and keeps your conversations, queries, and files under your control.

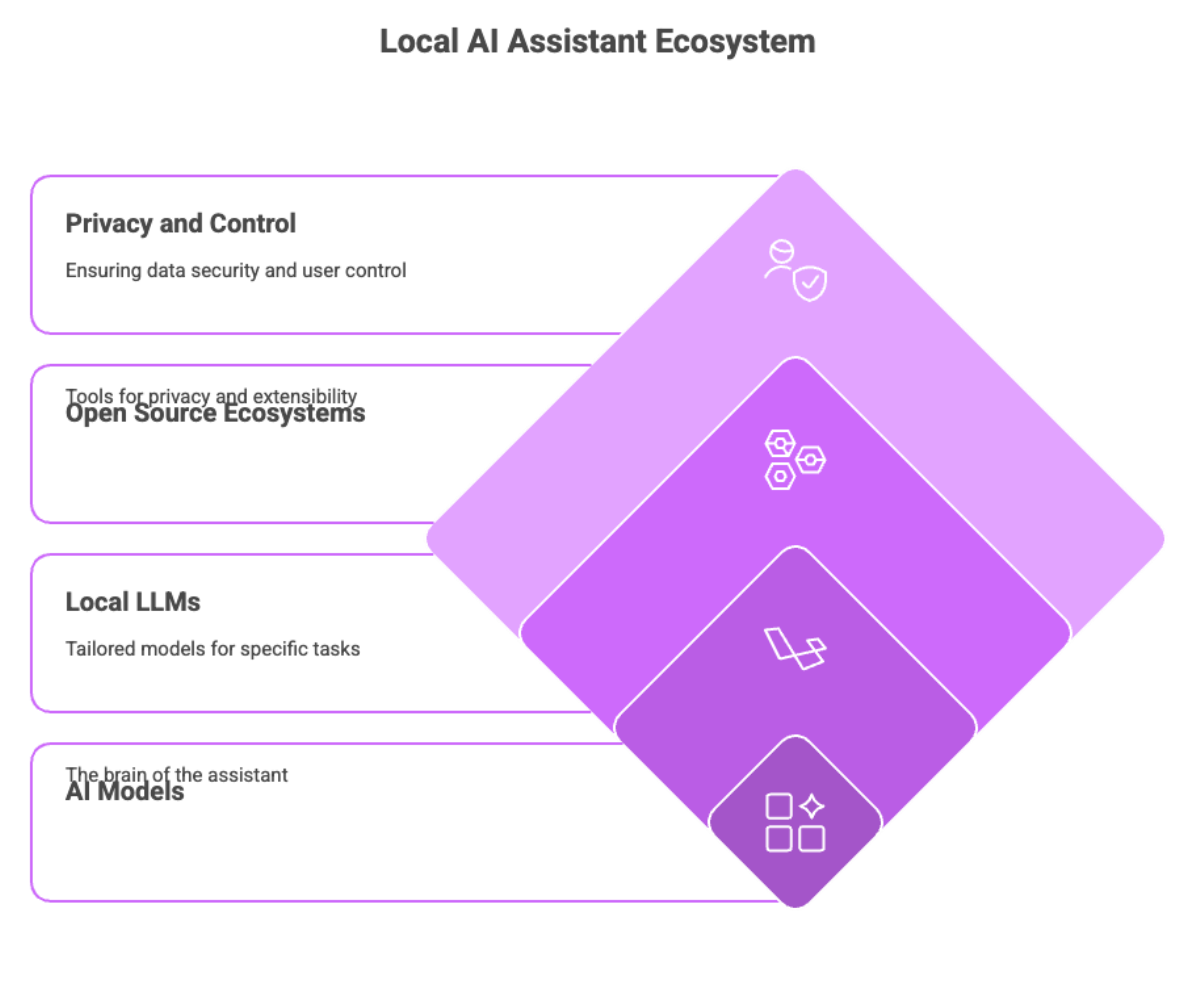

Some foundational points:

AI models act as the brain of your assistant, powering features like natural language understanding, task automation, or even image generation.

Local LLMs (large language models) can be tailored and fine-tuned for specific use cases such as summarization or semantic search, and paired with companion models for tasks like speech recognition.

Open source ecosystems provide tools like Leon (a well-known open source personal assistant) and Nextcloud Assistant, which combine privacy with extensibility.

For developers and privacy-conscious users, the opportunity to create a local assistant is a true game changer.

Benefits of Local Models

The value of local models extends beyond data control. Let’s unpack why building your own local AI assistant outshines traditional cloud-based options:

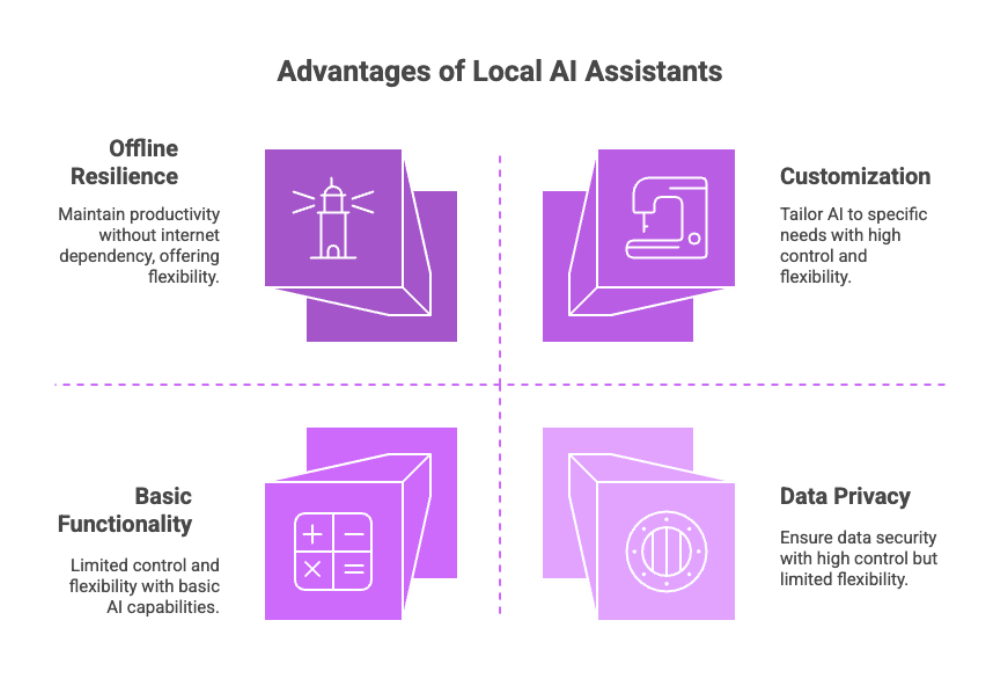

Data Privacy

All processing happens on your local machine, minimizing the chances of data leaks or exposure.

This is essential for handling sensitive data, whether personal (health, finances) or professional (client contracts, confidential projects).

Cost Control

Running models locally avoids recurring cloud costs.

With an optimized local GPU setup, smaller yet capable models can handle many tasks that would otherwise require expensive cloud instances.

Flexibility and Customization

A private AI assistant can be tuned to your workflow. Want it to manage tasks, generate emails, or organize research? You decide.

Open source frameworks allow you to extend functionality through plugins or community-built extensions.

Offline and Resilient

No constant internet connection is needed: once the models are downloaded, your assistant can run fully offline, unlike Alexa or Google Assistant.

This is especially useful in environments with limited connectivity, ensuring you maintain productivity.

Integration Potential

Combine your assistant with apps, scripts, or local tools. For example, link it with Nextcloud for chat and call summaries, or integrate with local databases for context-aware responses.
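To make the local-database idea concrete, here is a minimal Python sketch using the standard library’s `sqlite3` module. The table layout and the helper names (`build_store`, `find_context`, `build_prompt`) are hypothetical. The keyword `LIKE` match is a simple stand-in for real semantic search, and the resulting prompt would then be passed to whichever local model you run.

```python
import sqlite3


def build_store(notes: list[str], path: str = ":memory:") -> sqlite3.Connection:
    """Create a notes table; pass a file path instead of ":memory:" to persist it."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS notes (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
    conn.executemany("INSERT INTO notes (body) VALUES (?)", [(n,) for n in notes])
    conn.commit()
    return conn


def find_context(conn: sqlite3.Connection, keyword: str, limit: int = 3) -> list[str]:
    """Return up to `limit` notes containing `keyword` (LIKE is case-insensitive for ASCII)."""
    rows = conn.execute(
        "SELECT body FROM notes WHERE body LIKE ? ORDER BY id LIMIT ?",
        (f"%{keyword}%", limit),
    )
    return [body for (body,) in rows]


def build_prompt(question: str, context: list[str]) -> str:
    """Prepend the retrieved notes so the local model can answer from your own data."""
    lines = [f"- {c}" for c in context]
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"
```

For example, `build_prompt("What is pending with ACME?", find_context(conn, "acme"))` produces a prompt that carries only the matching notes. Nothing leaves the machine until you decide where to send it.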

Future-Proofing

With new openly available models such as LLaMA and Mistral continually emerging, the ecosystem will keep expanding, giving you access to steadily improving capabilities.

In short: local AI assistants are not just about privacy; they’re about control, savings, and freedom.

Building a Private AI Assistant

Now that we understand the benefits of local models, how do you actually create your own private AI assistant?

Step 1: Define Your Goals

Do you want an assistant to:

Handle speech commands?

Summarize and reword documents?

Assist with coding and development workflows?

Generate visuals through image generation?

Your overall design and architecture will differ depending on the scope.

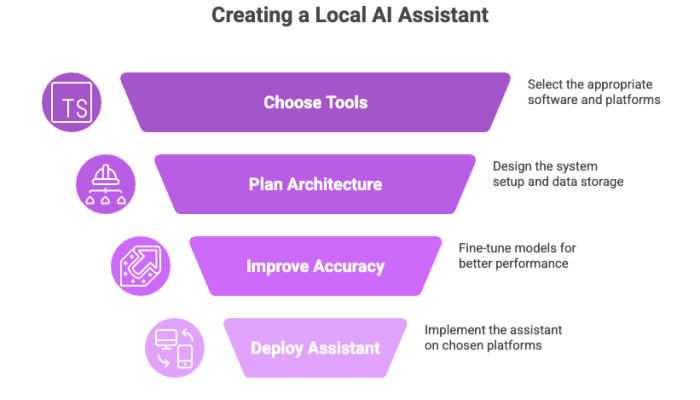

Step 2: Choose Your Foundation Tools

Leon: An open source personal assistant with a Leon CLI npm package, making it accessible for developers to install and extend.

Nextcloud Assistant: Perfect if you’re already using Nextcloud for file management or collaboration.

Ollama: Focused on running models locally, great for experimenting with multiple models in one ecosystem.

LM Studio: A GUI-based solution for working with local LLMs, ideal for non-technical users.
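To illustrate how a script can talk to one of these tools, the sketch below sends a prompt to Ollama’s local HTTP API using only Python’s standard library. It assumes Ollama is running on its default port (11434) and that a model named `llama3` has already been pulled. Adjust both assumptions to match your own setup.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False the server replies with one JSON object; "response" holds the text.
    return body["response"]
```

Calling `ask("Summarize this note: ...")` returns the model’s answer as a plain string. Setting `"stream": False` gives up token-by-token output in exchange for a single, easy-to-parse reply. The request never leaves `localhost`.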

Step 3: Plan Your Architecture

Local Server Setup: Decide whether to host it on your Linux, Windows, or Mac system.

Data Storage: Keep confidential data on encrypted drives.

APIs & Integrations: Connect with external cloud services only when necessary, such as for image hosting or optional collaborative features.

Step 4: Improve Accuracy

Improve your assistant’s accuracy with:

Chunking and Embedding: Split long chat logs or documents into smaller chunks and convert them to embeddings for more accurate semantic search.

Adding Your Context: Index personal documents or project files (or fine-tune on them) so the assistant understands your domain.

Response Optimization: Test and refine prompts and responses to minimize irrelevant outputs.
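The chunking and retrieval step can be sketched in a few lines of Python. This is a dependency-free illustration. Here `embed` is a bag-of-words counter, which matches overlapping words rather than meaning, so a real assistant would swap it for an actual embedding model served locally. The chunk size and overlap defaults are arbitrary placeholders.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last window already reaches the end
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

Overlapping windows keep a sentence that straddles a chunk boundary intact in at least one chunk. Ranking by cosine similarity then surfaces the passages closest to the query.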

Step 5: Deployment

Package the assistant with lightweight container setups such as Docker; cloud orchestration tools like AWS Copilot only become relevant if you later deploy to cloud infrastructure. Containers make it easier to manage running LLMs and to scale if you ever want to expand to multiple devices.

At this stage, you’ll have a functional local AI assistant.

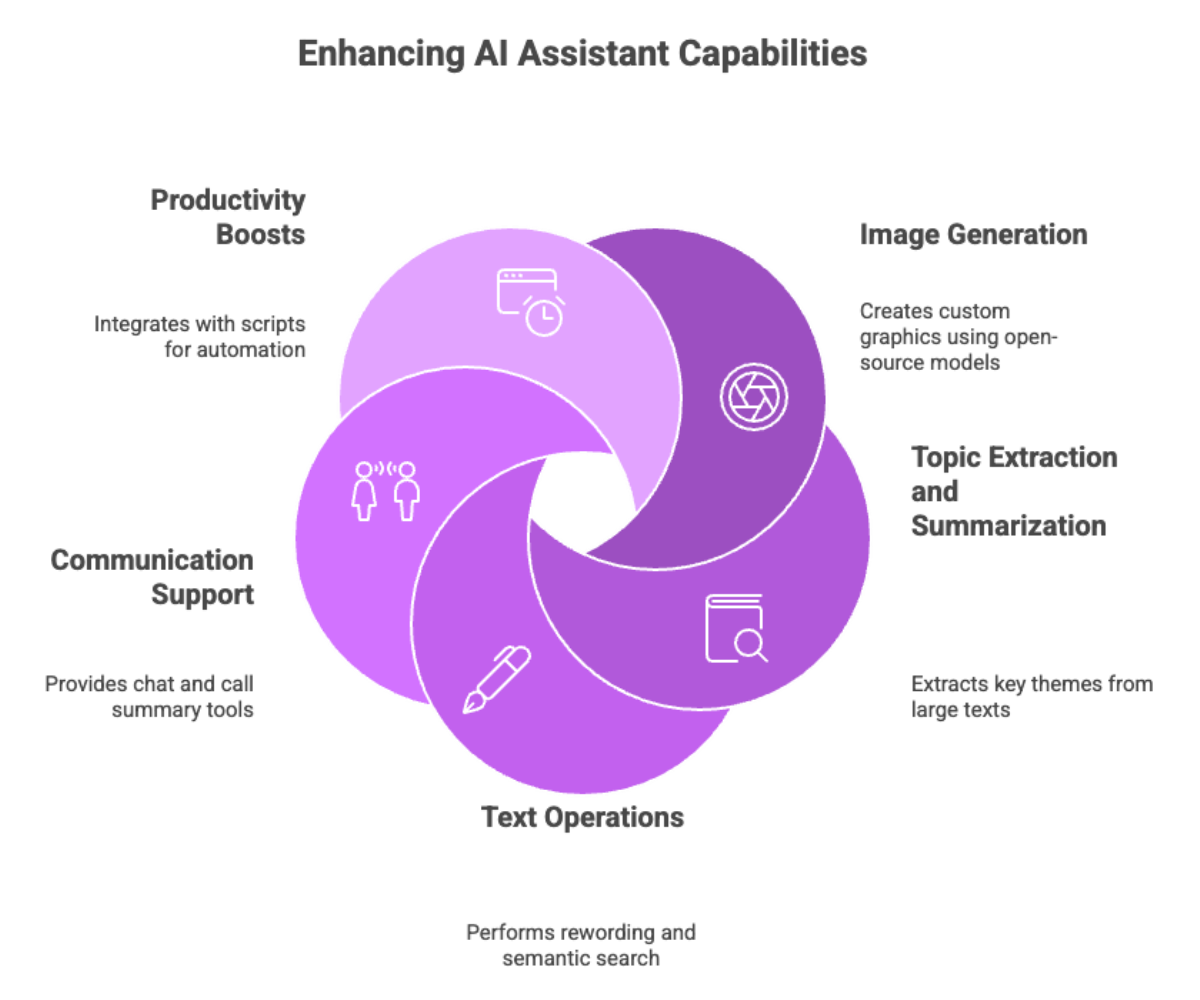

Advanced Features and Image Generation

A basic private ai assistant can already handle language tasks, but the game changer is unlocking advanced features.

Image Generation

Using open source models like Stable Diffusion, you can create custom graphics or illustrations directly on your machine.

Instead of relying on external platforms, your assistant becomes a vision-powered tool.

Example: ask it to generate visuals for a presentation, and it produces them on your own hardware without transmitting sensitive data online.

Topic Extraction and Summarization

Extract key themes from large text files.

Summarize meeting notes, chat logs, or documentation to speed up your learning process.
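A crude but fully local way to pull out key themes is to count non-stopword terms, as in the Python sketch below. This term-frequency approach is a swapped-in baseline, not what an LLM does. A local model would usually produce better summaries, but a frequency count is cheap and easy to verify. The stopword list is a small, hypothetical sample.

```python
import re
from collections import Counter

# Deliberately tiny stopword list; a real setup would use a fuller list or a model.
STOPWORDS = {
    "the", "a", "an", "and", "or", "to", "of", "in", "on", "for", "is", "are",
    "we", "it", "this", "that", "with", "be", "will", "at", "by", "from", "as",
}


def top_topics(text: str, n: int = 3) -> list[str]:
    """Return the n most frequent non-stopword terms as rough topic labels."""
    words = re.findall(r"[a-z][a-z'-]+", text.lower())  # words of two or more letters
    counts = Counter(w for w in words if w not in STOPWORDS)
    return [word for word, _ in counts.most_common(n)]
```

Running `top_topics` over a folder of meeting notes gives a quick index of recurring subjects. Those subjects can then be handed to the local model for a proper summary.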

Text Operations

Rewording, summarization, and semantic search across files.

Example: your assistant could scan project folders, then pull semantically related snippets of information on demand.

Communication Support

Nextcloud Assistant already provides chat and call summary tools.

With speech recognition, your assistant can listen, transcribe, and even provide response drafts during live discussions.

Productivity Boosts

Integrate with Python scripts or code editors to run automation.

Create “skills” for your assistant to manage schedules, emails, or development tasks.
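One common way to structure “skills” is a registry that maps trigger words to handler functions. The Python sketch below shows that pattern. It is a hypothetical illustration, not Leon’s actual skill API, and the keyword matching stands in for the intent classification a real assistant would use.

```python
from typing import Callable

# Hypothetical registry: maps a trigger keyword to a handler function.
SKILLS: dict[str, Callable[[str], str]] = {}


def skill(trigger: str):
    """Decorator that registers a handler under a trigger keyword."""
    def register(func: Callable[[str], str]) -> Callable[[str], str]:
        SKILLS[trigger] = func
        return func
    return register


@skill("schedule")
def add_event(utterance: str) -> str:
    # A real skill would write to a local calendar file or database.
    return f"Scheduled: {utterance}"


@skill("email")
def draft_email(utterance: str) -> str:
    # A real skill would pass the request to a local model for drafting.
    return f"Draft created for: {utterance}"


def dispatch(utterance: str) -> str:
    """Route an utterance to the first registered skill whose trigger appears in it."""
    lowered = utterance.lower()
    for trigger, handler in SKILLS.items():
        if trigger in lowered:
            return handler(utterance)
    return "No matching skill; falling back to the general chat model."
```

New capabilities then become one decorated function each. Anything that matches no skill falls through to ordinary chat.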

By layering these AI capabilities, your local AI assistant becomes more than just a chatbot: it becomes a fully customizable assistant integrated with your daily workflow.

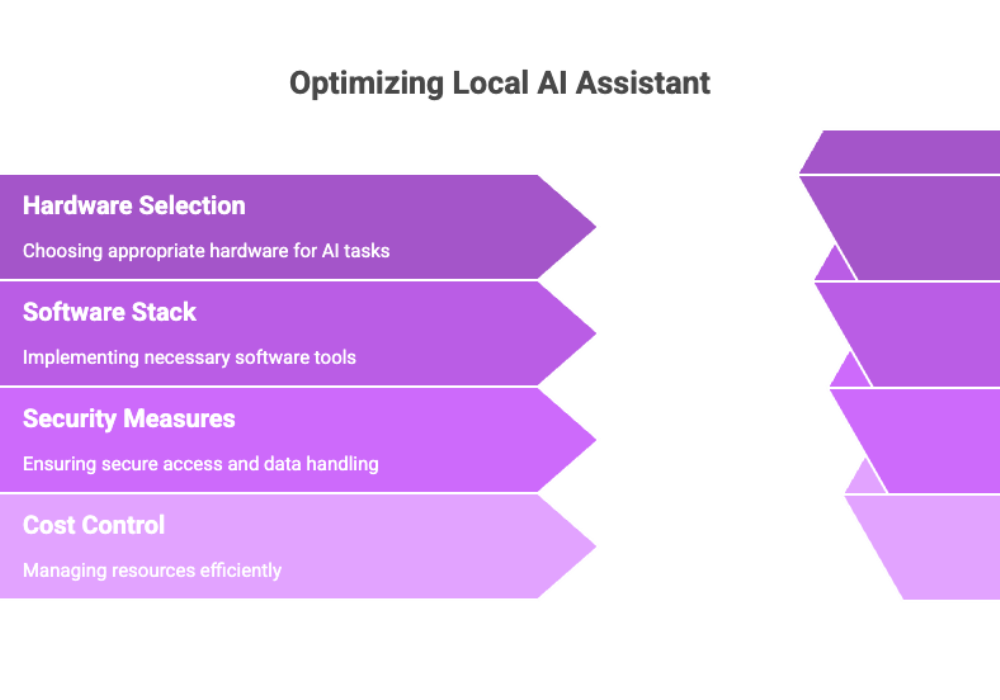

Running Your Local AI Assistant

Once your local AI assistant is built, the next challenge is running models locally in a way that’s efficient, secure, and cost-aware.

Hardware Considerations

GPU-Powered Systems: If you want high-speed image generation or to run large local LLMs, a GPU with significant VRAM is essential. NVIDIA and AMD GPUs both work, but NVIDIA’s CUDA ecosystem still has more tooling support.

CPU-Only Alternatives: Smaller AI models can run on CPUs alone, though at slower speeds. Tools like llama.cpp and Ollama are optimized for CPU inference.

Specialized Hardware: We’re seeing an emergence of edge hardware optimized for AI tasks, like Apple’s Neural Engine or ARM-based AI accelerators.
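A quick way to judge whether a model fits your hardware is to estimate the memory its weights need: parameters × bits per weight ÷ 8 gives bytes. For example, a 7-billion-parameter model needs about 14 GB at 16-bit precision but only about 3.5 GB when quantized to 4 bits. The helper below is a rough sketch that deliberately ignores the KV cache, activations, and runtime overhead, so treat its output as a lower bound.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage is higher.
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


for params, bits in [(7, 16), (7, 4), (13, 4), (70, 4)]:
    print(f"{params}B @ {bits}-bit = {weight_memory_gb(params, bits):.1f} GB")
```

Comparing these numbers with your GPU’s VRAM (or system RAM for CPU-only setups) tells you quickly whether a model is worth downloading.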

Software Stack

Running a private AI assistant often requires:

Containerization: Docker ensures you can manage multiple assistants or services without conflicts.

Scheduling Tools: Local cron jobs (or AWS Systems Manager, for cloud-hosted machines) can shut down heavy GPU workloads when they are not in use, reducing power consumption.

Security Layers: Use encryption, secure APIs, and role-based access to prevent unauthorized use of your assistant.
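The “shut down when idle” idea from the scheduling item can be sketched as a small idle timer in Python. The `unload` callback is a hypothetical hook for whatever actually frees the GPU in your setup, such as stopping a container or unloading a model. The 10-minute default is an arbitrary choice. Because the state lives in memory, `check()` is meant to be called periodically from the assistant’s own process; a cron job would need to store the last-use time on disk instead.

```python
import time
from typing import Callable


class IdleUnloader:
    """Calls `unload` once no request has been recorded for `idle_seconds`."""

    def __init__(self, unload: Callable[[], None], idle_seconds: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.unload = unload              # hypothetical hook that frees the GPU
        self.idle_seconds = idle_seconds
        self.clock = clock                # injectable so the logic is testable
        self.last_used = clock()
        self.loaded = True

    def touch(self) -> None:
        """Record activity; the caller is responsible for (re)loading the model."""
        self.last_used = self.clock()
        self.loaded = True

    def check(self) -> bool:
        """Unload if idle for too long. Returns True only when an unload happened."""
        if self.loaded and self.clock() - self.last_used >= self.idle_seconds:
            self.unload()
            self.loaded = False
            return True
        return False
```

Calling `touch()` on every request and `check()` every minute or so keeps heavy models in memory only while they are actually being used.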

Cost Control

Even though you’re not paying recurring cloud service fees, local compute still costs in terms of power draw and hardware wear. Best practices include:

Running smaller models for routine tasks.

Spinning up powerful models only when required (e.g., image generation or deep learning fine-tuning).

Using hybrid setups where local AI assistants process sensitive information locally but offload generic, non-sensitive tasks to optional cloud AI services.
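These three practices can be combined into a simple routing policy. The Python sketch below is one possible policy under stated assumptions. The model names are placeholders, and the set of “heavy” tasks is hypothetical. Cloud use is opt-in and never applies to sensitive data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    model: str   # placeholder name; substitute a model you actually have installed
    local: bool


# Hypothetical backends used only for illustration.
SMALL_LOCAL = Route("small-local-model", local=True)
LARGE_LOCAL = Route("large-local-model", local=True)
CLOUD = Route("cloud-model", local=False)

HEAVY_TASKS = {"image_generation", "fine_tuning", "long_report"}


def choose_route(task: str, sensitive: bool, allow_cloud: bool = False) -> Route:
    """Small local model by default, large local model for heavy tasks,
    and the cloud only when explicitly allowed and the data is not sensitive."""
    if task in HEAVY_TASKS:
        if allow_cloud and not sensitive:
            return CLOUD
        return LARGE_LOCAL
    return SMALL_LOCAL
```

Keeping the decision in one function makes the privacy rule easy to audit: a single `sensitive=True` flag guarantees the request stays on local hardware.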

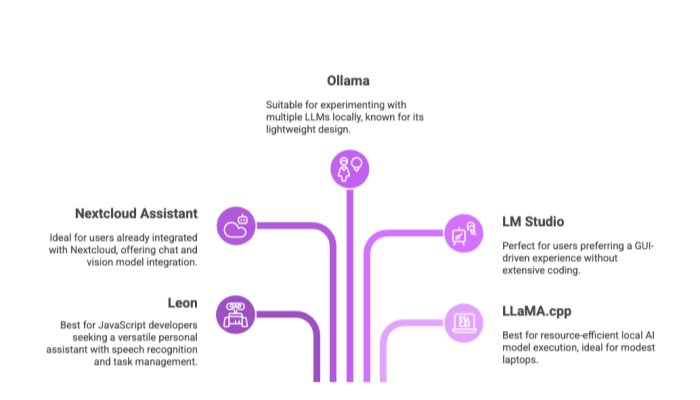

Open Source Solutions

The beauty of the open source ecosystem is that you’re not reinventing the wheel. Several tools and frameworks already exist to bootstrap your local AI assistant.

Leon

A true open source personal assistant, built in JavaScript with a Leon CLI npm package for installation.

Provides speech recognition, task management, and extensible skills.

Can be installed on Linux, Mac, or Windows, making it accessible for a wide range of developers.

Nextcloud Assistant

Ideal if you’re already using Nextcloud for files and collaboration.

Provides chat, speech, and call summaries.

Can integrate with vision models for image generation and annotation.

Ollama

Focused on running LLMs locally; supports multiple models in one environment.

Lightweight and developer-friendly, excellent for experimenting with different models.

LM Studio

GUI-driven, with drag-and-drop functionality.

Best for users who want local LLM capabilities without heavy coding knowledge.

Supports semantic search across local chat data and documents.

llama.cpp & Beyond

Minimalist C/C++ implementation for running AI models locally.

Very resource-efficient, designed to run LLMs even on modest laptops.

Serves as proof that local AI assistants don’t need expensive servers to operate.

Why Open Source Wins

Transparency: You can review the code for security.

Flexibility: Build extensions for industry-specific workflows.

Community Support: Tutorials, forums, and documentation evolve rapidly.

Setting Up Your Local AI Assistant

Building is one step, but setup is the make-or-break phase.

Step-by-Step Setup Flow

Install Dependencies

Use package managers such as npm (for example, to install the Leon CLI) or Python’s pip for dependencies.

Ensure you have the right GPU drivers installed.
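Before installing anything else, a short preflight check can confirm that the expected tools are on your `PATH`. The tool list in this Python sketch is an assumption based on the stack described here: Node.js and npm for the Leon CLI, Python for pip-installed tools, Ollama, and `nvidia-smi`, which ships with NVIDIA’s drivers. On Windows, the Python executable is often named `python` rather than `python3`.

```python
import shutil

# Assumed tool list; adjust it to match your own stack.
REQUIRED_TOOLS = {
    "node": "Node.js runtime (needed for the Leon CLI)",
    "npm": "Node package manager",
    "python3": "Python interpreter for pip-installed tools",
    "ollama": "Ollama model runner",
    "nvidia-smi": "NVIDIA driver utility (only if you use an NVIDIA GPU)",
}


def preflight(tools: dict[str, str] = REQUIRED_TOOLS) -> dict[str, bool]:
    """Report which command-line tools are available on PATH."""
    return {name: shutil.which(name) is not None for name in tools}


def report(results: dict[str, bool]) -> str:
    """Format the results as one line per tool."""
    return "\n".join(f"[{'ok' if found else 'missing'}] {name}" for name, found in results.items())
```

`print(report(preflight()))` lists each tool as `ok` or `missing`, so you can catch a missing driver before a model fails to load.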

Configure Your Assistant

Adjust model size, context length, and other settings to fit your hardware’s memory and performance limits.

Apply advanced configurations like embedding custom chat data for more personalized context.

Integrate Tools and Skills

Link with productivity apps, local databases, or even Python automation scripts.

Add speech input and chat outputs to create a conversational experience.

Test Thoroughly

Run sample queries and monitor response accuracy.

Refine with fine-tuned models or smaller models depending on the task.
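A minimal regression harness makes “monitor response accuracy” repeatable. Keep a few sample queries along with words a correct answer should contain, and rerun them after every model or prompt change. The cases and the keyword check in this Python sketch are hypothetical and crude. `assistant` can be any function that takes a prompt and returns the model’s text.

```python
from typing import Callable

# Hypothetical test cases: a query plus words a good answer should mention.
CASES = [
    ("When is the ACME invoice due?", ["friday"]),
    ("Which GPU toolkit does the setup use?", ["cuda"]),
]


def evaluate(assistant: Callable[[str], str], cases=CASES) -> float:
    """Return the fraction of cases whose answer contains every expected keyword."""
    if not cases:
        return 0.0
    passed = 0
    for query, expected in cases:
        answer = assistant(query).lower()
        if all(word in answer for word in expected):
            passed += 1
        else:
            print(f"FAIL: {query!r} -> {answer[:80]!r}")
    return passed / len(cases)
```

Tracking this score across runs shows whether swapping in a smaller or fine-tuned model actually hurt the answers you care about.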

Secure and Maintain

Apply encryption to all data stored.

Keep models and software updated to prevent vulnerabilities.

Avoiding Pitfalls

Don’t underestimate system performance requirements; heavy models require strong hardware.

Avoid storing sensitive data unencrypted.

Balance between offline functionality and optional cloud connectivity when necessary.

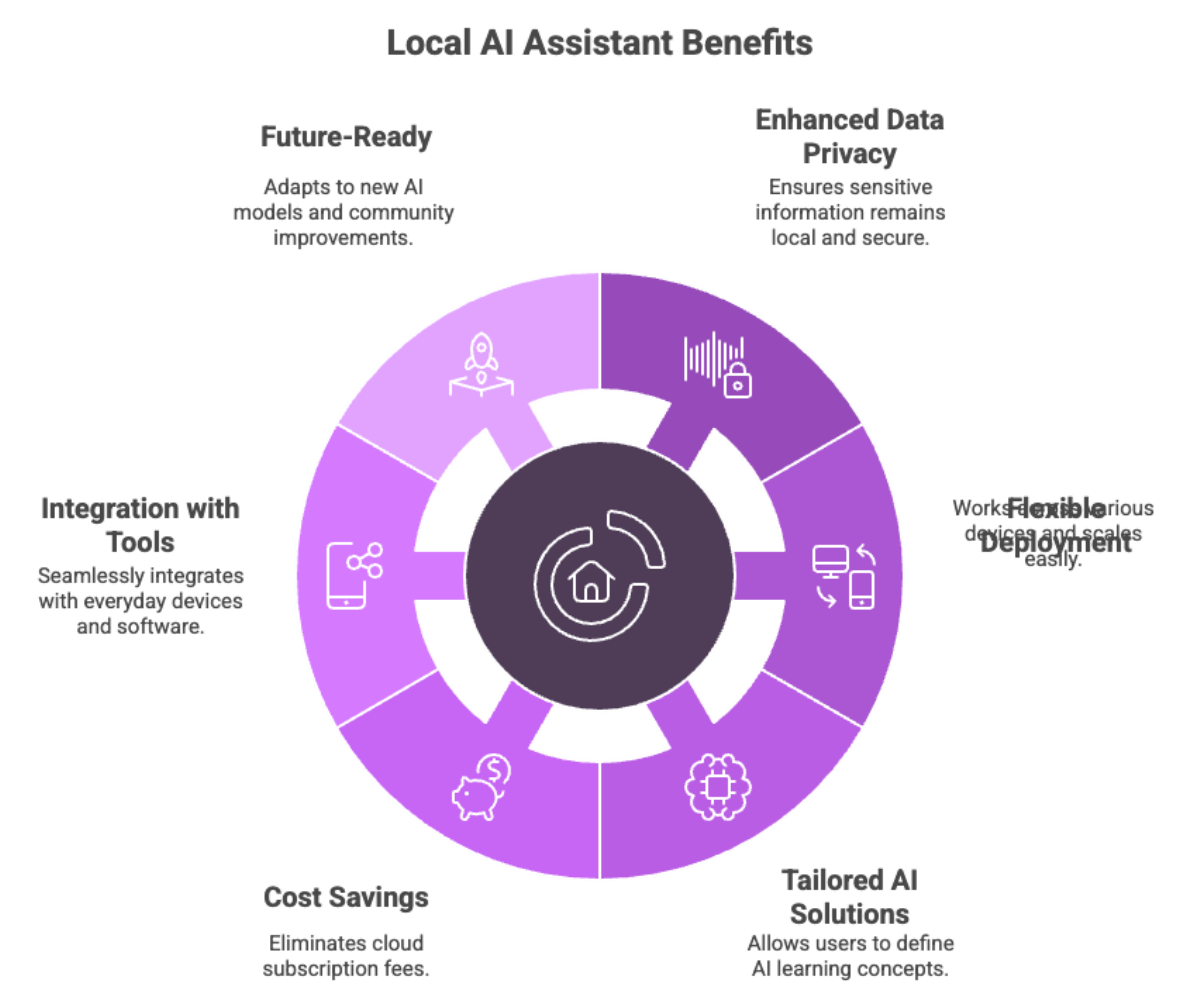

Why Local AI Assistants Are a Game Changer

Let’s tie it all together.

Enhanced Data Privacy

All sensitive information stays local.

No data transmitted to unknown servers.

Flexible Deployment

Works on desktops, laptops, or even wearable devices.

Can scale across multiple devices in a home or office.

Tailored AI Solutions

You decide which AI capabilities and knowledge your assistant has.

Perfect for business operations needing control over workflows.

Cost Savings

No monthly subscription for cloud services.

Only hardware and electricity costs to manage.

Integration with Everyday Tools

Use it for smart homes, security cameras, or industrial monitoring.

Add chat, task scheduling, and document parsing to daily work.

Future-Ready

As new models emerge, you can install them directly.

Open source communities continuously improve assistants like Leon and Nextcloud Assistant.

Truly a Game Changer

The combination of control, complete privacy, and powerful models makes local AI assistants stand out as a revolutionary step in AI adoption.

For both personal use and enterprise, the shift toward decentralized AI represents a paradigm shift away from dependence on big tech.

Advanced Features and Expanding Capabilities

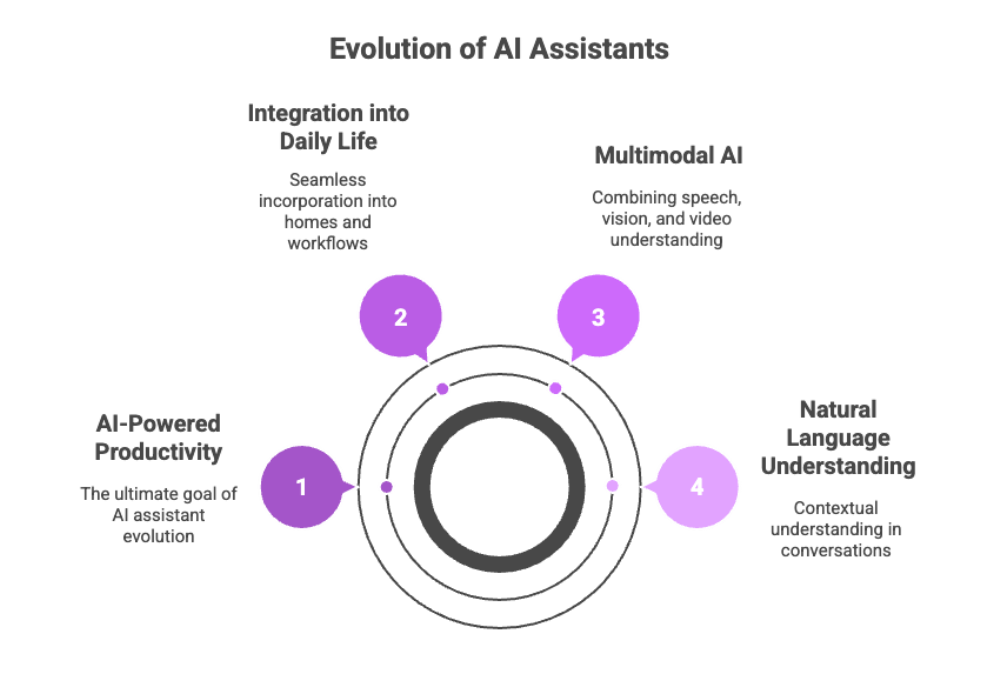

A local AI assistant is not just about responding to queries; it’s evolving into a game changer for AI-powered personal and professional productivity.

Natural Language Understanding at Scale

With advances in machine learning and deep learning, assistants are becoming better at understanding context in conversations.

Semantic search capabilities mean your assistant can pull information from chat data, files, or notes without requiring exact keywords.

Over time, incremental learning could let assistants adapt to your unique communication style.

Beyond Text: Multimodal AI

Image generation is already becoming common, letting you create images on demand for projects, designs, or presentations.

Upcoming powerful models will combine speech, vision, and even video understanding.

This multimodal capacity means a local AI assistant could summarize a meeting transcript, generate illustrative slides, and draft follow-up emails, all offline and without data leaks.

Integration into Daily Life

In smart homes, assistants can control appliances securely without reliance on cloud servers.

Wearable devices will provide real-time monitoring of health signals, feeding data directly into your assistant while respecting sensitive information.

Professionals will integrate assistants into workflows, from software development to research, opening new avenues for productivity.

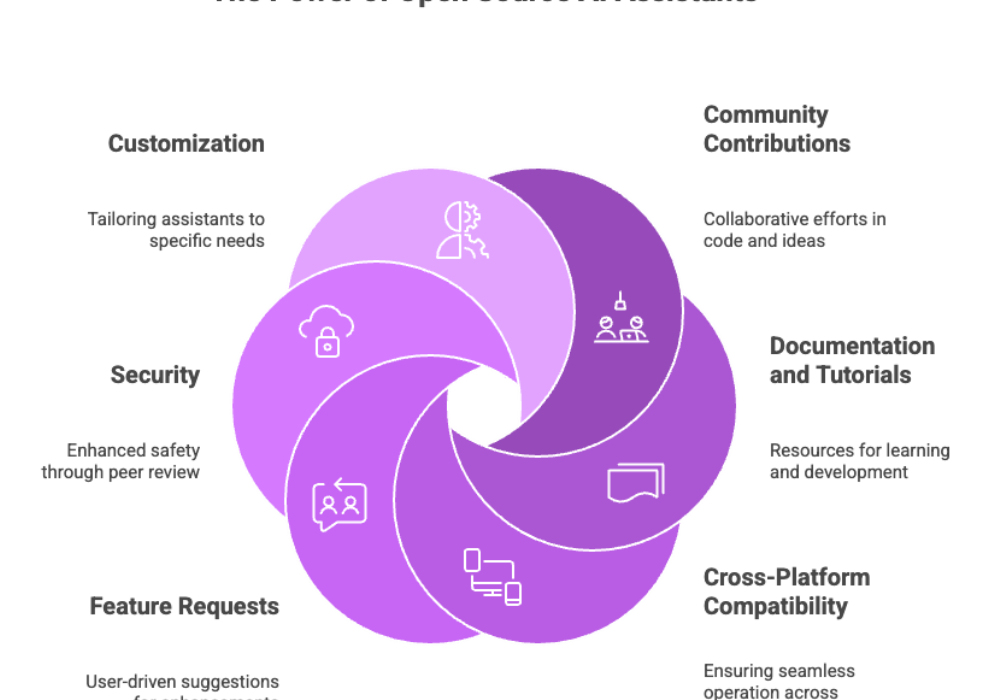

Open Source and Community Collaboration

The open source movement is central to the rise of private AI assistants.

Projects like Leon and Nextcloud Assistant demonstrate the potential of open source personal assistants, where anyone can contribute code, suggest extensions, or refine skills.

Communities publish documentation, tutorials, and ideas for new features, creating a cycle of innovation far beyond what closed, cloud-based systems allow.

Developers are working on cross-platform compatibility, ensuring assistants can run seamlessly on Linux, Mac, and Windows systems.

Why Community-Driven Growth Matters

Encourages feature requests grounded in real-world usage.

Increases security through peer review of the code (reducing risks of hidden backdoors).

Fosters fully customizable assistants, from simple task bots to complex enterprise AI servers.

Future Outlook: Where Local AI is Heading

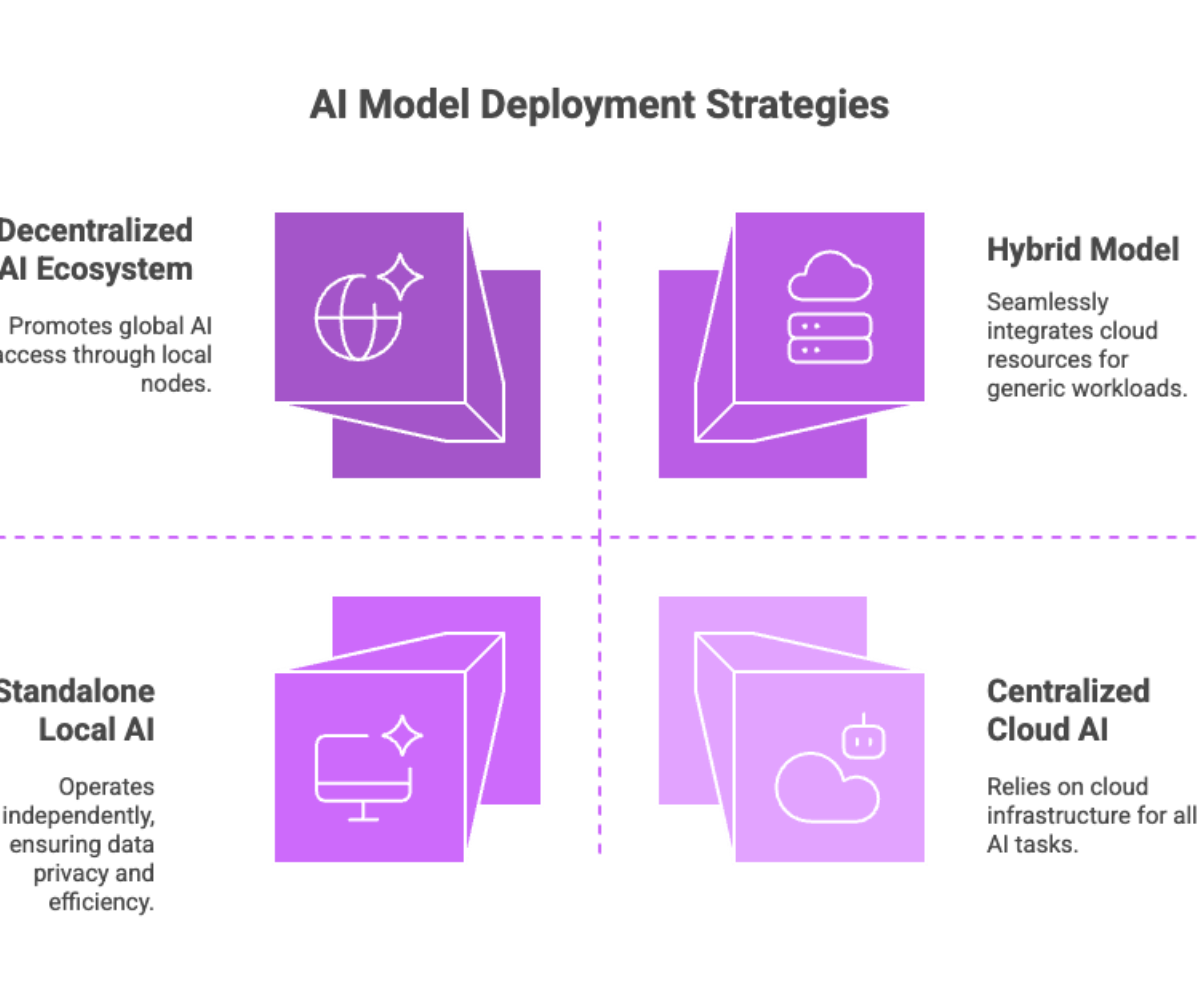

We’re entering a stage where local models are bridging the gap with cloud-based AI services.

Smaller but Smarter

Optimized AI models are becoming compact enough to run on consumer hardware while approaching the capabilities of cloud AI services.

This reduces bandwidth usage, cuts costs, and enhances data privacy by keeping everything processed locally.

Integration with Cloud (Hybrid Model)

While many will prefer offline operation, hybrid setups will emerge:

Sensitive information stays local.

Generic workloads (like large-scale training or heavy batch processing) may temporarily use cloud resources.

This offers the best of both worlds: cloud capacity when you need it, without compromising compliance with data sovereignty regulations.

The Decentralization Movement

As distributed AI systems evolve, local AI assistants will become nodes in a global, decentralized AI ecosystem.

Instead of one centralized cloud data center, thousands of personal assistants will share relevant information while respecting privacy.

This decentralized approach protects against monopolization by big tech companies and promotes fairer access to AI capabilities.

Why Local AI Assistants Are a True Game Changer

Let’s bring it home.

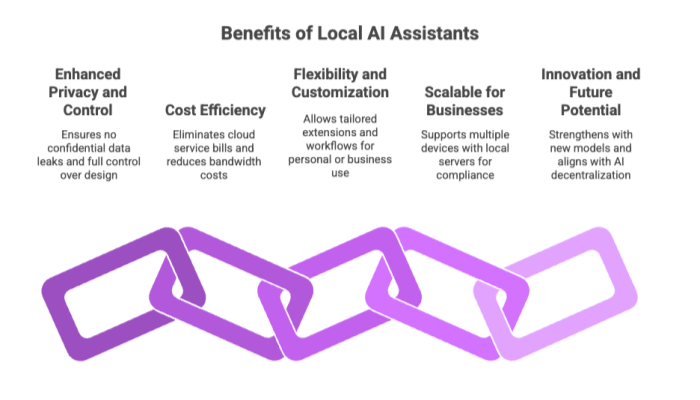

Enhanced Privacy and Control

Confidential data stays on your own hardware.

Full control over your assistant’s architecture and overall design.

Cost Efficiency

No recurring cloud services bills.

Reduced bandwidth costs thanks to processing data locally.

Flexibility and Customization

Ability to create extensions and workflows tailored to personal use or business operations.

Build assistants that understand your thinking, automate routine work to free up your time, or even act as project managers.

Scalable for Businesses

Companies can deploy multiple devices with a shared local server, ensuring compliance with data sovereignty regulations.

Support worker safety, quality control, and defect detection in industries without sending raw data to remote servers.

Innovation and Future Potential

With every new model, your assistant gets stronger.

The AI wave is shifting toward decentralization, making local assistants an inevitable part of the future of artificial intelligence.

Conclusion

Building a local AI assistant isn’t just a technical project; it’s an act of empowerment. By choosing open source solutions, managing your own models locally, and embracing local LLM frameworks, you gain:

Complete privacy and protection from data leaks.

Game-changing flexibility in how you run, customize, and scale your assistant.

A direct role in shaping the future of decentralized AI services.

Whether you’re using Leon, Nextcloud Assistant, or experimenting with multiple models in Ollama, the journey is the same: reclaiming control of your AI.

As the ecosystem of tools, AI capabilities, and extensions expands, local AI assistants will redefine what it means to have a digital companion. They won’t just answer queries; they’ll become integrated assistants in our projects, homes, and businesses, all while keeping sensitive information safe.

This is why local AI assistants aren’t just another step in AI’s evolution. They are the game changer that ensures the technology works for us: privately, securely, and on our own terms.