Local AI Models: Your Guide to Running Them Smoothly

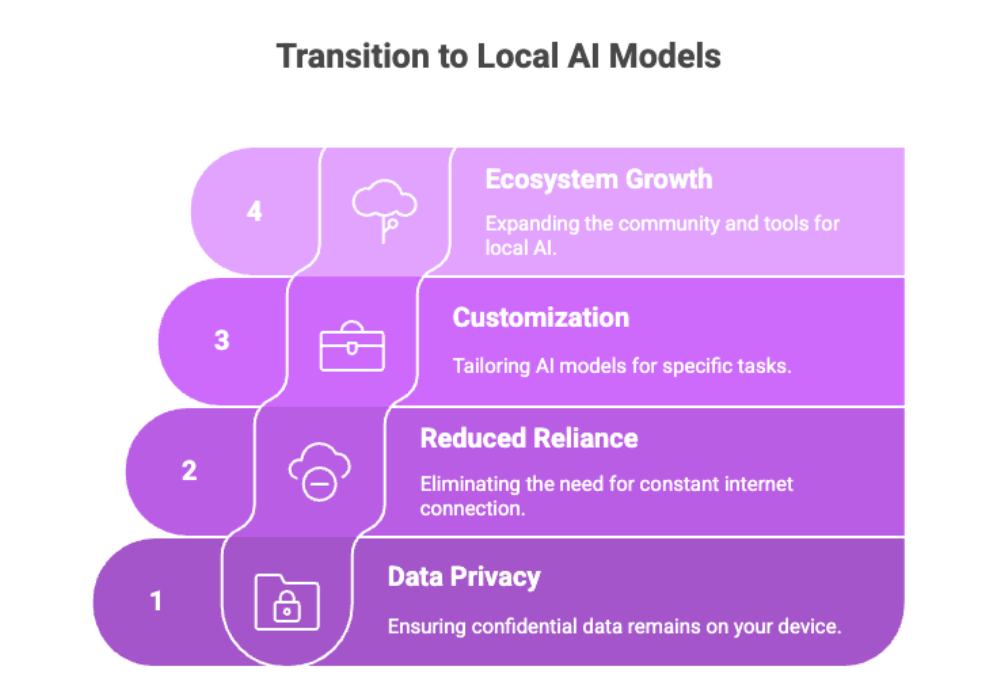

For years, most people accessed artificial intelligence through cloud services, relying on remote servers to handle the heavy lifting of training and inference. Today a major shift is underway toward local AI models. Instead of sending sensitive data to remote providers, you can run powerful AI models on your own machine and keep full control over your workflows.

This approach offers three major advantages:

Data privacy: Confidential data never leaves your device.

Reduced reliance on cloud services: No need for a constant internet connection.

Customization: Ability to train AI models or fine-tune existing ones for specific tasks.

The ecosystem is growing quickly. Ollama supports multiple models through a user-friendly interface and had already earned over 33.3k stars on GitHub at the time of writing. Other tools like LM Studio and llama.cpp are gaining adoption as well. For developers, researchers, and hobbyists, the opportunity to experiment with open source models and create fully customizable solutions has never been better.

In this guide, we’ll walk you through the benefits of local AI models, how to get started, the best AI tools, and what you need to run large language models efficiently on a local server or desktop.

Benefits of Local AI Models

Running AI models locally offers several advantages compared to traditional cloud services. Let’s explore the most impactful ones.

1. Privacy and Security

Complete privacy: Since all data is processed locally, sensitive data is never sent to third-party servers for inference.

Data privacy by design: Well suited to healthcare, finance, and research fields that handle confidential data.

2. Cost Savings

Cloud AI costs can add up quickly, especially when running AI models at scale. Running models locally eliminates recurring cloud charges; your main expenses are hardware and electricity.

3. Full Control

When you own the stack, you also own the process:

Run a wide range of AI models, subject only to their licenses.

Experiment with advanced configurations like quantization, pruning, or GPU offloading.

Decide whether to load a smaller, faster model or a larger, more capable one depending on your workflow.

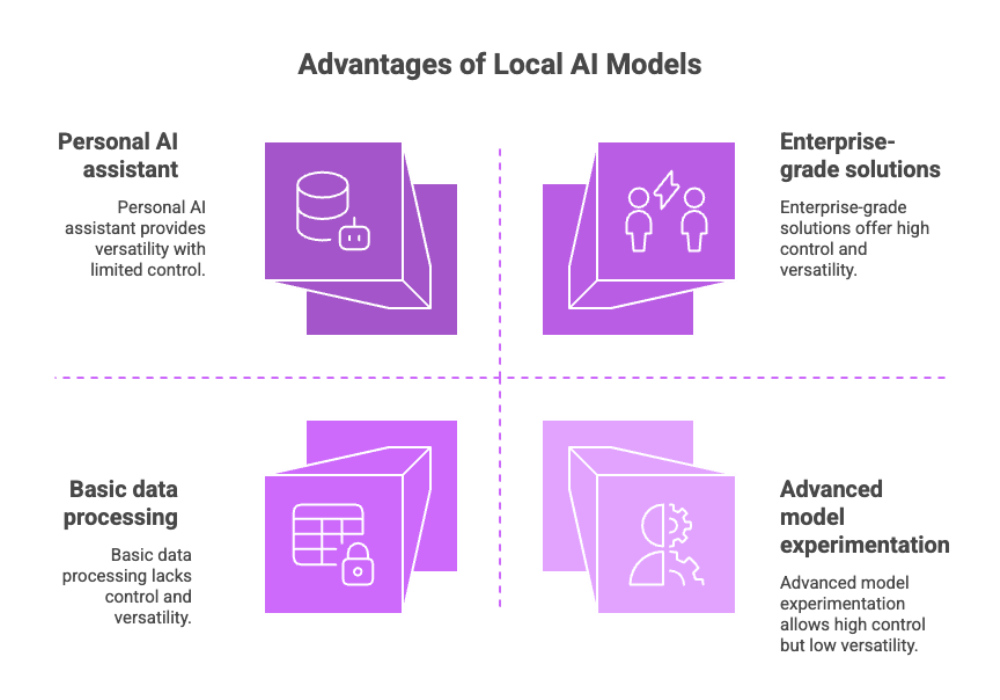

4. Versatility

Local AI isn’t limited to one type of application. You can:

Generate text.

Analyze chat data.

Perform semantic search.

Build new applications, or extend existing ones, with open source models.

For individuals, personal use might involve a local AI assistant. For companies, local models enable enterprise-grade solutions with complete privacy.

Getting Started with Local AI Models

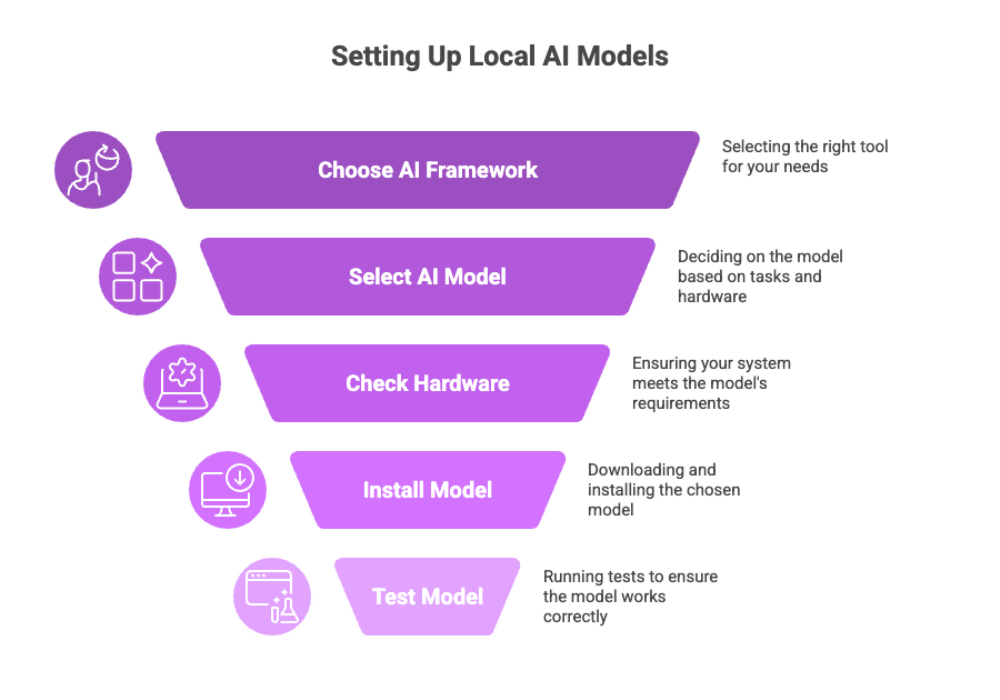

If you’re new to running AI models locally, the process might feel daunting. But modern AI tools have made it easier than ever to get started.

Step 1: Download the Right Tool

Choose a framework that suits your needs:

Ollama: Simple and privacy-focused, running models entirely on your machine.

LM Studio: Feature-rich, with a broad range of supported models.

llama.cpp: Powerful, flexible, and suited to those who want advanced configurations.

Step 2: Choose a Model

Factors to consider:

Model size: Larger models provide more capability but need stronger hardware.

Specific tasks: Some models are fine-tuned for language understanding, while others excel at image or code generation.

Different models: Test several models on the same prompts to compare results.
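When comparing several models, it helps to know which ones are already installed. The sketch below assumes Ollama is running on its default local address (`http://localhost:11434`) and reads its `/api/tags` endpoint, which lists locally available models; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: an Ollama server is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Return the sorted model names from an /api/tags JSON response."""
    return sorted(model["name"] for model in tags_response.get("models", []))


def fetch_installed_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Query the local Ollama server for its installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return model_names(json.load(resp))


# Usage (requires a running Ollama server):
# for name in fetch_installed_models():
#     print(name)
```

You can then send the same prompt to each listed model and compare the answers side by side.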

Step 3: Check Hardware Requirements

Running AI models locally demands resources. Your computer should have:

Sufficient storage for model files.

Adequate processing power (CPU or GPU).

Enough memory (RAM, or GPU VRAM when using a GPU) to hold the model you load.

If resources are limited, start with smaller models and scale up gradually.
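A rough way to check whether a model will fit is to multiply its parameter count by the bytes stored per weight. The sketch below does that arithmetic; the 1.2× overhead factor for the context cache and runtime buffers is an assumption, and real usage varies by tool, context length, and model architecture.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: float,
                             overhead: float = 1.2) -> float:
    """Rough memory estimate (decimal GB) for loading a model.

    Weight size = parameters * bits_per_weight / 8 bytes. The `overhead`
    multiplier is an assumed allowance for the context cache and runtime buffers.
    """
    weights_gb = params_billions * bits_per_weight / 8
    return weights_gb * overhead


# Compare a hypothetical 7-billion-parameter model at different precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{estimate_model_memory_gb(7, bits):.1f} GB")
```

The weights alone drop from 14 GB at 16-bit to 3.5 GB at 4-bit, which is why quantized models are the usual starting point on machines with limited memory.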

Step 4: Download and Install

Most AI tools provide clear documentation. Download and install the tool by following its installation steps, then download or load your chosen model files.

Step 5: Run and Test

Once installed, test the setup. Try running models for semantic search, text generation, or language translation. If results fall short, try a model fine-tuned for your task or adjust its generation settings.

By starting small and scaling, you’ll quickly gain confidence in running models locally without needing an external AI server.
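If you chose Ollama, a quick way to test it from code is its local REST API. The sketch below assumes the server is on its default port (11434) and that a model named `llama3` (a placeholder; use any model you have pulled) is available. It sends a non-streaming request to `/api/generate` using only the Python standard library; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: Ollama runs locally on its default port and the model
# passed in (e.g. the placeholder "llama3") has already been pulled.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["response"]


# Usage (requires a running Ollama server):
# print(generate("llama3", "Summarize the benefits of running AI models locally."))
```

Setting `"stream": False` returns one JSON object instead of a stream of partial chunks, which keeps a first smoke test simple.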

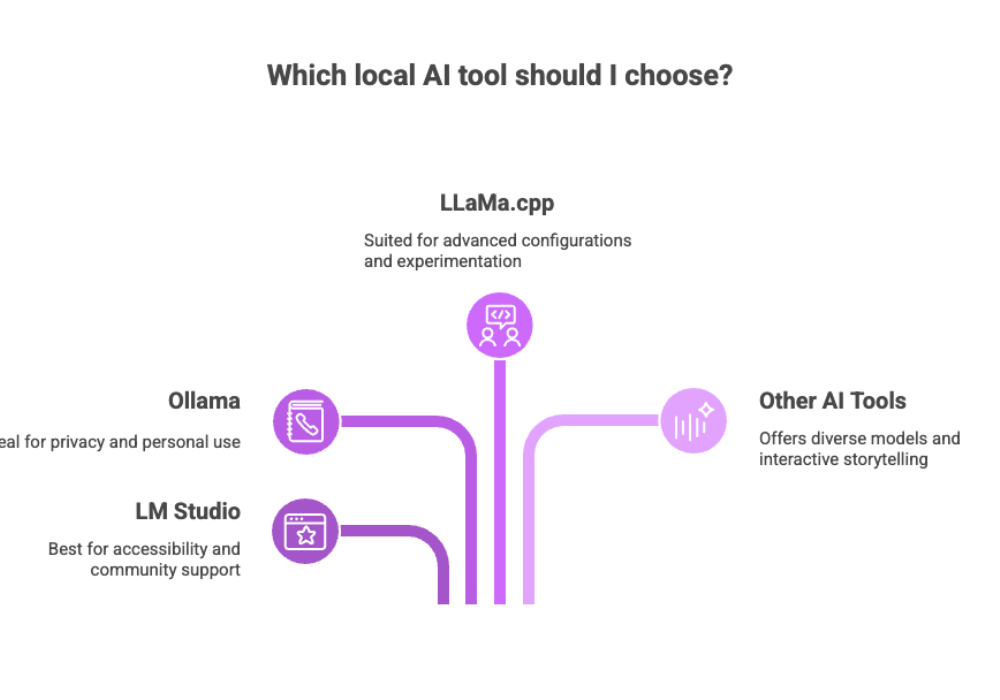

Popular Local LLM Tools

When it comes to local AI, a handful of AI tools stand out. Each offers unique strengths, making it easier to run LLMs without deep expertise.

1. LM Studio

Known as one of the most accessible platforms for running local LLMs.

Supports many models, including open source models.

Offers an intuitive interface for software development workflows.

Strong community support with guides, extensions, and plug-ins.
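LM Studio can also serve the loaded model through a local server with an OpenAI-compatible API. The sketch assumes that server is running on its default port (1234); `local-model` is a placeholder for the identifier LM Studio shows for your loaded model, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LMSTUDIO_CHAT_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_payload(model: str, user_message: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload with one user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


def chat(model: str, user_message: str) -> str:
    """Send one chat turn to the local server and return the reply text."""
    request = urllib.request.Request(
        LMSTUDIO_CHAT_URL,
        data=json.dumps(build_chat_payload(model, user_message)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.load(resp))


# Usage (requires LM Studio's server to be running):
# print(chat("local-model", "Explain quantization in one sentence."))
```

Because the request shape follows the OpenAI chat format, code written this way can often be pointed at other OpenAI-compatible local servers by changing only the URL.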

2. Ollama

Simple to use, with an emphasis on complete privacy.

Ollama supports multiple models out of the box.

Best for personal use or for teams that want full control over running their AI models locally.

Lightweight, cross-platform (Windows, macOS, Linux).

3. llama.cpp

Designed for developers who want advanced configurations.

Perfect for experimenting with local LLMs in cross-platform environments.

Suited for those interested in fine-tuned open source models.
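llama.cpp ships an HTTP server (`llama-server`) alongside its command-line tools. The sketch assumes that server is running locally on its default port (8080) and calls its native `/completion` endpoint, where `n_predict` caps the number of generated tokens; the model path in the comment and the helper names are placeholders.

```python
import json
import urllib.request

# Assumption: llama.cpp's server was started locally, for example with
# `llama-server -m ./models/model.gguf` (placeholder path), on port 8080.
LLAMACPP_COMPLETION_URL = "http://localhost:8080/completion"


def build_completion_payload(prompt: str, n_predict: int = 128,
                             temperature: float = 0.8) -> dict:
    """Payload for llama.cpp's native /completion endpoint."""
    return {"prompt": prompt, "n_predict": n_predict, "temperature": temperature}


def complete(prompt: str, **settings) -> str:
    """Send a completion request and return the generated text ('content' field)."""
    request = urllib.request.Request(
        LLAMACPP_COMPLETION_URL,
        data=json.dumps(build_completion_payload(prompt, **settings)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["content"]


# Usage (requires a running llama-server):
# print(complete("Write a haiku about local inference.", n_predict=64))
```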

4. Other AI Tools

GPT4All: Offers various AI models for offline use.

KoboldAI: Targeted at interactive storytelling and chat.

If you need expert guidance or want to discuss your AI needs, contact Cognativ’s team of AI and software experts.

When choosing a tool, consider:

Ease of use vs. customization.

Range of supported models.

Access to community resources and plug-ins.

Running Local LLMs

Running local LLMs (large language models on a local machine) is easier than it sounds, but it requires preparation.

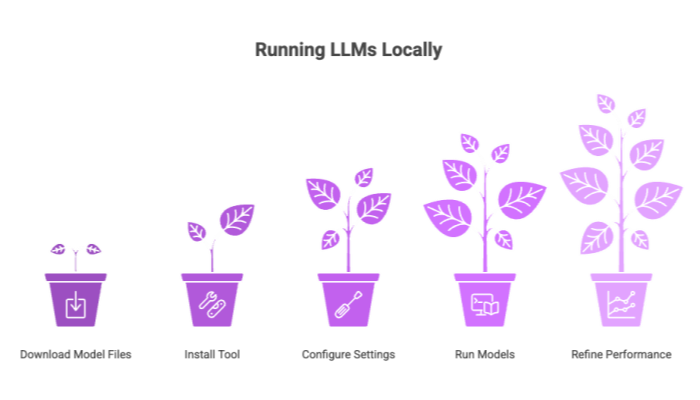

Step-by-Step Guide

Install the tool: Use Ollama, LM Studio, or another tool of choice.

Download model files: Each tool provides instructions for downloading different models.

Configure settings: Adjust advanced configurations such as memory allocation, precision, and GPU usage.

Run models: Start running models locally and test their output.

Refine performance: Apply techniques like fine-tuning, quantization, or data augmentation.
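To make quantization concrete, the sketch below applies simple symmetric int8 quantization to a handful of weights: each value is stored as an integer in [-127, 127] plus one shared scale factor. This is a simplified illustration with hypothetical function names; local runtimes such as llama.cpp use more elaborate block-wise formats, often at lower bit widths such as 4 bits per weight.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp into the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q, scale)
# The rounding error per weight is at most about scale / 2.
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Storing one byte per weight instead of four (32-bit floats) cuts memory roughly fourfold, at the cost of the small rounding error printed on the last line.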

Hardware and Software Needs

Compatible operating system (Windows, macOS, Linux).

Adequate hardware (at least 16 GB of RAM for most large language models; bigger models need more).

Optional: a GPU to accelerate larger models.
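On Linux and macOS you can check installed RAM against that guideline from Python. This sketch relies on POSIX `os.sysconf` values, which Windows does not provide, so it returns `None` there; it reports decimal gigabytes, the function names are hypothetical, and the 16 GB threshold is the rough rule of thumb above rather than a hard requirement.

```python
import os
from typing import Optional


def total_ram_gb() -> Optional[float]:
    """Total physical RAM in decimal GB, or None where os.sysconf is unavailable (e.g. Windows)."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9
    except (AttributeError, ValueError, OSError):
        return None


def meets_ram_guideline(ram_gb: Optional[float], minimum_gb: float = 16.0) -> bool:
    """Compare detected RAM against the 16 GB guideline; unknown RAM never passes."""
    return ram_gb is not None and ram_gb >= minimum_gb


ram = total_ram_gb()
if ram is None:
    print("Could not detect RAM on this platform; check your system settings.")
else:
    print(f"Detected ~{ram:.1f} GB of RAM; meets 16 GB guideline: {meets_ram_guideline(ram)}")
```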

Optimizing Performance

Start with smaller models for testing.

Scale up to large language models once your local machine proves stable.

Monitor usage to avoid resource bottlenecks.
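Generation speed is one easy metric to watch. Ollama's non-streaming responses (and the final chunk of a streamed response) include `eval_count`, the number of generated tokens, and `eval_duration`, measured in nanoseconds; the hypothetical helper below turns them into tokens per second. The sample values are made up for illustration.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from Ollama response metadata (eval_duration is in nanoseconds)."""
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return response.get("eval_count", 0) / (duration_ns / 1e9)


# Made-up sample metadata: 120 tokens generated in 4 seconds.
sample = {"eval_count": 120, "eval_duration": 4_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")
```

A sharp drop in this number after switching to a larger model is often a sign that it no longer fits in GPU memory and is partly running on the CPU.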

By following these steps, you can run LLMs confidently and make local AI part of your daily software development or your existing applications.

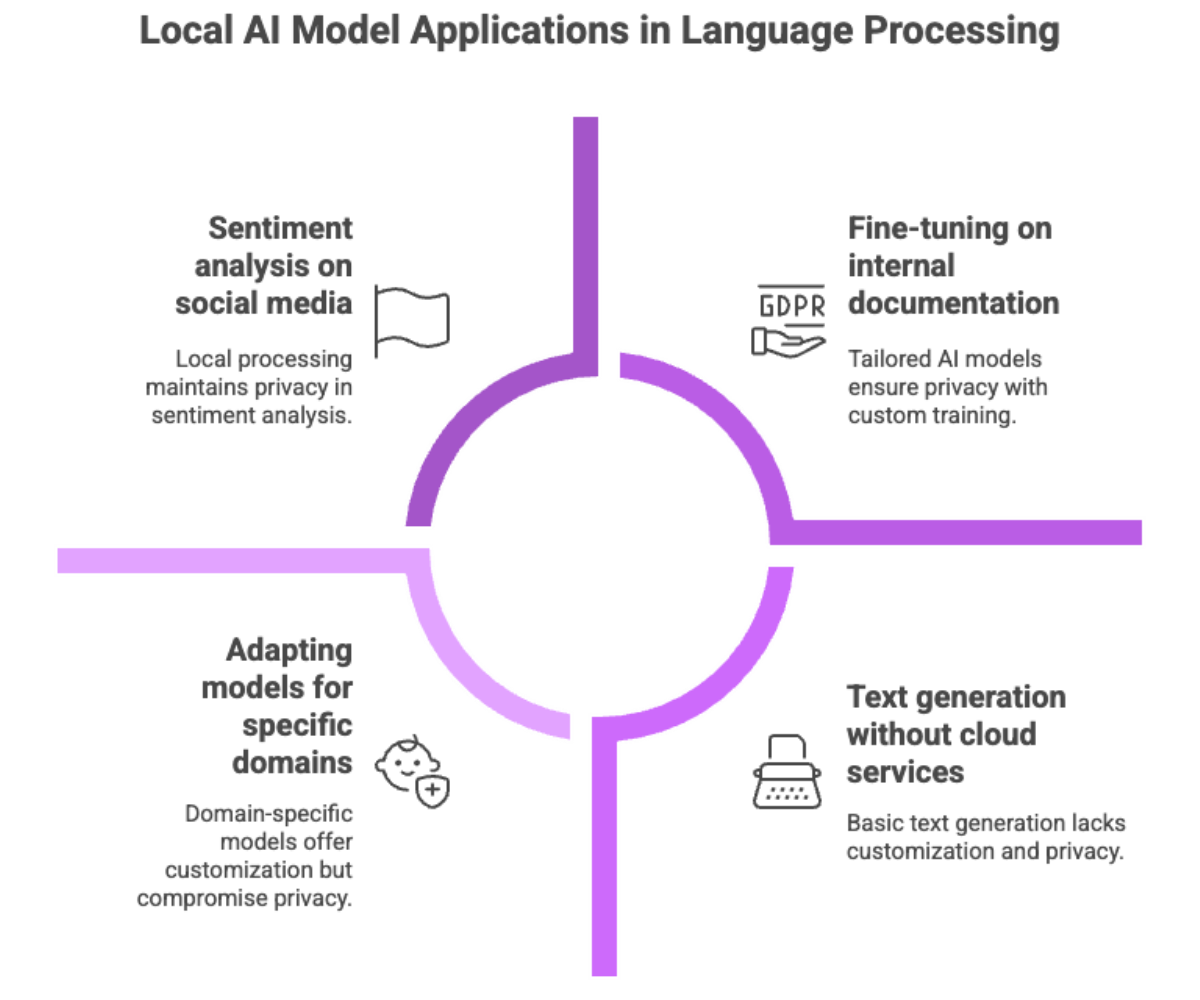

Language Processing with Local AI

One of the most compelling uses of local AI models is in language processing. With large language models running directly on your local machine, you can unlock advanced language understanding and generation capabilities without sending data to third-party servers.

Key Language Processing Tasks

Text generation: Create essays, stories, or code snippets without requiring cloud services.

Semantic search: Search knowledge bases or chat logs by meaning rather than exact keywords, by embedding text as vectors and comparing them.

Sentiment analysis: Analyze reviews, social media, or customer support tickets locally.

Language translation: Run translation models locally to convert text between languages.

Text summarization: Extract key insights from documents, research, or meeting transcripts.
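Semantic search boils down to comparing embedding vectors. The sketch below ranks documents by cosine similarity to a query; in practice the vectors would come from a local embedding model, while the tiny three-dimensional vectors and document IDs here are hand-written stand-ins.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_documents(query: list[float], docs: dict, top_k: int = 3) -> list:
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hand-written stand-ins for real embeddings.
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-faq": [0.7, 0.3, 0.1],
}
print(rank_documents([1.0, 0.2, 0.0], docs, top_k=2))
```

For larger collections, a vector index replaces the linear scan, but the similarity measure stays the same.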

Custom Training and Fine-Tuning

Running AI models locally also lets you fine-tune models on your own datasets. For example:

A company could fine-tune a large language model on internal documentation to power existing applications.

Researchers can adapt open source models to specific domains like legal, medical, or scientific text.

Educators can train smaller language models on academic datasets for personal use or classrooms.

By keeping everything on a local server or desktop, organizations ensure complete privacy while building fully customizable solutions.
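Most fine-tuning workflows start by collecting examples in a structured file, commonly JSON Lines with one example per line. The sketch below writes (prompt, completion) pairs in that shape; the field names `prompt` and `completion` are an assumption, since each fine-tuning tool documents its own expected format, and the example pairs are hypothetical.

```python
import json


def to_jsonl(examples: list[tuple[str, str]]) -> str:
    """Serialize (prompt, completion) pairs as JSON Lines, one example per line.

    The "prompt"/"completion" field names are an assumed format; adjust them
    to whatever your fine-tuning tool expects.
    """
    return "".join(
        json.dumps({"prompt": p, "completion": c}, ensure_ascii=False) + "\n"
        for p, c in examples
    )


# Hypothetical examples drawn from internal documentation.
examples = [
    ("How do I reset my VPN token?", "Open the self-service portal and choose 'Reset token'."),
    ("Who approves travel requests?", "Your direct manager approves travel requests."),
]
print(to_jsonl(examples), end="")
```

Keeping this file on the same machine that runs the fine-tuning job means the training data never leaves your infrastructure.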

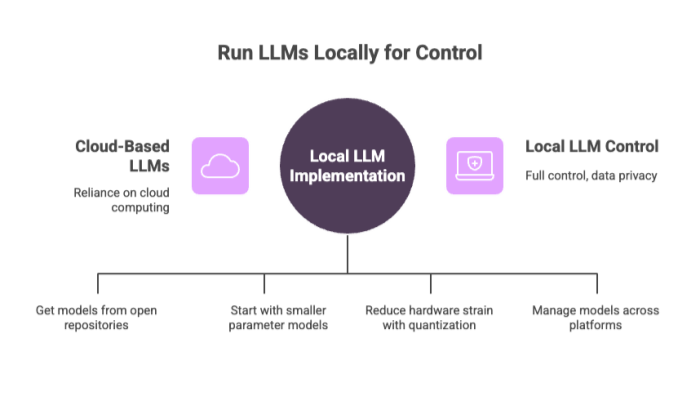

Large Language Models

The rise of large language models (LLMs) has revolutionized software development, customer support, and creative writing. But the catch has always been the reliance on cloud computing. With the latest AI tools, you can now run LLMs directly on your machine.

Benefits of Running LLMs Locally

Full control: Choose how your local models are configured, updated, or combined.

Data privacy: Keep sensitive data such as medical notes or financial records off cloud servers.

Reduced costs: Eliminate dependency on external providers that charge per API request or per token.

Offline operation: Once model files are downloaded, many local LLM setups run without an internet connection, making them reliable in offline environments.

Practical Tips for Running Local LLMs

Download model files from reputable open source repositories.

Test smaller models first before moving to larger models with tens of billions of parameters.

Use quantization to reduce memory use and hardware strain.

Use frameworks like Ollama or LM Studio to manage installed models and test them across operating systems.
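When a repository publishes a SHA-256 checksum for a model file, verifying it after download guards against corrupted or tampered files. The sketch hashes the file in 1 MiB chunks so multi-gigabyte model files never need to fit in memory; the function names are hypothetical, and the path and checksum in the usage comment are placeholders for your file and the value the repository lists.

```python
import hashlib


def sha256_of_file(path, chunk_size: int = 1 << 20) -> str:
    """Compute a file's SHA-256 hex digest, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path, expected_sha256: str) -> bool:
    """True if the file's digest matches the published checksum (case-insensitive)."""
    return sha256_of_file(path) == expected_sha256.strip().lower()


# Usage (placeholder path and checksum):
# ok = verify_model_file("models/model.gguf", "<checksum published by the repository>")
```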

Using LLMs in Business

Imagine a customer support team with thousands of chat logs. Instead of sending them to cloud services, the team could run LLMs locally for:

Semantic search across tickets.

Auto-generating responses with fine-tuned models.

Monitoring sentiment in real time while maintaining data privacy.
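For the sentiment-monitoring case, one simple pattern is to ask a local model for a one-word label and then normalize its reply. The sketch below covers only the prompt and the parsing; sending the prompt depends on your tool (for example, Ollama's or LM Studio's local API), and the prompt wording, label set, and function names are assumptions to adapt.

```python
LABELS = ("positive", "neutral", "negative")


def build_sentiment_prompt(ticket_text: str) -> str:
    """Prompt asking the model for a single sentiment label."""
    return (
        "Classify the sentiment of this customer support message as "
        "positive, neutral, or negative. Reply with one word only.\n\n"
        f"Message: {ticket_text}"
    )


def parse_sentiment(model_reply: str) -> str:
    """Map a free-form reply onto a known label, falling back to 'unknown'."""
    words = model_reply.strip().lower().split()
    first = words[0].strip(".,!?:;\"'") if words else ""
    return first if first in LABELS else "unknown"


print(parse_sentiment("Negative."))
print(parse_sentiment("It is hard to say"))
```

Falling back to `unknown` instead of guessing keeps malformed replies from skewing the sentiment counts.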

In this way, local AI models extend the power of large language models while maintaining efficiency and security.

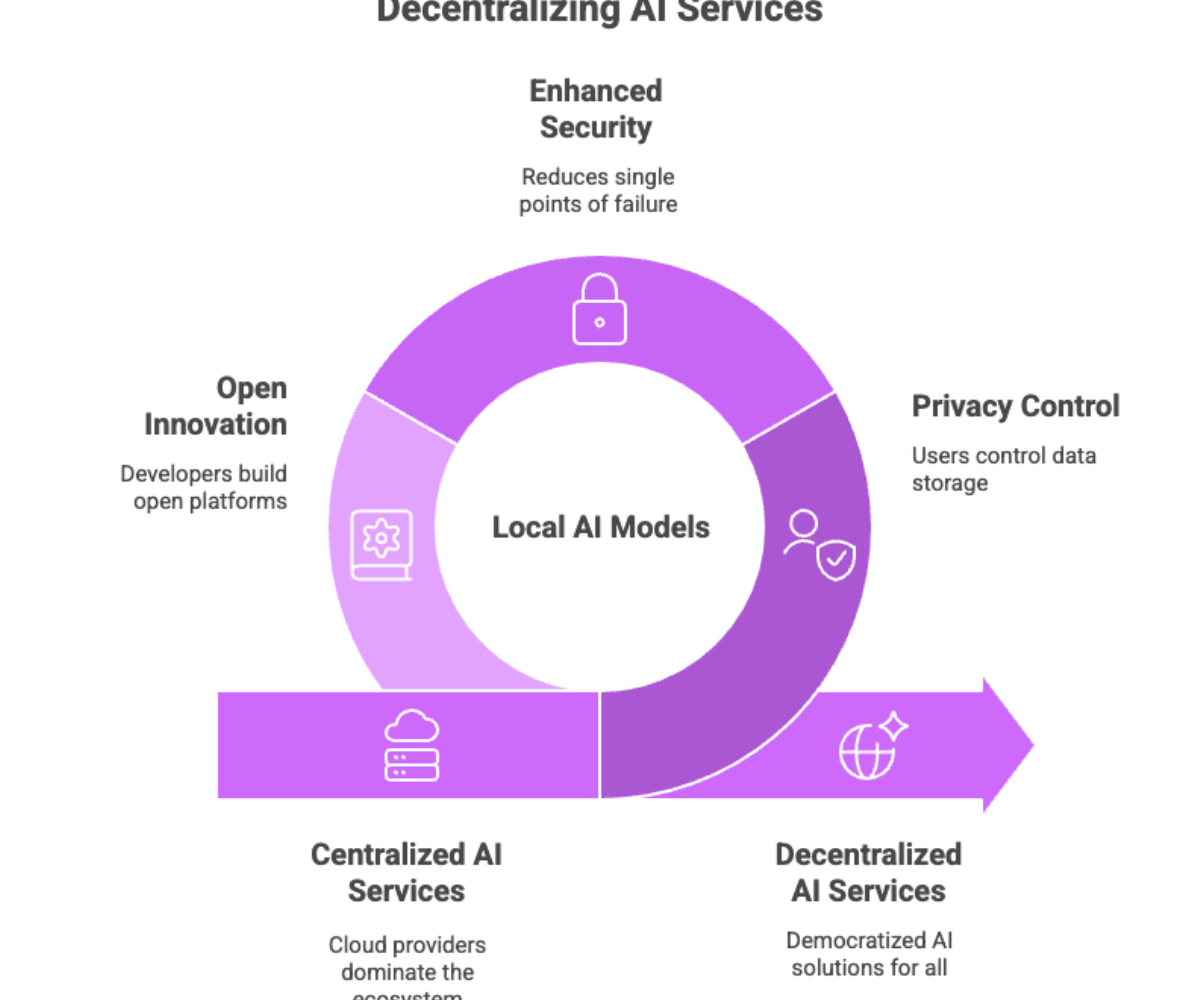

Decentralization of AI Services

A broader movement is underway: the decentralization of AI services. For years, the AI ecosystem was dominated by a handful of cloud providers, forcing developers to rely on external APIs and send sensitive data to third parties. With local AI models, the tide is shifting.

Why Decentralization Matters

Privacy concerns: More users want to control how their data is stored and processed.

Security: Decentralized systems reduce single points of failure that hackers target.

Innovation: By removing gatekeepers, developers can build open platforms and experiment with various AI models.

Local AI as a Pillar of Decentralization

Running AI models locally embodies decentralization because:

It runs without a constant internet connection.

It encourages the use of open source models over closed APIs.

It promotes fully customizable AI tools where you control the model files, advanced configurations, and output.

Real-World Trends

Open source models like Llama and Falcon are gaining traction.

LM Studio communities already count tens of thousands of developers.

Startups are integrating local AI into existing applications to reduce bandwidth costs and dependency on cloud services.

The decentralization of AI services isn’t just a technical shift; it’s a cultural one. It signals a move toward democratized AI solutions, where developers, businesses, and even individuals can run their own local models and tailor them to specific tasks without gatekeepers.

Conclusion and Next Steps

Local AI models are no longer a niche experiment; they are quickly becoming a mainstream way to leverage artificial intelligence. Whether you’re a developer who wants more freedom in software development, a researcher with privacy concerns, or a business aiming to cut costs, the ability to run models locally offers clear advantages.

Key Takeaways

Running AI models locally ensures complete privacy by keeping sensitive data off third-party servers.

Tools like Ollama, LM Studio, and llama.cpp simplify the process of running local LLMs.

You can fine-tune open source models for specific tasks in industries like finance, healthcare, or education.

Decentralization of AI services empowers developers to innovate without depending on cloud services.

What You Can Do Next

Download a tool like Ollama or LM Studio.

Experiment with different models: start with smaller models before moving to larger language models.

Try semantic search or language understanding tasks with your own datasets.

Share your experiences with the growing community to help advance local AI technology.

The Future of Local AI

As hardware improves and open source models mature, expect local AI models to power more applications. From local servers in enterprises to wearable devices for personal use, local AI is shaping a more secure, affordable, and innovative AI landscape.

Running AI models locally isn’t just a technical choice; it’s a statement about ownership, privacy, and control. By embracing local AI, you’re contributing to a future where intelligence is not centralized, but distributed, resilient, and fully customizable.