What Is Local AI? A Guide to Secure and Efficient On-Device Solutions

Artificial intelligence (AI) refers to the creation of computer systems that can perform tasks traditionally requiring human intelligence — tasks like language translation, speech recognition, real-time decision making, and predictive analysis. Over the past decade, AI has become integrated into daily life through voice assistants, recommendation systems, and smart automation.

Traditionally, AI has been delivered through cloud AI, which relies on remote servers for data processing. But there’s a growing alternative: local AI.

Local AI refers to the practice of running AI models directly on local devices — such as laptops, desktops, smartphones, or even IoT systems — instead of relying solely on cloud-based services.

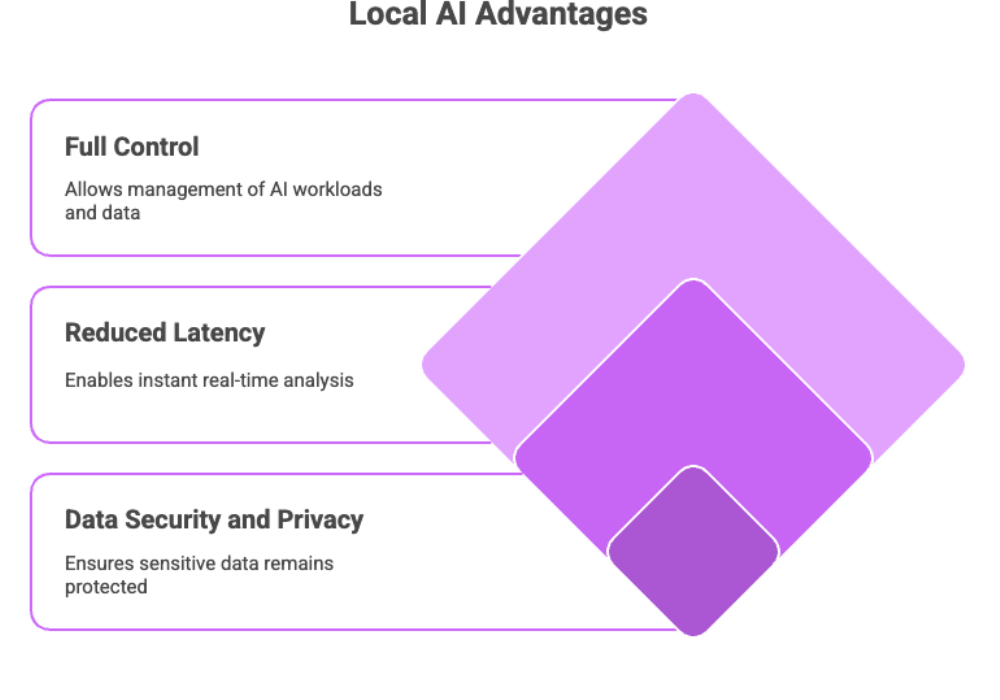

This approach comes with significant advantages:

- Data security and data privacy: No sensitive data has to leave your machine.
- Reduced latency: Real-time analysis happens instantly without depending on an internet connection.
- Full control: You decide how AI workloads are managed and what training data is used.

As a result, local AI is a compelling option for industries where sensitive information, intellectual property, or proprietary information cannot risk exposure.

How Local AI Works

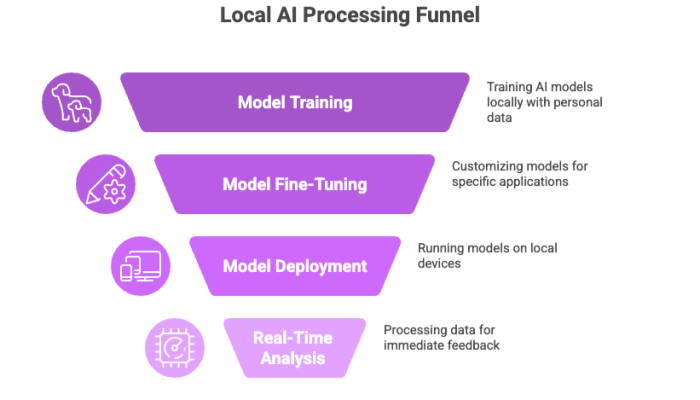

So, how does local AI actually function? The process revolves around running AI models and performing data processing directly on local hardware, without outsourcing to cloud services or external servers.

Key Mechanisms:

- Model Training and Fine-Tuning
  - Developers can perform model training locally, using their own training data, ensuring data ownership and privacy.
  - Fine-tuning allows customization of generative AI models or large AI models to better fit specific use cases.
- Running AI Models Locally
  - Once a model is trained or downloaded, users can run AI models locally on personal computers or even mobile devices.
  - This allows offline functionality: no internet connectivity is required for tasks like translation, summarization, or anomaly detection.
- Real-Time Analysis
  - By processing data directly on local devices, AI can handle real-world applications that require immediate feedback.
  - Examples include security systems that detect intrusions instantly or healthcare devices performing real-time analysis of patient signals.
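To make the real-time analysis idea concrete, here is a minimal sketch of an on-device anomaly detector. It is an illustration only: the class name `RollingAnomalyDetector`, the window size, the z-score threshold, and the sensor readings are all assumptions, not something the article prescribes. It uses only the Python standard library, so no data leaves the machine.

```python
from collections import deque
from math import sqrt


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window of values."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # only recent readings are kept in memory
        self.threshold = threshold          # z-score above which a reading is flagged

    def update(self, reading: float) -> bool:
        """Return True if `reading` is anomalous relative to the current window."""
        is_anomaly = False
        if len(self.values) >= 5:  # wait for a few samples before judging
            mean = sum(self.values) / len(self.values)
            variance = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = sqrt(variance)
            if std > 0 and abs(reading - mean) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.values.append(reading)  # keep outliers out of the baseline
        return is_anomaly


detector = RollingAnomalyDetector(window=10, threshold=3.0)
readings = [70, 71, 69, 70, 72, 71, 70, 140, 71, 69]  # hypothetical sensor values
flags = [detector.update(r) for r in readings]
print(flags)
```

Each reading is judged as soon as it arrives, using only a small window held in memory, which is the kind of immediate feedback loop a local device can provide. A production system would likely use a trained model rather than a z-score rule.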

Why It Matters

Unlike cloud AI, which often requires sending data to centralized cloud-based services, local AI removes the need for constant connectivity. This reduces data privacy concerns and lets organizations retain control over their sensitive data.

Benefits of Local AI

The key benefits of running local AI models go far beyond convenience.

1. Improved Data Security and Privacy

- Local AI keeps sensitive data on-device.
- This reduces exposure to cyberattacks that can occur when sending data to third-party providers.
- For industries like healthcare, finance, or legal, data ownership is critical, and local AI offers a secure alternative to cloud-based AI.

2. Reduced Latency and Faster Response

-

Local devices don’t need to query cloud servers for every task.

-

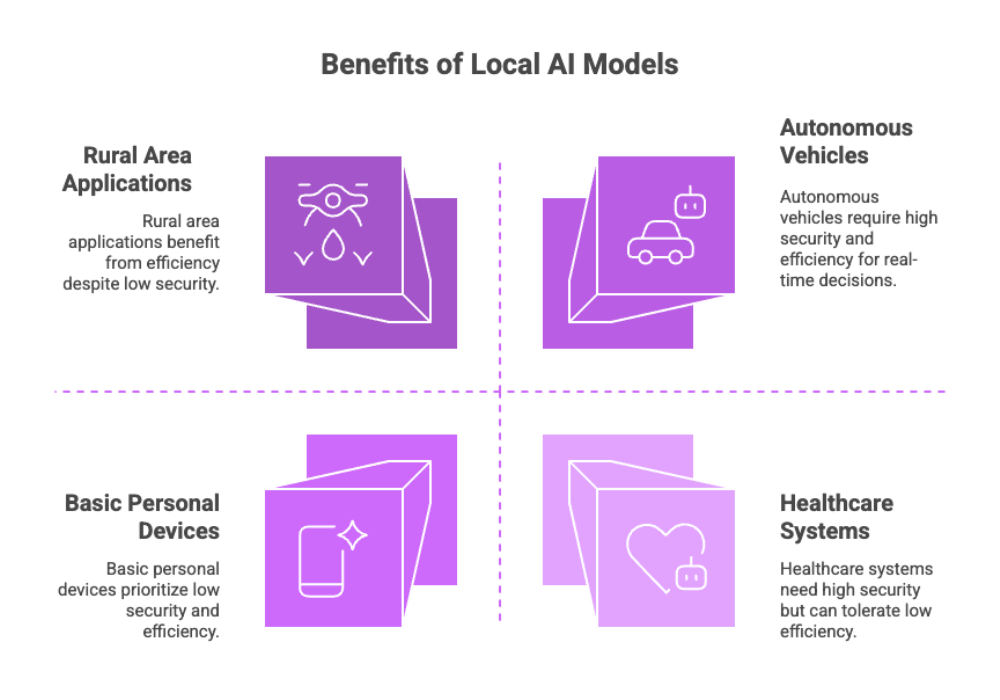

For real time analysis and real time decision making, this near-instant performance is invaluable — think autonomous vehicles or industrial automation.
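A back-of-the-envelope latency model shows where the difference comes from. This is a simplified sketch: the function names and every number below are hypothetical, and it ignores queuing, TLS handshakes, and download time for the response.

```python
def cloud_latency_ms(payload_kb, uplink_mbps, round_trip_ms, server_inference_ms):
    """Rough end-to-end latency for one cloud request, in milliseconds."""
    # kilobits divided by megabits-per-second yields milliseconds
    upload_ms = payload_kb * 8 / uplink_mbps
    return upload_ms + round_trip_ms + server_inference_ms


def local_latency_ms(device_inference_ms):
    """On-device latency has no network component in this simplified model."""
    return device_inference_ms


# Hypothetical numbers: a 500 KB image, a 10 Mbps uplink, a 60 ms round trip.
cloud = cloud_latency_ms(payload_kb=500, uplink_mbps=10,
                         round_trip_ms=60, server_inference_ms=20)
local = local_latency_ms(device_inference_ms=150)
print(f"cloud: {cloud:.0f} ms, local: {local:.0f} ms")
```

In this example the local device is slower at inference itself yet still finishes first, because the upload dominates the cloud path. With a small payload and a fast connection the comparison can go the other way, so it is worth measuring for a given workload.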

3. Autonomy and Offline Functionality

- Because it works without internet connectivity, offline AI can run anywhere.
- Applications in rural areas, military operations, or personal devices benefit from independence from networks.

4. Cost Efficiency

- Avoids ongoing cloud storage and processing fees.
- For long-term AI workloads, local AI replaces recurring cloud fees with a mostly upfront investment in local hardware.
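Whether local hardware pays off depends on how long the workload runs. The sketch below estimates a break-even point; the function name `break_even_months` and all prices are hypothetical, and the model assumes flat monthly costs with no hardware depreciation or resale value.

```python
import math


def break_even_months(hardware_cost, local_monthly_cost, cloud_monthly_cost):
    """First month at which cumulative cloud spend meets or exceeds local spend.

    Returns None when the cloud option is never more expensive per month.
    """
    monthly_saving = cloud_monthly_cost - local_monthly_cost
    if monthly_saving <= 0:
        return None
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical figures: a 3,000 workstation, 50/month for power and upkeep,
# versus 300/month in cloud compute and storage fees.
months = break_even_months(hardware_cost=3000,
                           local_monthly_cost=50,
                           cloud_monthly_cost=300)
print(months)
```

Note that local deployments still carry some recurring cost (electricity, maintenance), which is why the calculation subtracts it rather than treating local operation as free.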

5. Emerging Energy-Efficient Options

- With optimization techniques like quantization and pruning, even large AI models are becoming energy-efficient enough to run on smaller machines.
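For readers unfamiliar with these two techniques, here is a toy sketch in plain Python. It shows symmetric 8-bit quantization and magnitude-based pruning on a short list of weights. The function names and sample weights are assumptions for illustration; real frameworks apply these ideas per layer or per channel with far more care.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


def prune_smallest(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


weights = [0.5, -1.27, 0.02, 0.9]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_smallest(weights, fraction=0.5)
print(quantized, pruned)
```

Quantization stores each weight in one byte instead of four (for 32-bit floats) at the cost of a small rounding error, while pruning produces zeros that can be skipped or compressed. Both reduce the memory and compute a device needs.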

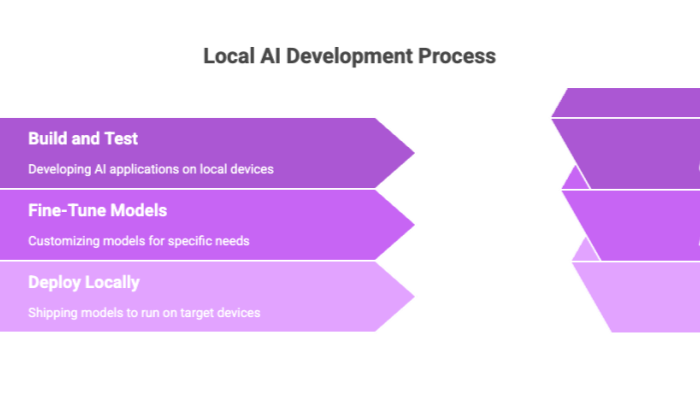

AI Development and Deployment with Local AI

Traditional AI development often requires vast cloud-based services for model training and scaling. However, local AI simplifies the pipeline:

- Developers can build and test AI applications directly on laptops or workstations.
- Local AI models can be fine-tuned for domain-specific needs without exposing proprietary information.
- Deployment becomes easier: instead of uploading models to cloud AI, developers simply ship models that run on target devices.

This approach not only speeds up iteration but also strengthens data privacy. Many developers now prefer open source models, which can be modified and customized without vendor lock-in.

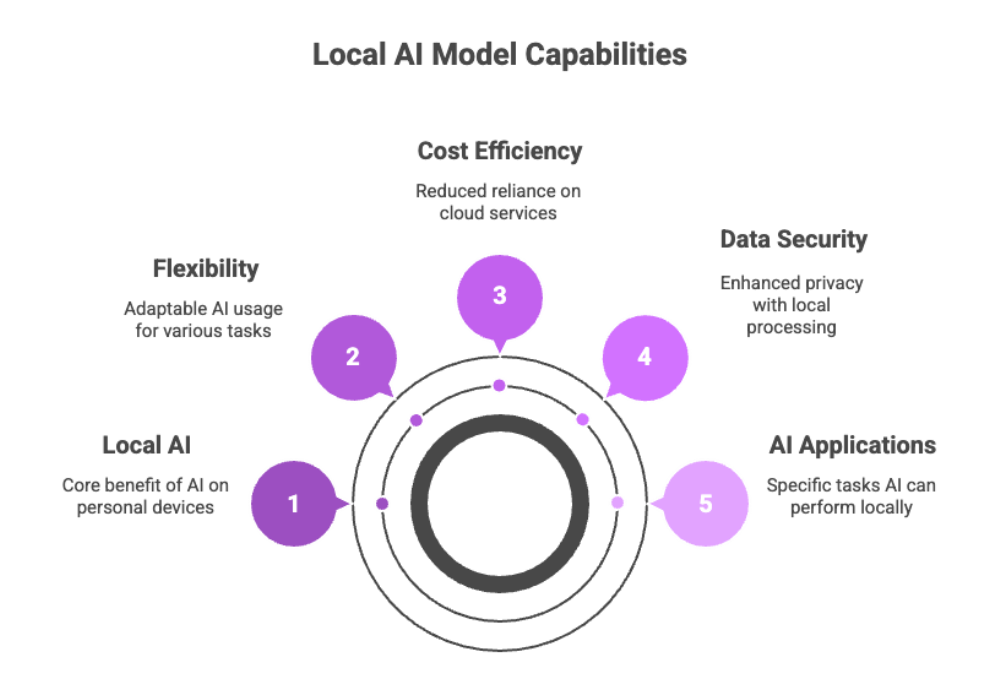

AI Models Locally

When people hear “AI,” they often think of large language models running in big data centers. But thanks to optimization, you can now run AI models locally on personal devices.

Local AI Models in Action:

- Text Generation: Drafting articles, writing blog posts, or answering questions.
- Image Generation: Creating illustrations and marketing materials without needing cloud-based services.
- AI Applications: Translation, summarization, and making AI accessible across devices.

Running AI Models Efficiently

- Smaller models can run on CPUs with limited computational power.
- Larger models often require GPUs but can still be managed with optimized frameworks.

By running AI models locally, users gain flexibility, cost efficiency, and enhanced data security.
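A quick way to judge whether a model is small enough for a given machine is to estimate the memory its weights occupy: parameter count times bits per weight, divided by eight. The sketch below does that arithmetic. The function names and the 1.2x overhead factor (a stand-in for activations and runtime buffers) are assumptions, and real usage varies by framework.

```python
def weight_memory_gb(num_parameters, bits_per_weight):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


def fits_in_memory(num_parameters, bits_per_weight, available_gb, overhead=1.2):
    """Rough check with an assumed overhead factor for runtime buffers."""
    return weight_memory_gb(num_parameters, bits_per_weight) * overhead <= available_gb


params = 7_000_000_000  # a hypothetical 7-billion-parameter model
print(weight_memory_gb(params, 16))                # 16-bit weights
print(weight_memory_gb(params, 4))                 # 4-bit quantized weights
print(fits_in_memory(params, 4, available_gb=8))   # laptop with 8 GB free
```

The same model that needs about 14 GB at 16 bits shrinks to about 3.5 GB at 4 bits, which is the difference between needing a dedicated GPU and fitting on an ordinary laptop.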

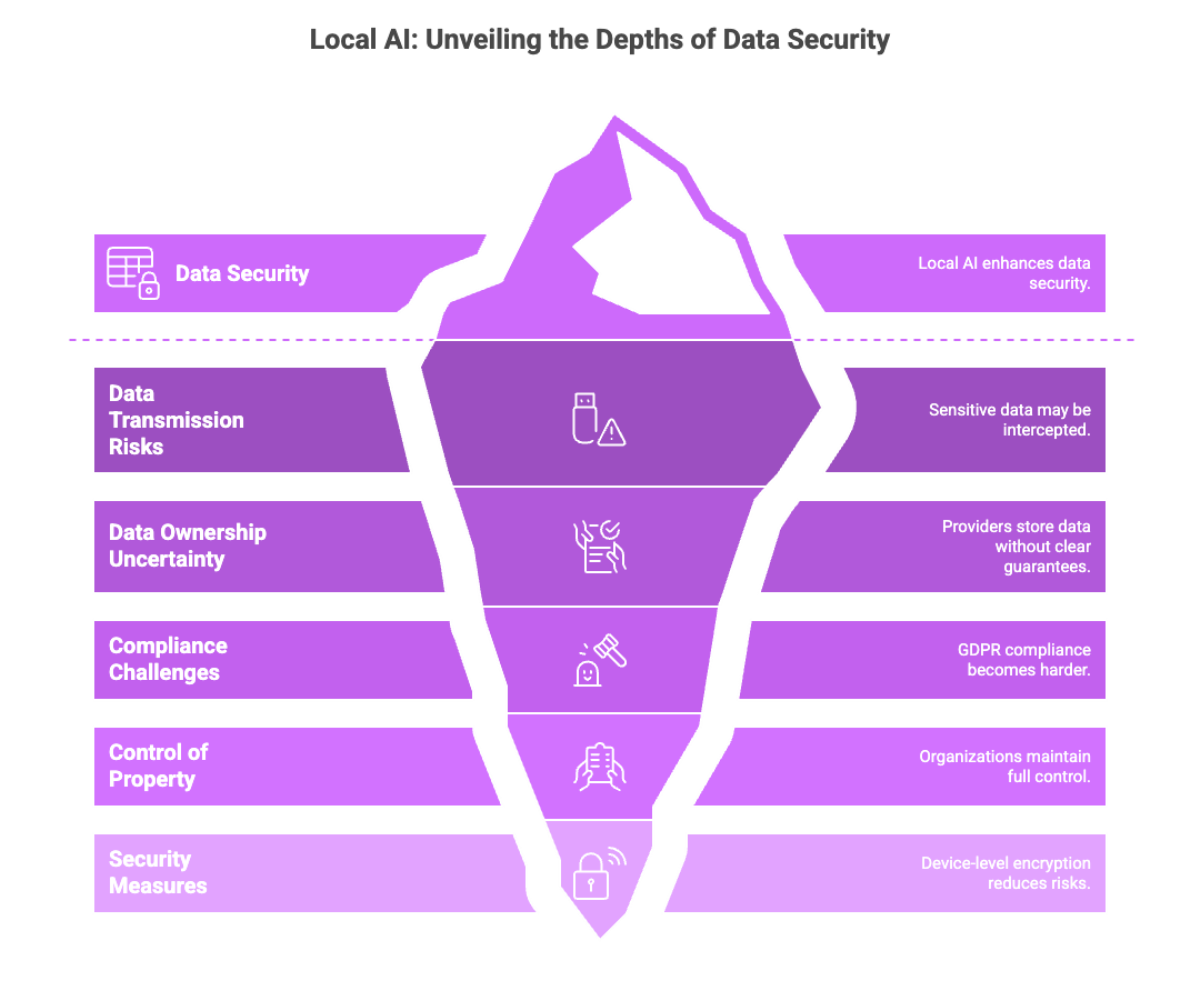

Data Security and Privacy

One of the strongest arguments for local AI is data security. When you use cloud-based services, information of every kind, from raw text to images, often needs to be uploaded to remote servers. This creates multiple risks:

- Sensitive data may be intercepted during data transmission.
- Providers may store sensitive information without clear data ownership guarantees.
- Compliance with data privacy regulations like GDPR becomes harder when sending data outside controlled environments.

With local AI, however:

- Data privacy is safeguarded because everything is processed locally.
- Developers and organizations maintain full control of proprietary information and intellectual property.
- Data security measures such as device-level encryption, offline storage, and locally hosted AI environments further reduce risks.

This is why local AI can be a game changer for industries like healthcare, law, and defense, where mishandling sensitive information can lead to severe consequences.

Cloud AI vs Local AI

While both approaches are powerful, their key benefits and limitations differ:

| Aspect | Cloud AI | Local AI |
|---|---|---|
| Data Processing | Relies on cloud-based solutions and centralized cloud servers. | Processes data directly on local devices. |
| Scalability | Very high; can scale across millions of users easily. | Limited by local hardware and computational power. |
| Latency | Dependent on internet connectivity and round trips to the server. | Instant, enabling real-time analysis and real-time decision making. |
| Data Privacy | Data leaves the user’s device, raising data privacy concerns. | Local AI eliminates most risks since data stays local. |
| Cost | Recurring fees for cloud storage and compute. | More upfront investment in own hardware, but greater cost efficiency long term. |
| Offline Functionality | Requires internet connection. | Provides offline AI and offline functionality. |

In short: Cloud AI is best when massive datasets, global collaboration, or elastic scaling are priorities. Local AI shines where data security, autonomy, and low latency are critical.

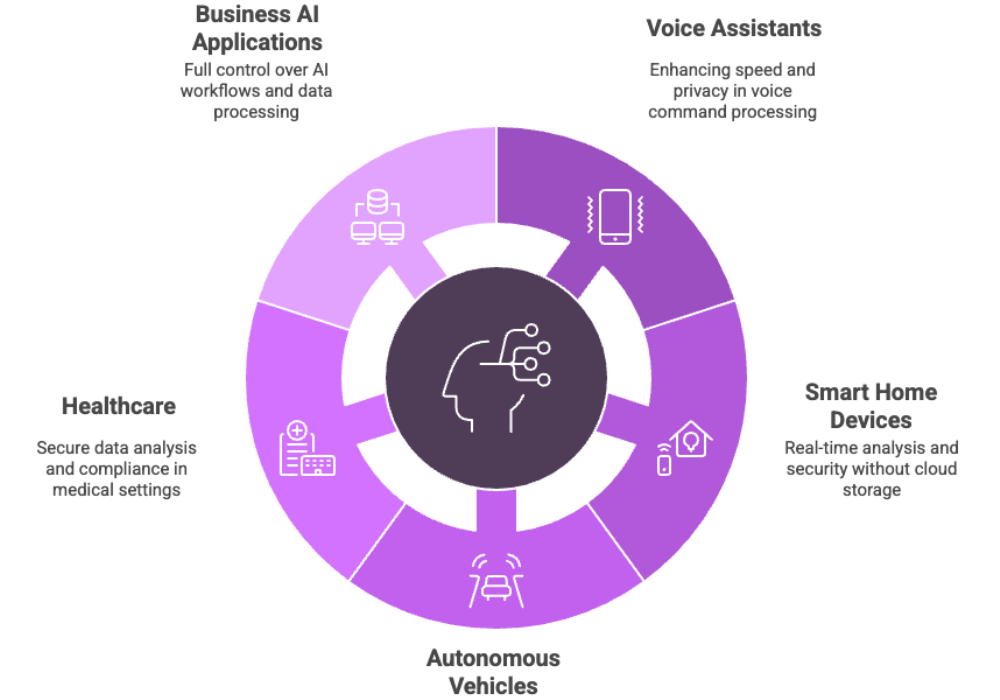

Real-World Examples of Local AI

The adoption of local AI models is already transforming industries. Here are some real-world examples:

- Voice Assistants
  - Apple’s Siri processes some commands as offline AI, handling them without cloud servers to improve speed and data privacy.
  - Other voice assistants are integrating local AI for better handling of sensitive information.
- Smart Home Devices
  - Smart cameras and thermostats now use local AI for anomaly detection, facial recognition, and security systems monitoring.
  - By running AI models locally, these devices provide real-time analysis without needing cloud storage.
- Autonomous Vehicles
  - Cars leverage local AI models to analyze sensor input for split-second decisions in navigation, safety, and collision avoidance.
  - Here, real-time decision making isn’t optional; it’s life-critical.
- Healthcare
  - Hospitals are adopting local AI to analyze medical scans and patient data on local hardware, reducing risks tied to sending data over networks.
  - This not only supports data security but also helps with compliance with data privacy regulations.
- Business AI Applications
  - Companies are deploying local AI servers to handle AI workloads like text generation, image generation, and data processing.
  - These systems reduce reliance on cloud-based AI while enabling full control over workflows.

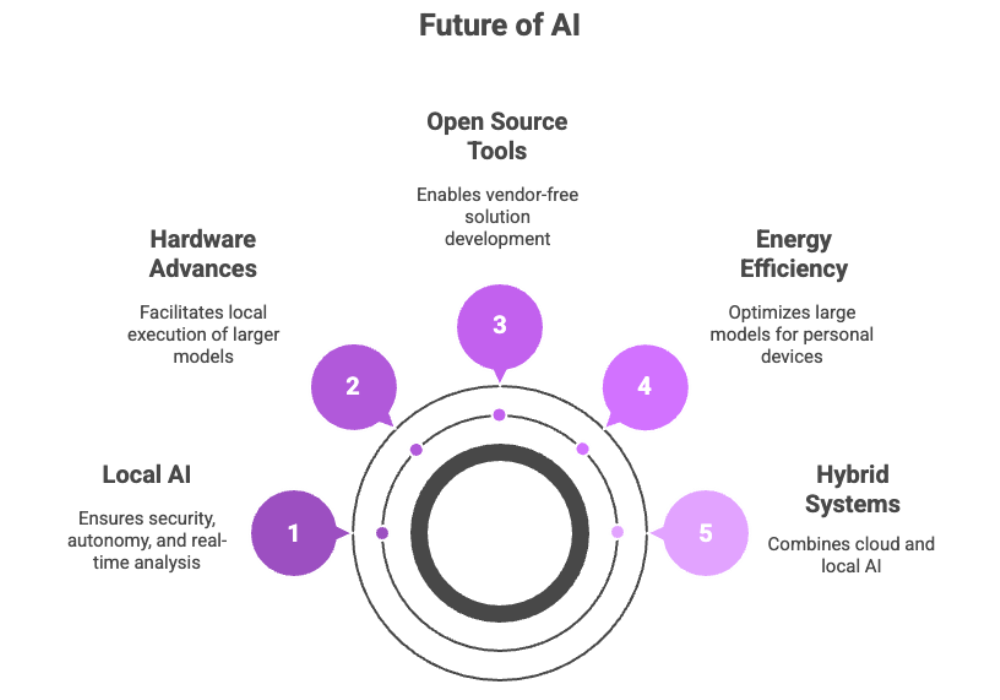

Future of Local AI

Looking ahead, the role of local AI will only grow as:

- Large AI models become more energy-efficient and optimized for personal devices.
- Open-source models and tools allow developers to build solutions without vendor lock-in.
- Hardware advances (like GPUs and AI accelerators) make it easier to run even larger models locally.
- New generative AI models become available to download and run locally, giving users even more creative power.

The future of AI won’t be cloud-only. Instead, we’ll see hybrid systems where cloud AI provides scalability, while local AI ensures security, autonomy, and real time analysis.
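One way to picture such a hybrid system is a small routing rule that decides, per request, where inference should happen. The sketch below is purely illustrative: the `Request` fields, the `choose_backend` function, and the policy itself are assumptions, and a real deployment would encode its own compliance and capacity rules.

```python
from dataclasses import dataclass


@dataclass
class Request:
    contains_sensitive_data: bool
    needs_low_latency: bool
    model_size_gb: float  # memory the required model needs


def choose_backend(request: Request, online: bool, local_memory_gb: float) -> str:
    """Return 'local', 'cloud', or 'reject' under a simple assumed policy."""
    fits_locally = request.model_size_gb <= local_memory_gb
    # Sensitive data never leaves the device in this policy.
    if request.contains_sensitive_data:
        return "local" if fits_locally else "reject"
    # Without connectivity, local is the only option.
    if not online:
        return "local" if fits_locally else "reject"
    # Latency-critical work stays local when the device can handle it.
    if request.needs_low_latency and fits_locally:
        return "local"
    # Everything else uses the cloud for its elastic capacity.
    return "cloud"


print(choose_backend(Request(True, False, 4.0), online=True, local_memory_gb=8.0))
print(choose_backend(Request(False, False, 40.0), online=True, local_memory_gb=8.0))
```

The ordering of the checks is the design choice here: privacy constraints are evaluated first, availability second, and performance preferences last, so a convenience rule can never override a data-handling rule.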

Conclusion

To summarize:

- Local AI refers to running AI models directly on local devices, instead of cloud-based services.
- The key benefits include data security, reduced latency, offline functionality, and cost efficiency.
- Local AI eliminates many data privacy concerns tied to cloud-based solutions, offering users full control over their sensitive data.
- Real-world use cases like voice assistants, autonomous vehicles, and healthcare applications prove its growing value.
- The future is hybrid: both cloud AI and local AI work together to provide scalable yet secure solutions.

Ultimately, local AI offers a secure and efficient on-device option that can be a game changer for businesses, developers, and individuals who value data ownership and real-time decision making.